In brief

DeepMind’s Gemini Robotics models gave machines the ability to plan, reason, and even look up recycling rules online before acting.

Instead of following scripts, Google’s new AI lets robots adapt, problem-solve, and pass skills between each other.

From packing suitcases to sorting trash, robots powered by Gemini Robotics-ER 1.5 showed early steps toward general-purpose intelligence.

Google DeepMind rolled out two AI models this week that aim to make robots smarter than ever. Instead of focusing on following commands, the updated Gemini Robotics 1.5 and its companion Gemini Robotics-ER 1.5 make the robots think through problems, search the internet for information, and pass skills between different robot agents.

According to Google, these models mark a “foundational step that can navigate the complexities of the physical world with intelligence and dexterity.”

“Gemini Robotics 1.5 marks an important milestone toward solving AGI in the physical world,” Google said in the announcement. “By introducing agentic capabilities, we’re moving beyond models that react to commands and creating systems that can truly reason, plan, actively use tools, and generalize.”

That last word, “generalize,” matters, because generalization is something models have long struggled with.

The robots powered by these models can now handle tasks like sorting laundry by color, packing a suitcase based on weather forecasts they find online, or checking local recycling rules to throw away trash correctly. Now, as a human, you may say, “Duh, so what?” But to do this, machines require a skill called generalization—the ability to apply knowledge to new situations.

Robots—and algorithms in general—usually struggle with this. For example, if you teach a model to fold a pair of pants, it will not be able to fold a t-shirt unless engineers programmed every step in advance.

The new models change that. They can pick up on cues, read the environment, make reasonable assumptions, and carry out multi-step tasks that used to be out of reach—or at least extremely hard—for machines.

But better doesn’t mean perfect. For example, in one experiment, the team showed the robots a set of objects and asked them to sort each one into the correct bin. The robots used their cameras to visually identify each item, pulled up San Francisco’s latest recycling guidelines online, and then placed each item where it should go, all on their own, just as a local human would.

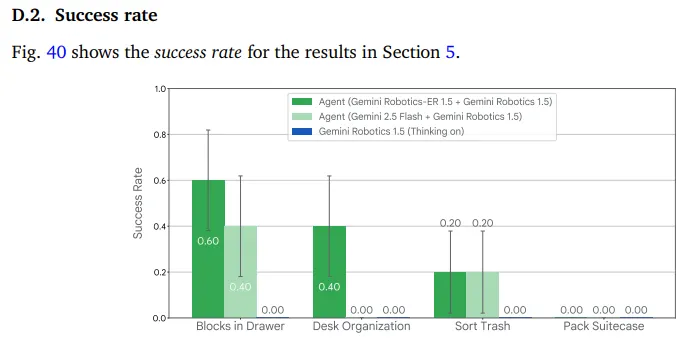

This process combines online search, visual perception, and step-by-step planning, producing context-aware decisions that go beyond what older robots could achieve. The reported success rate was between 20% and 40%; not ideal, but surprising for models that had never been able to understand those nuances before.

How Google turns robots into super-robots

The two models split the work. Gemini Robotics-ER 1.5 acts like the brain, figuring out what needs to happen and creating a step-by-step plan. It can call up Google Search when it needs information. Once it has a plan, it passes natural language instructions to Gemini Robotics 1.5, which handles the actual physical movements.

More technically speaking, the new Gemini Robotics 1.5 is a vision-language-action (VLA) model that turns visual information and instructions into motor commands, while the new Gemini Robotics-ER 1.5 is a vision-language model (VLM) that creates multistep plans to complete a mission.
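The division of labor described above can be illustrated with a toy sketch. This is not Google's code or the Gemini API: `Planner`, `Executor`, `fake_search`, and `run` are hypothetical stand-ins, and the "search" is a hard-coded string. It only shows the shape of the handoff, in which a reasoning component consults a tool, writes natural language steps, and hands them one at a time to an action component.

```python
from dataclasses import dataclass, field
from typing import Callable, List


def fake_search(query: str) -> str:
    """Hypothetical search tool: returns a comma-separated list of recyclables."""
    return "can,bottle,paper"


@dataclass
class Planner:
    """Stand-in for the reasoning model (the VLM role in the article)."""
    search: Callable[[str], str]

    def plan(self, goal: str, visible_items: List[str]) -> List[str]:
        # Consult the tool once, then write one plain-language step per item.
        rules = self.search(f"local rules for: {goal}")
        recyclable = {name.strip() for name in rules.split(",")}
        steps = []
        for item in visible_items:
            bin_name = "recycling" if item in recyclable else "trash"
            steps.append(f"put the {item} in the {bin_name} bin")
        return steps


@dataclass
class Executor:
    """Stand-in for the action model (the VLA role in the article)."""
    log: List[str] = field(default_factory=list)

    def act(self, instruction: str) -> bool:
        # A real action model would map camera input plus this text to motor
        # commands; this stub only records the instruction and reports success.
        self.log.append(instruction)
        return True


def run(goal: str, items: List[str], planner: Planner, executor: Executor) -> List[str]:
    """Plan first, then execute each step in order; return the completed steps."""
    completed = []
    for step in planner.plan(goal, items):
        if executor.act(step):
            completed.append(step)
    return completed


if __name__ == "__main__":
    for line in run("sort the trash", ["can", "banana peel"],
                    Planner(search=fake_search), Executor()):
        print(line)
```

The point of the split is that the planner never needs to know how a gripper moves, and the executor never needs to know where the recycling rules came from; the only interface between them is text.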

When a robot sorts laundry, for instance, it internally reasons through the task using a chain of thought: understanding that “sort by color” means whites go in one bin and colors in another, then breaking down the specific motions needed to pick up each piece of clothing. The robot can explain its reasoning in plain English, making its decisions less of a black box.
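As a rough illustration of that two-level reasoning, the sketch below first decides which bin a garment belongs in and then expands the decision into primitive motions, keeping a plain-English trace along the way. It is a hand-written rule, not a learned model, and the function name `sort_by_color` and the four motion names are assumptions made for the example.

```python
from typing import Dict, List, Tuple


def sort_by_color(
    garments: List[Tuple[str, str]],
) -> Tuple[Dict[str, List[str]], List[str]]:
    """Assign each (name, color) garment to a bin and record a readable trace."""
    bins: Dict[str, List[str]] = {"whites": [], "colors": []}
    trace: List[str] = []
    for name, color in garments:
        # High-level decision: interpret "sort by color" as whites vs. everything else.
        target = "whites" if color.lower() == "white" else "colors"
        trace.append(f"{name} is {color}, so it goes in the {target} bin")
        # Low-level breakdown: the motions needed to carry out that decision.
        trace.extend([
            f"reach for {name}",
            f"grasp {name}",
            f"move {name} over the {target} bin",
            f"release {name}",
        ])
        bins[target].append(name)
    return bins, trace


if __name__ == "__main__":
    result, steps = sort_by_color([("sock", "white"), ("shirt", "red")])
    print(result)
    print("\n".join(steps))
```

The trace is what makes the behavior inspectable: each placement is preceded by the reason for it, which is the property the article describes as making decisions less of a black box.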

Google CEO Sundar Pichai chimed in on X, noting that the new models will enable robots to better reason, plan ahead, use digital tools like search, and transfer learning from one kind of robot to another. He called it Google’s “next big step towards general-purpose robots that are truly helpful.”

The release puts Google in a spotlight shared with developers like Tesla, Figure AI and Boston Dynamics, though each company is taking a different approach. Tesla focuses on mass production for its factories, with Elon Musk promising thousands of units by 2026. Boston Dynamics continues pushing the boundaries of robot athleticism with its backflipping Atlas. Google, meanwhile, bets on AI that makes robots adaptable to any situation without specific programming.

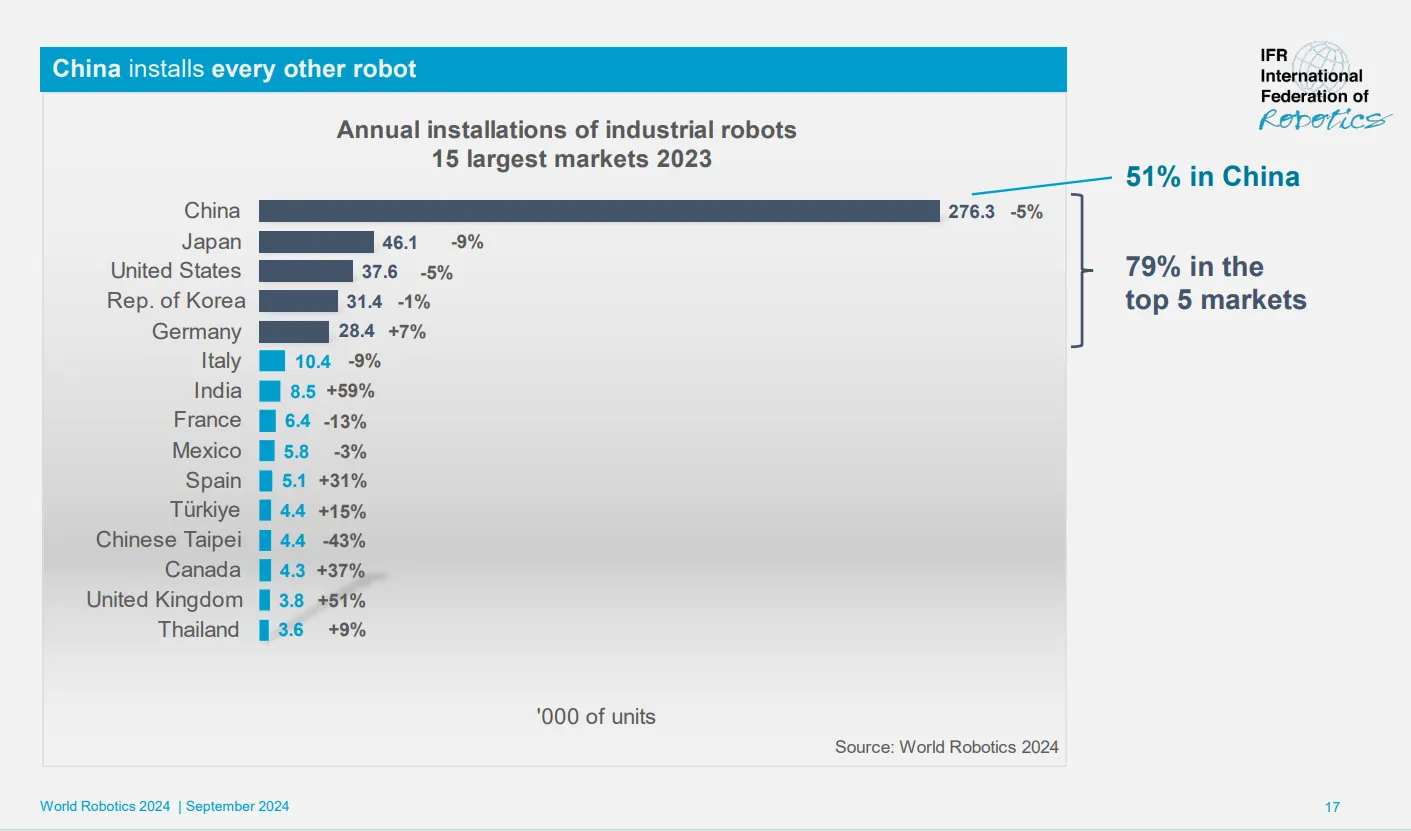

The timing matters. American robotics companies are pushing for a national robotics strategy, including establishing a federal office focused on promoting the industry at a time when China is making AI and intelligent robots a national priority. China is the world’s largest market for robots that work in factories and other industrial environments, with about 1.8 million robots operating in 2023, according to the Germany-based International Federation of Robotics.

DeepMind’s approach differs from traditional robotics programming, where engineers meticulously code every movement. Instead, these models learn from demonstration and can adapt on the fly. If an object slips from a robot’s grasp or someone moves something mid-task, the robot adjusts without missing a beat.

The models build on DeepMind’s earlier work from March, when robots could only handle single tasks like unzipping a bag or folding paper. Now they’re tackling sequences that would challenge many humans—like packing appropriately for a trip after checking the weather forecast.

For developers wanting to experiment, there’s a split approach to availability. Gemini Robotics-ER 1.5 launched Thursday through the Gemini API in Google AI Studio, meaning any developer can start building with the reasoning model. The action model, Gemini Robotics 1.5, remains exclusive to “select” (meaning “rich,” probably) partners.
