‘This is a foundational step toward building robots that can navigate the complexities of the physical world with intelligence and dexterity,’ said DeepMind’s Carolina Parada.

Google DeepMind has revealed two new robotics AI models that add agentic capabilities such as multi-step processing to robots.

The models – Gemini Robotics 1.5 and Gemini Robotics-ER 1.5 – were introduced yesterday (25 September) in a blogpost where DeepMind senior director and head of robotics Carolina Parada described their functionalities.

Gemini Robotics 1.5 is a vision-language-action (VLA) model that turns visual information and instructions into motor commands for a robot to perform a task, while Gemini Robotics-ER 1.5 is a vision-language model (VLM) that specialises in understanding physical spaces and can create multi-step plans to complete a task. The VLM can also natively call tools such as Google Search to look up information, or use any third-party user-defined functions.

The Gemini Robotics-ER 1.5 model is now available to developers through the Gemini API in Google AI Studio, while the Gemini Robotics 1.5 model is currently available to select partners.

The two models are designed to work together to ensure a robot can complete an objective with multiple parameters or steps.

The VLM acts as the orchestrator for the robot, giving the VLA model natural-language instructions. The VLA model then uses its vision and language understanding to directly perform the specific actions, adapting to its environment where necessary.
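To make the division of labour concrete, here is a minimal Python sketch of that orchestrator-and-executor pattern. It is purely illustrative and does not use the Gemini API or either DeepMind model: `ToyPlanner`, `ToyExecutor`, `Observation` and `run_task` are hypothetical names invented for this example, and the planner's rules are hard-coded rather than learned.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Observation:
    """Toy stand-in for a camera view: item name -> colour."""
    items: dict[str, str]


class ToyPlanner:
    """Hypothetical stand-in for the orchestrating VLM (not a real model)."""

    def plan(self, goal: str, obs: Observation) -> list[str]:
        # This toy ignores the goal text and always sorts by colour,
        # emitting one natural-language instruction per visible item.
        steps = []
        for name, colour in obs.items.items():
            bin_name = "white bin" if colour == "white" else "colours bin"
            steps.append(f"put the {name} in the {bin_name}")
        return steps


@dataclass
class ToyExecutor:
    """Hypothetical stand-in for the VLA model that would drive the motors."""
    log: list[str] = field(default_factory=list)

    def act(self, instruction: str) -> bool:
        # A real VLA would emit motor commands; here we only record the step.
        self.log.append(instruction)
        return True


def run_task(goal: str, obs: Observation,
             planner: ToyPlanner, executor: ToyExecutor) -> int:
    """Plan once, execute each step in order, return the steps completed."""
    done = 0
    for step in planner.plan(goal, obs):
        if not executor.act(step):
            break  # stop at the first step the executor cannot perform
        done += 1
    return done


if __name__ == "__main__":
    obs = Observation({"shirt": "white", "sock": "red"})
    executor = ToyExecutor()
    print(run_task("sort the laundry by colour", obs, ToyPlanner(), executor))
    print(executor.log)
```

The point of the split is that the planner only ever produces plain-language steps, so the executor can be swapped out without changing how the plan is written.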

“Both of these models are built on the core Gemini family of models and have been fine-tuned with different datasets to specialise in their respective roles,” said Parada. “When combined, they increase the robot’s ability to generalise to longer tasks and more diverse environments.”

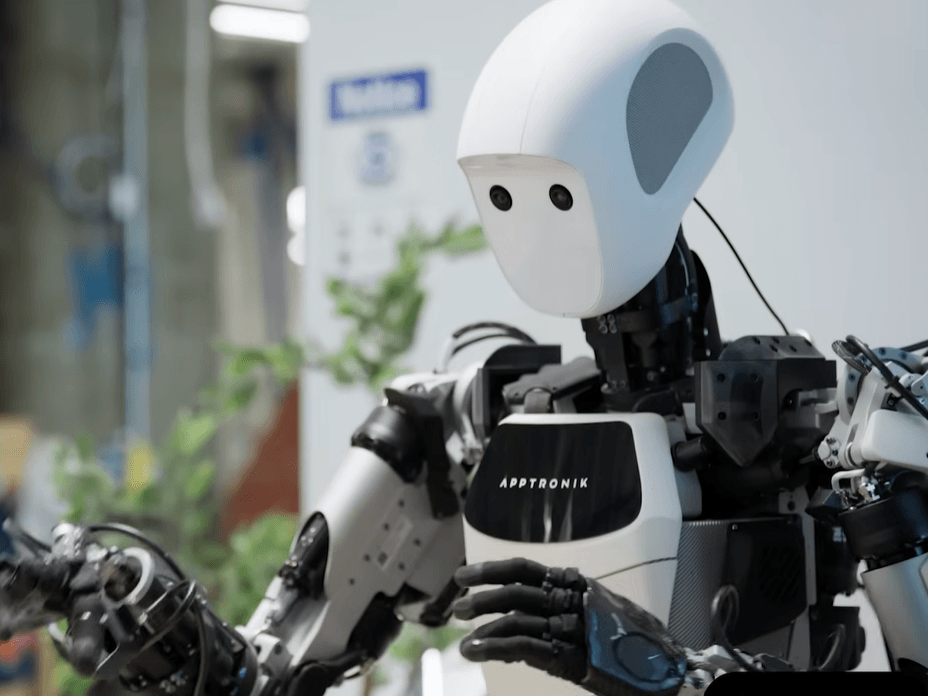

The DeepMind team demonstrated the models’ capabilities in a YouTube video by instructing a robot to sort laundry into different bins according to colour, with the robot separating white clothes from coloured clothes and placing the clothes into the allocated bin.

A major talking point of the VLA model is its ability to learn across different “embodiments”. According to Parada, the model can transfer motions learned from one robot to another, without needing to specialise the model to each new embodiment.

“This breakthrough accelerates learning new behaviours, helping robots become smarter and more useful,” she said.

Parada claimed that the release of Gemini Robotics 1.5 marks an “important milestone” towards artificial general intelligence – also referred to as human-level AI – in the physical world.

“By introducing agentic capabilities, we’re moving beyond models that react to commands and creating systems that can truly reason, plan, actively use tools and generalise,” she said.

“This is a foundational step toward building robots that can navigate the complexities of the physical world with intelligence and dexterity, and ultimately, become more helpful and integrated into our lives.”

Google DeepMind first revealed its robotics projects last year, and has steadily announced new milestones since.

In March, the company first unveiled its Gemini Robotics project. At the time of the announcement, the company wrote about its belief that AI models for robotics need three principal qualities: they have to be general (meaning adaptive), interactive and dexterous.
