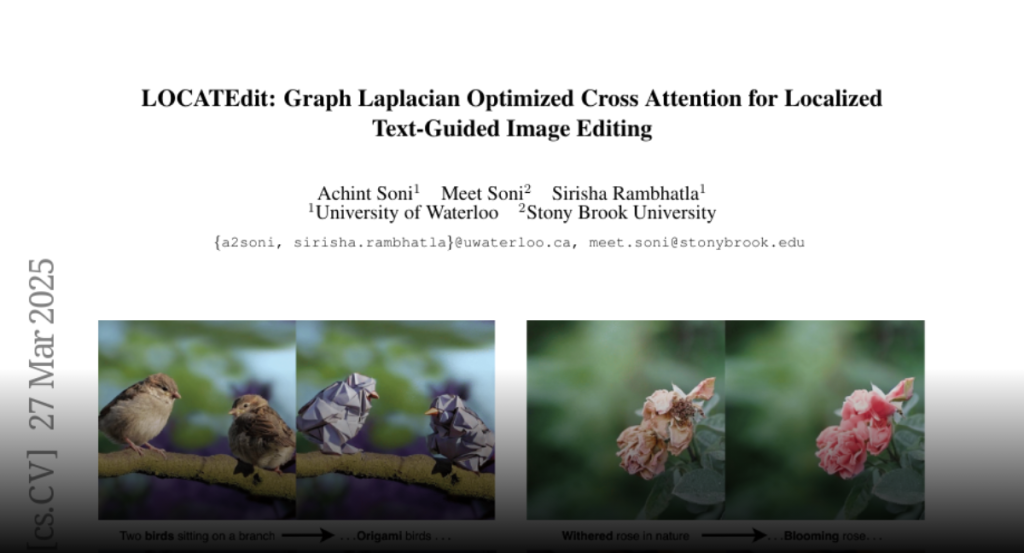

Text-guided image editing aims to modify specific regions of an image according to natural language instructions while preserving the overall structure and background fidelity. Existing methods use masks derived from cross-attention maps produced by diffusion models to identify the target regions for modification. However, because cross-attention mechanisms focus on semantic relevance, they struggle to maintain image integrity. As a result, these methods often lack spatial consistency, leading to editing artifacts and distortions. In this work, we address these limitations and introduce LOCATEdit, which enhances cross-attention maps through a graph-based approach that uses self-attention-derived patch relationships to maintain smooth, coherent attention across image regions, ensuring that alterations are limited to the designated items while retaining the surrounding structure. LOCATEdit consistently and substantially outperforms existing baselines on PIE-Bench, demonstrating its state-of-the-art performance and effectiveness on various editing tasks. Code is available at https://github.com/LOCATEdit/LOCATEdit/
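The graph-based refinement described above can be illustrated with a small sketch. The abstract does not give the exact formulation, so the following is an assumption rather than the released implementation: it treats image patches as graph nodes, uses a symmetrized self-attention matrix as edge weights, and smooths a cross-attention map by solving a Laplacian-regularized least-squares problem. The names `refine_cross_attention`, `attention_to_mask`, `lam`, and `threshold` are hypothetical and chosen only for this illustration.

```python
import numpy as np


def refine_cross_attention(cross_attn, self_attn, lam=1.0):
    """Smooth a per-patch cross-attention map over a patch graph.

    Assumed formulation (not taken from the source): minimize
        ||x - c||^2 + lam * x^T L x
    where c is the raw cross-attention map and L is the graph Laplacian
    built from self-attention affinities. The closed-form minimizer
    solves (I + lam * L) x = c.
    """
    c = np.asarray(cross_attn, dtype=np.float64).ravel()
    S = np.asarray(self_attn, dtype=np.float64)
    n = c.shape[0]
    if S.shape != (n, n):
        raise ValueError("self_attn must have shape (n_patches, n_patches)")

    # Symmetrize the affinities so the Laplacian is symmetric,
    # and drop self-loops, which do not affect smoothness.
    W = 0.5 * (S + S.T)
    np.fill_diagonal(W, 0.0)

    # Combinatorial graph Laplacian: L = D - W.
    L = np.diag(W.sum(axis=1)) - W

    # I + lam * L is positive definite for lam >= 0 and W >= 0,
    # so the linear system has a unique solution.
    return np.linalg.solve(np.eye(n) + lam * L, c)


def attention_to_mask(attn, threshold=0.5):
    """Min-max normalize an attention map and threshold it into a boolean mask."""
    attn = np.asarray(attn, dtype=np.float64)
    lo, hi = attn.min(), attn.max()
    if hi - lo < 1e-12:
        # A flat map carries no localization signal.
        return np.zeros(attn.shape, dtype=bool)
    return (attn - lo) / (hi - lo) >= threshold
```

In this sketch, `lam` trades fidelity to the raw cross-attention map against agreement between patches that self-attention considers related: `lam=0` returns the input unchanged, while larger values spread attention more evenly within strongly connected patch groups. The resulting mask would then restrict edits to the selected patches.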