This post is co-written by Mark Warner, Principal Solutions Architect for Thales, Cyber Security Products.

As generative AI applications make their way into production environments, they integrate with a wider range of business systems that process sensitive customer data. This integration introduces new challenges around protecting personally identifiable information (PII) while maintaining the ability to recover original data when legitimately needed by downstream applications. Consider a financial services company implementing generative AI across different departments. The customer service team needs an AI assistant that can access customer profiles and provide personalized responses that include contact information, for example: “We’ll send your new card to your address at 123 Main Street.” Meanwhile, the fraud analysis team requires the same customer data but must analyze patterns without exposing actual PII, working only with protected representations of sensitive information.

Amazon Bedrock Guardrails helps detect sensitive information, such as PII, in standard format in input prompts or model responses. Sensitive information filters give organizations control over how sensitive data is handled, with options to block requests containing PII or mask the sensitive information with generic placeholders like {NAME} or {EMAIL}. This capability helps organizations comply with data protection regulations while still using the power of large language models (LLMs).

Although masking effectively protects sensitive information, it creates a new challenge: the loss of data reversibility. When guardrails replace sensitive data with generic masks, the original information becomes inaccessible to downstream applications that might need it for legitimate business processes. This limitation can impact workflows where both security and functional data are required.

Tokenization offers a complementary approach to this challenge. Unlike masking, tokenization replaces sensitive data with format-preserving tokens that are mathematically unrelated to the original information but maintain its structure and usability. These tokens can be securely reversed back to their original values when needed by authorized systems, creating a path for secure data flows throughout an organization’s environment.

In this post, we show you how to integrate Amazon Bedrock Guardrails with third-party tokenization services to protect sensitive data while maintaining data reversibility. By combining these technologies, organizations can implement stronger privacy controls while preserving the functionality of their generative AI applications and related systems. The solution described in this post demonstrates how to combine Amazon Bedrock Guardrails with tokenization services from Thales CipherTrust Data Security Platform to create an architecture that protects sensitive data without sacrificing the ability to process that data securely when needed. This approach is particularly valuable for organizations in highly regulated industries that need to balance innovation with compliance requirements.

Amazon Bedrock Guardrails APIs

This section describes the key components and workflow for the integration between Amazon Bedrock Guardrails and a third-party tokenization service.

Amazon Bedrock Guardrails provides two distinct approaches for implementing content safety controls:

Direct integration with model invocation through APIs like InvokeModel and Converse, where guardrails automatically evaluate inputs and outputs as part of the model inference process.

Standalone evaluation through the ApplyGuardrail API, which decouples guardrails assessment from model invocation, allowing evaluation of text against defined policies.

This post uses the ApplyGuardrail API for tokenization integration because it separates content assessment from model invocation, which allows tokenization processing to be inserted between these steps. This separation makes it possible to replace guardrail masks with format-preserving tokens either before model invocation or before the model response is handed over to the target application downstream in the process.

The solution extends the typical ApplyGuardrail API implementation by inserting tokenization processing between guardrail evaluation and model invocation, as follows:

The application calls the ApplyGuardrail API to assess the user input for sensitive information.

If no sensitive information is detected (action = “NONE”), the application proceeds to model invocation via the InvokeModel API.

If sensitive information is detected (the response action is “GUARDRAIL_INTERVENED” and the matched PII entities are reported with action = “ANONYMIZED”):

The application captures the detected PII and its positions.

It calls a tokenization service to convert these entities into format-preserving tokens.

It replaces the generic guardrail masks with these tokens.

The application then invokes the foundation model with the tokenized content.

For model responses:

The application applies guardrails to check the output from the model for sensitive information.

It tokenizes detected PII before passing the response to downstream systems.
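The control flow of the steps above can be sketched in a few lines. This is a minimal illustration rather than the implementation used in this post: the three callables (`apply_guardrail`, `tokenize_detected`, and `invoke_model`) are hypothetical stand-ins for the ApplyGuardrail call, the tokenization step, and the model invocation, and the assessment is assumed to be a dictionary with an `action` key.

```python
from typing import Callable, Dict

# Hypothetical callable signatures, injected so the control flow can be read
# (and tested) without AWS or tokenization-service access.
Guardrail = Callable[[str, str], Dict]   # (text, "INPUT" | "OUTPUT") -> assessment
Tokenizer = Callable[[Dict], str]        # assessment -> text with tokens in place
Model = Callable[[str], str]             # prompt -> model response


def protect_text(text: str, source: str, apply_guardrail: Guardrail,
                 tokenize_detected: Tokenizer) -> str:
    """Return text unchanged if no PII was found, otherwise its tokenized form."""
    assessment = apply_guardrail(text, source)
    if assessment["action"] == "NONE":
        return text
    return tokenize_detected(assessment)


def handle_request(user_input: str, apply_guardrail: Guardrail,
                   tokenize_detected: Tokenizer, invoke_model: Model) -> str:
    """Guard the input, invoke the model, then guard the model output."""
    safe_prompt = protect_text(user_input, "INPUT", apply_guardrail, tokenize_detected)
    model_output = invoke_model(safe_prompt)
    return protect_text(model_output, "OUTPUT", apply_guardrail, tokenize_detected)
```

Passing the steps in as callables keeps the same flow usable for both the input and the output check, and lets each step be swapped for a different guardrail, tokenization provider, or model.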

Solution overview

To illustrate how this workflow delivers value in practice, consider a financial advisory application that helps customers understand their spending patterns and receive personalized financial recommendations. In this example, three distinct application components work together to provide secure, AI-powered financial insights:

Customer gateway service – This trusted frontend orchestrator receives customer queries that often contain sensitive information. For example, a customer might ask: “Hi, this is j.smith@example.com. Based on my last five transactions on acme.com, and my current balance of $2,342.18, should I consider their new credit card offer?”

Financial analysis engine – This AI-powered component analyzes financial patterns and generates recommendations but doesn’t need access to actual customer PII. It works with anonymized or tokenized information.

Response processing service – This trusted service handles the final customer communication, including detokenizing sensitive information before presenting results to the customer.

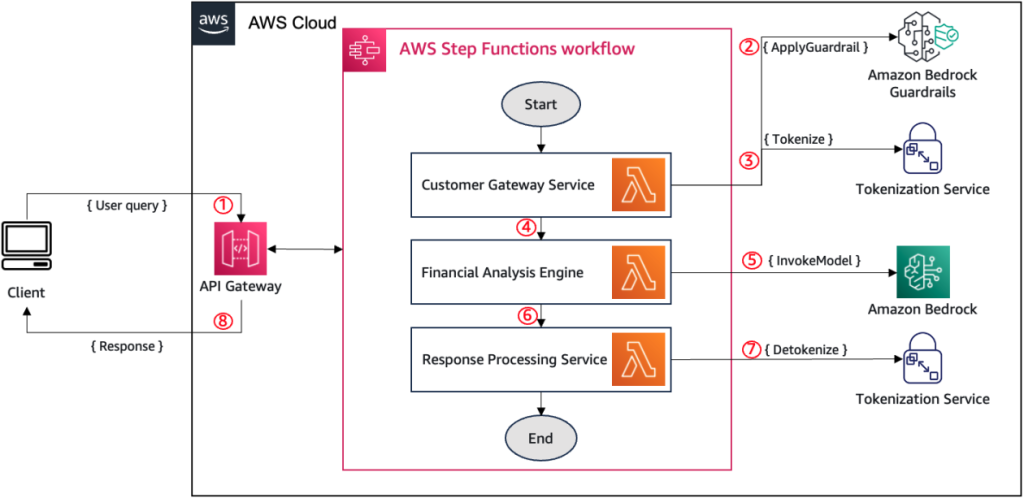

The following diagram illustrates the workflow for integrating Amazon Bedrock Guardrails with tokenization services in this financial advisory application. AWS Step Functions orchestrates the sequential process of PII detection, tokenization, AI model invocation, and detokenization across the three key components (customer gateway service, financial analysis engine, and response processing service) using AWS Lambda functions.

The workflow operates as follows:

The customer gateway service (for this example, through Amazon API Gateway) receives the user input containing sensitive information.

It calls the ApplyGuardrail API to identify PII or other sensitive information that should be anonymized or blocked.

For detected sensitive elements (such as user names or merchant names), it calls the tokenization service to generate format-preserving tokens.

The input with tokenized values is passed to the financial analysis engine for processing. (For example, “Hi, this is [[TOKEN_123]]. Based on my last five transactions on [[TOKEN_456]] and my current balance of $2,342.18, should I consider their new credit card offer?”)

The financial analysis engine invokes an LLM on Amazon Bedrock to generate financial advice using the tokenized data.

The model response, potentially containing tokenized values, is sent to the response processing service.

This service calls the tokenization service to detokenize the tokens, restoring the original sensitive values.

The final, detokenized response is delivered to the customer.

This architecture maintains data confidentiality throughout the processing flow while preserving the information’s utility. The financial analysis engine works with structurally valid but cryptographically protected data, allowing it to generate meaningful recommendations without exposing sensitive customer information. Meanwhile, the trusted components at the entry and exit points of the workflow can access the actual data when necessary, creating a secure end-to-end solution.

In the following sections, we provide a detailed walkthrough of implementing the integration between Amazon Bedrock Guardrails and tokenization services.

Prerequisites

To implement the solution described in this post, you must have the following components configured in your environment:

An AWS account with Amazon Bedrock enabled in your target AWS Region.

Appropriate AWS Identity and Access Management (IAM) permissions configured following least privilege principles with specific actions enabled: bedrock:CreateGuardrail, bedrock:ApplyGuardrail, and bedrock:InvokeModel.

For AWS Organizations, verify Amazon Bedrock access is permitted by service control policies.

A Python 3.7+ environment with the boto3 library installed. For information about installing the boto3 library, refer to AWS SDK for Python (Boto3).

AWS credentials configured for programmatic access using the AWS Command Line Interface (AWS CLI). For more details, refer to Configuring settings for the AWS CLI.
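The IAM permissions listed above could be expressed as a policy document along the following lines. This is a sketch, not a policy taken from this post: the Region and account ID are placeholders, the statement IDs are illustrative, and the resource ARNs should be narrowed to the specific guardrail and model you use. Note that IAM actions for Amazon Bedrock use the `bedrock:` prefix, including model invocation.

```python
import json

REGION = "us-east-1"          # placeholder: your target AWS Region
ACCOUNT_ID = "111122223333"   # placeholder: your AWS account ID


def build_policy(region: str, account_id: str) -> dict:
    """Build a least-privilege style policy for the actions used in this post."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "GuardrailAccess",
                "Effect": "Allow",
                "Action": ["bedrock:CreateGuardrail", "bedrock:ApplyGuardrail"],
                # Wildcard because the guardrail ID is not known before creation
                "Resource": f"arn:aws:bedrock:{region}:{account_id}:guardrail/*",
            },
            {
                "Sid": "ModelInvocation",
                "Effect": "Allow",
                "Action": ["bedrock:InvokeModel"],
                # Narrow this to the model ID you actually invoke
                "Resource": f"arn:aws:bedrock:{region}::foundation-model/*",
            },
        ],
    }


policy_json = json.dumps(build_policy(REGION, ACCOUNT_ID), indent=2)
```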

This implementation requires a deployed tokenization service accessible through REST API endpoints. Although this walkthrough demonstrates integration with Thales CipherTrust, the pattern adapts to tokenization providers offering protect and unprotect API operations. Make sure network connectivity exists between your application environment and both AWS APIs and your tokenization service endpoints, along with valid authentication credentials for accessing your chosen tokenization service. For information about setting up Thales CipherTrust specifically, refer to How Thales Enables PCI DSS Compliance with a Tokenization Solution on AWS.

Configure Amazon Bedrock Guardrails

Configure Amazon Bedrock Guardrails for PII detection and masking through the Amazon Bedrock console or programmatically using the AWS SDK. Sensitive information filter policies can anonymize or redact information from model requests or responses:
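A programmatic configuration could look like the following sketch, which uses the boto3 `create_guardrail` operation with a sensitive information policy set to anonymize selected PII types. The guardrail name, description, blocked messages, and the chosen PII types are assumptions for illustration; adapt them to your own policy.

```python
from typing import Dict, List


def build_guardrail_config(name: str, pii_types: List[str]) -> Dict:
    """Build create_guardrail arguments that mask (anonymize) the given PII types."""
    return {
        "name": name,
        "description": "Masks PII so it can be replaced with reversible tokens",
        "sensitiveInformationPolicyConfig": {
            "piiEntitiesConfig": [
                {"type": pii_type, "action": "ANONYMIZE"} for pii_type in pii_types
            ]
        },
        # Both messages are required by the API, even if nothing is blocked
        "blockedInputMessaging": "Sorry, this request cannot be processed.",
        "blockedOutputsMessaging": "Sorry, this response cannot be returned.",
    }


def create_pii_guardrail(config: Dict, region: str = "us-east-1"):
    """Create the guardrail and return its ID and version (requires AWS access)."""
    import boto3  # imported here so the config builder works without boto3

    client = boto3.client("bedrock", region_name=region)
    response = client.create_guardrail(**config)
    return response["guardrailId"], response["version"]


# Illustrative name and PII types; EMAIL and URL match the example query in this post
config = build_guardrail_config("pii-tokenization-guardrail", ["EMAIL", "URL", "NAME"])
```

Calling `create_pii_guardrail(config)` with valid credentials returns the identifier and version that the ApplyGuardrail API expects later in the workflow.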

Integrate the tokenization workflow

This section implements the tokenization workflow by first detecting PII entities with the ApplyGuardrail API, then replacing the generic masks with format-preserving tokens from your tokenization service.

Apply guardrails to detect PII entities

Use the ApplyGuardrail API to validate input text from the user and detect PII entities:
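The call can be sketched as follows with the boto3 `apply_guardrail` operation of the `bedrock-runtime` client. The function names are illustrative, and the client is passed in as a parameter so the helper does not depend on how you create it (for example, `boto3.client("bedrock-runtime")`).

```python
from typing import Any, Dict


def detect_pii(client: Any, guardrail_id: str, guardrail_version: str,
               text: str, source: str = "INPUT") -> Dict:
    """Evaluate text against the guardrail without invoking a model."""
    return client.apply_guardrail(
        guardrailIdentifier=guardrail_id,
        guardrailVersion=guardrail_version,
        source=source,  # "INPUT" for user prompts, "OUTPUT" for model responses
        content=[{"text": {"text": text}}],
    )


def masked_text(response: Dict, original: str) -> str:
    """Return the guardrail output (with masks) or the original text if untouched."""
    if response.get("action") == "NONE":
        return original
    outputs = response.get("outputs", [])
    # If the guardrail blocked the content, this holds the blocked message instead
    return outputs[0]["text"] if outputs else original
```

When the guardrail anonymizes content, the returned output text carries generic masks such as {EMAIL}, and the `assessments` field of the response lists the matched entities.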

Invoke tokenization service

The response from the ApplyGuardrail API includes the list of PII entities matching the sensitive information policy. Parse those entities and invoke the tokenization service to generate the tokens.

The following example code uses the Thales CipherTrust tokenization service:
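As a provider-agnostic sketch of this step, the following code parses the PII entities from an ApplyGuardrail response and sends each one to a protect operation. The endpoint URL, the request payload (`{"data": ...}`), and the response field (`token`) are assumptions made for illustration, not the documented Thales CipherTrust API; refer to your tokenization provider's documentation for the actual request format and authentication scheme.

```python
import json
import urllib.request
from typing import Callable, Dict, List


def extract_pii_entities(guardrail_response: Dict) -> List[Dict]:
    """Collect the anonymized PII matches reported in the guardrail assessments."""
    entities = []
    for assessment in guardrail_response.get("assessments", []):
        policy = assessment.get("sensitiveInformationPolicy", {})
        for entity in policy.get("piiEntities", []):
            if entity.get("action") == "ANONYMIZED" and entity.get("match"):
                entities.append({"type": entity["type"], "match": entity["match"]})
    return entities


def post_json(url: str, payload: Dict, headers: Dict) -> Dict:
    """POST a JSON payload and return the decoded JSON reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", **headers},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.loads(response.read().decode("utf-8"))


def tokenize_entities(entities: List[Dict], protect_url: str, headers: Dict,
                      post: Callable[[str, Dict, Dict], Dict] = post_json) -> List[Dict]:
    """Attach a token to each entity by calling the protect operation."""
    tokenized = []
    for entity in entities:
        # ASSUMPTION: hypothetical payload and reply shape, not a real provider API
        reply = post(protect_url, {"data": entity["match"]}, headers)
        tokenized.append({**entity, "token": reply["token"]})
    return tokenized
```

The `post` parameter defaults to a standard-library HTTP call and can be replaced with your provider's SDK or a stub for testing.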

Replace guardrail masks with tokens

Next, substitute the generic guardrail masks with the tokens generated by the Thales CipherTrust tokenization service. This enables downstream applications to work with structurally valid data while maintaining security and reversibility.

This process transforms user inputs containing information that matches the sensitive information policy configured in Amazon Bedrock Guardrails into unique, reversible tokenized versions.
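The substitution itself can be sketched as a simple string replacement. This minimal example assumes that masks have the form {TYPE} and that entities are listed in the same order in which their masks appear in the guardrail output; the token values shown are made up for illustration.

```python
from typing import Dict, List


def replace_masks_with_tokens(masked_text: str, tokenized_entities: List[Dict]) -> str:
    """Replace each generic mask such as {EMAIL} with the token for that entity."""
    result = masked_text
    for entity in tokenized_entities:
        mask = "{" + entity["type"] + "}"
        # Replace only the first remaining mask of this type, so repeated
        # entity types are filled in order (ASSUMPTION: entity order = text order)
        result = result.replace(mask, entity["token"], 1)
    return result


# Illustrative values only; real tokens come from the tokenization service
example_entities = [
    {"type": "EMAIL", "match": "j.smith@example.com", "token": "q.wzrty@hbvnmkp.xlo"},
    {"type": "URL", "match": "acme.com", "token": "pfke.jtr"},
]
sanitized = replace_masks_with_tokens("Hi, this is {EMAIL}. I shop on {URL}.", example_entities)
```

If your guardrail output can contain several entities of the same type in a different order than the assessment list, replacing the matched values in the original text instead of the masks is a safer alternative.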

The following example input contains PII elements:

The following is an example of the sanitized user input:

Downstream application processing

The sanitized input is ready to be used by generative AI applications, including model invocations on Amazon Bedrock. In response to the tokenized input, an LLM invoked by the financial analysis engine would produce a relevant analysis that maintains the secure token format:
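A model invocation with tokenized input could be sketched as follows using the Converse API of the `bedrock-runtime` client. The system prompt, the inference settings, and the function name are assumptions for illustration; the system prompt asks the model to copy tokens verbatim so they can still be detokenized later.

```python
from typing import Any

# Illustrative system prompt (an assumption, not taken from this post)
SYSTEM_PROMPT = (
    "You are a financial analysis assistant. Some values in the user message "
    "are opaque tokens that stand in for sensitive data. Copy them verbatim "
    "and never try to guess the original values."
)


def analyze(client: Any, model_id: str, tokenized_input: str) -> str:
    """Send the tokenized prompt to a model on Amazon Bedrock and return its text."""
    response = client.converse(
        modelId=model_id,
        system=[{"text": SYSTEM_PROMPT}],
        messages=[{"role": "user", "content": [{"text": tokenized_input}]}],
        inferenceConfig={"maxTokens": 512, "temperature": 0.2},
    )
    return response["output"]["message"]["content"][0]["text"]
```

The client is passed in (for example, `boto3.client("bedrock-runtime")`), and `model_id` is whichever model identifier is enabled in your account.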

When authorized systems need to recover original values, tokens are detokenized. With Thales CipherTrust, this is accomplished using the Detokenize API, which requires the same parameters as in the previous tokenize action. This completes the secure data flow while preserving the ability to recover original information when needed.
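The detokenization step in the response processing service can be sketched independently of any provider. The example below assumes that the list of issued tokens travels with the request (for instance, in the workflow state) and that `unprotect` is a hypothetical callable wrapping your tokenization service's detokenize operation.

```python
from typing import Callable, List


def detokenize_text(text: str, tokens: List[str],
                    unprotect: Callable[[str], str]) -> str:
    """Replace every known token found in the text with its original value."""
    restored = text
    for token in tokens:
        if token in restored:
            # Call the service only for tokens the model actually echoed back
            restored = restored.replace(token, unprotect(token))
    return restored


# In-memory stand-in for the tokenization service, for illustration only
vault = {"q.wzrty@hbvnmkp.xlo": "j.smith@example.com", "pfke.jtr": "acme.com"}
final = detokenize_text(
    "Thanks q.wzrty@hbvnmkp.xlo, the pfke.jtr offer looks reasonable.",
    list(vault),
    vault.__getitem__,
)
```

Only trusted components, such as the response processing service in this example, should be given credentials that allow the unprotect operation.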

Clean up

As you follow the approach described in this post, you will create new AWS resources in your account. To avoid incurring additional charges, delete these resources when you no longer need them.

To clean up your resources, complete the following steps:

Delete the guardrails you created. For instructions, refer to Delete your guardrail.

If you implemented the tokenization workflow using Lambda, API Gateway, or Step Functions as described in this post, remove the resources you created.

This post assumes a tokenization solution is already available in your account. If you deployed a third-party tokenization solution (such as Thales CipherTrust) to test this implementation, refer to that solution’s documentation for instructions to properly decommission these resources and stop incurring charges.

Conclusion

This post demonstrated how to combine Amazon Bedrock Guardrails with tokenization to enhance handling of sensitive information in generative AI workflows. By integrating these technologies, organizations can protect PII during processing while maintaining data utility and reversibility for authorized downstream applications.

The implementation illustrated uses Thales CipherTrust Data Security Platform for tokenization, but the architecture supports many tokenization solutions. To learn more about a serverless approach to building custom tokenization capabilities, refer to Building a serverless tokenization solution to mask sensitive data.

This solution provides a practical framework for builders to use the full potential of generative AI with appropriate safeguards. By combining the content safety mechanisms of Amazon Bedrock Guardrails with the data reversibility of tokenization, you can implement responsible AI workflows that align with your application requirements and organizational policies while preserving the functionality needed for downstream systems.

To learn more about implementing responsible AI practices on AWS, see Transform responsible AI from theory into practice.

About the Authors

Nizar Kheir is a Senior Solutions Architect at AWS with more than 15 years of experience spanning various industry segments. He currently works with public sector customers in France and across EMEA to help them modernize their IT infrastructure and foster innovation by harnessing the power of the AWS Cloud.

Mark Warner is a Principal Solutions Architect for Thales, Cyber Security Products division. He works with companies in various industries such as finance, healthcare, and insurance to improve their security architectures. His focus is assisting organizations with reducing risk, increasing compliance, and streamlining data security operations to reduce the probability of a breach.
