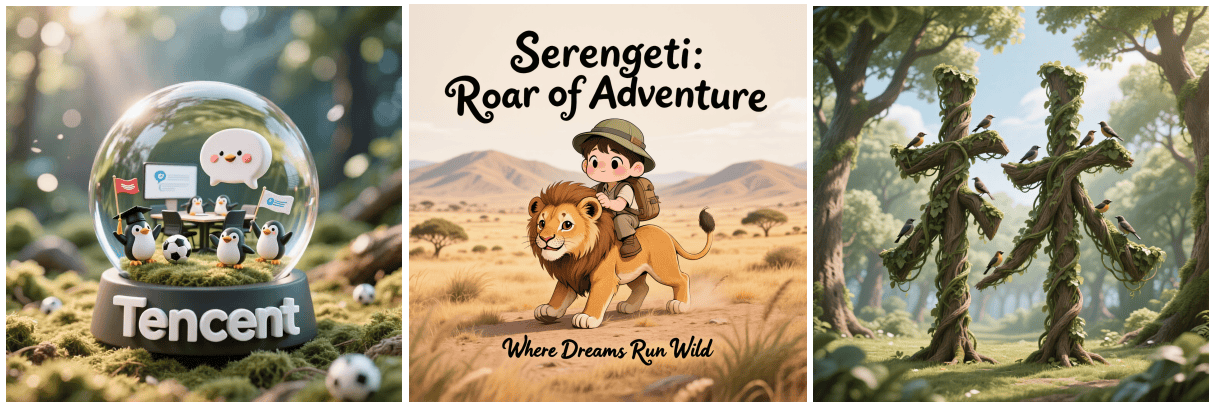

On the night of September 9, Tencent released and open-sourced its latest image model, Hunyuan Image 2.1. The model offers industry-leading capabilities and natively generates 2K high-definition images.

After its open-source release, Hunyuan Image 2.1 quickly climbed the Hugging Face trending model chart to third place globally. Of the top eight models on the list, three belong to Tencent's Hunyuan model family.

At the same time, the Tencent Hunyuan team revealed that they will soon release a native multimodal image generation model.

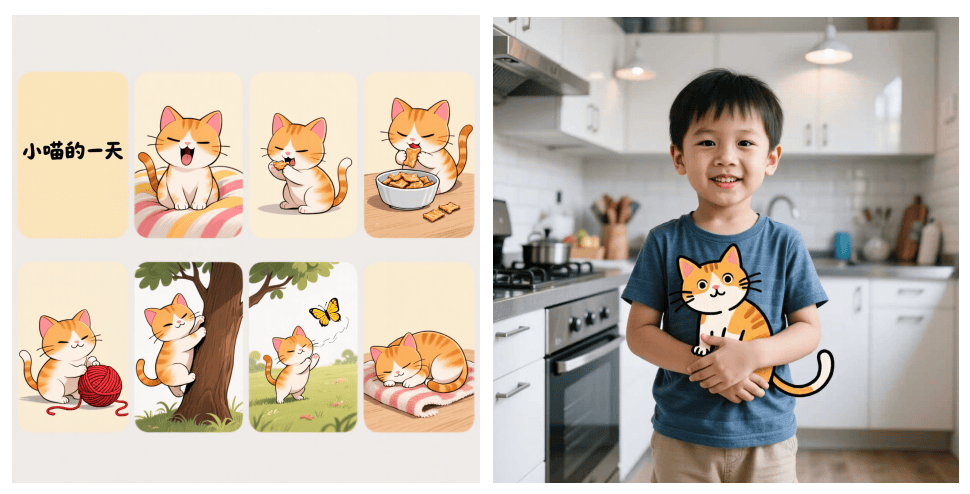

Hunyuan Image 2.1 is a comprehensive upgrade of the 2.0 architecture that places greater emphasis on balancing generation quality and performance. The new version not only accepts native prompts in both Chinese and English but also generates high-quality images from semantically complex prompts in either language. The overall aesthetic quality of generated images and the range of applicable scenarios have also improved significantly.

This means that designers, illustrators, and other visual creators can more efficiently and conveniently translate their ideas into visuals. Whether generating high-fidelity creative illustrations, creating posters and packaging designs with Chinese and English slogans, or producing complex four-panel comics and graphic novels, Hunyuan Image 2.1 can provide creators with fast, high-quality support.

Hunyuan Image 2.1 is a fully open-source base model that not only achieves industry-leading generation results but can also flexibly adapt to the diverse derivative needs of the community. Currently, the model weights and code for Hunyuan Image 2.1 have been officially released in open-source communities such as Hugging Face and GitHub, allowing both individual and enterprise developers to conduct research or develop various derivative models and plugins based on this foundational model.

Thanks to a larger-scale image-text alignment dataset, Hunyuan Image 2.1 has significantly improved its complex semantic understanding and cross-domain generalization. It supports prompts of up to 1,000 tokens, allowing precise generation of scene details, character expressions, and actions, and enabling separate description and control of multiple objects. Hunyuan Image 2.1 can also finely control text within images, integrating textual information naturally with the visuals.

(Highlight 1: Hunyuan Image 2.1 demonstrates strong understanding of complex semantics, supporting separate description and precise generation of multiple subjects.)

(Highlight 2: More stable control over text and scene details in images.)

(Highlight 3: Supports a rich variety of styles, such as realistic, comic, and vinyl figures, while possessing high aesthetic quality.)

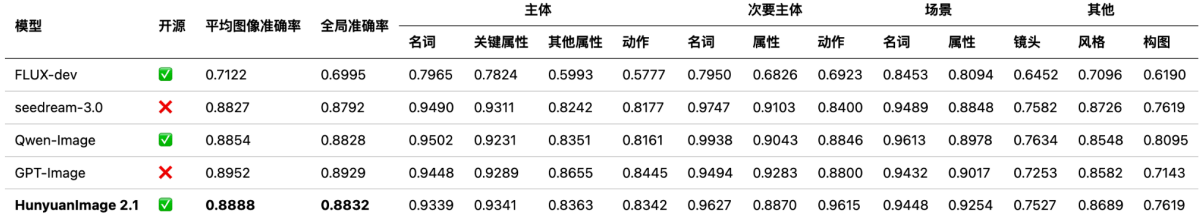

Hunyuan Image 2.1 reaches state-of-the-art (SOTA) performance among open-source models.

According to SSAE (Structured Semantic Alignment Evaluation) results, Hunyuan Image 2.1 currently achieves the best semantic alignment among open-source models and comes very close to the closed-source commercial model GPT-Image.

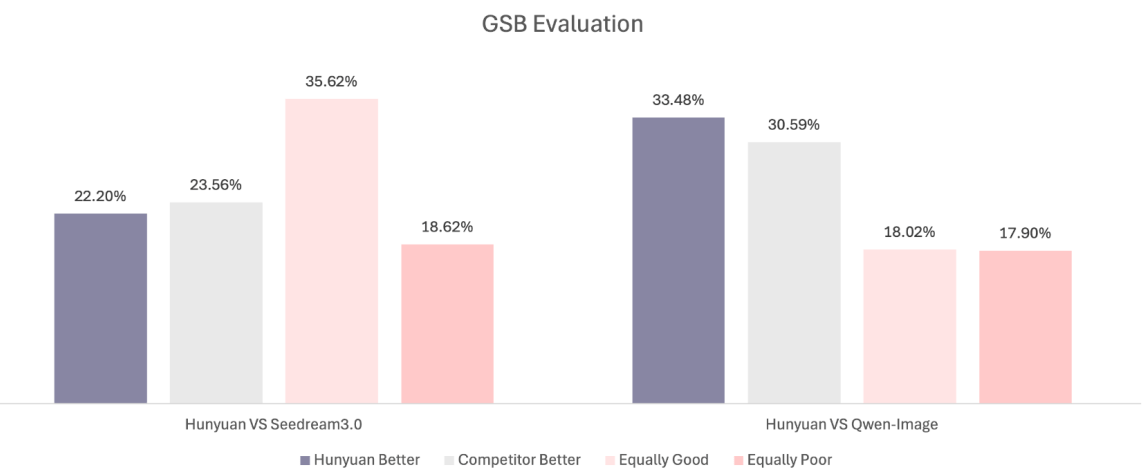

Additionally, GSB (Good Same Bad) evaluation results indicate that Hunyuan Image 2.1’s image generation quality is comparable to that of the closed-source commercial model Seedream 3.0, while being slightly superior to similar open-source models like Qwen-Image.
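GSB evaluations collect pairwise human judgments: for each prompt, raters mark whether model A's image is better (Good), equal (Same), or worse (Bad) than model B's. The article does not say how the counts are summarized; one common convention, assumed here, is the net preference score (G − B) / (G + S + B), where a positive value favors model A. The counts below are purely illustrative, not Tencent's data.

```python
def gsb_score(good: int, same: int, bad: int) -> float:
    """Net preference of model A over model B (assumed convention: (G - B) / total)."""
    total = good + same + bad
    if total == 0:
        raise ValueError("at least one comparison is required")
    return (good - bad) / total

# Hypothetical counts from 500 side-by-side comparisons.
score = gsb_score(good=120, same=250, bad=130)
print(f"{score:+.2%}")  # -2.00%: model A is slightly behind model B
```

A score near zero with a large "Same" share is what "comparable" quality typically looks like under this convention.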

Hunyuan Image 2.1 is trained not only on a vast amount of data but also on structured, variable-length, and content-diverse captions, which greatly improves its understanding of text descriptions. The captioning model incorporates OCR and IP RAG expert models, improving its handling of complex text recognition and world knowledge.
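The article does not describe the caption format, so the following is only a hypothetical sketch of what "structured, variable-length" captions could mean: one structured record per image, rendered at several lengths so the model sees both terse and detailed descriptions. The field names, the `render` levels, and the way OCR and retrieval results are folded in are all assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class StructuredCaption:
    """Hypothetical structured caption record for one training image."""
    subject: str
    scene: str
    style: str
    ocr_text: list[str] = field(default_factory=list)  # text an OCR expert found in the image
    entities: list[str] = field(default_factory=list)  # named entities from a retrieval (RAG) step

    def render(self, level: str) -> str:
        """Render the record as a short, medium, or long caption."""
        if level not in ("short", "medium", "long"):
            raise ValueError(f"unknown level: {level}")
        parts = [self.subject]
        if level in ("medium", "long"):
            parts.append(f"set in {self.scene}")
            parts.append(f"{self.style} style")
        if level == "long":
            if self.entities:
                parts.append("featuring " + ", ".join(self.entities))
            if self.ocr_text:
                quoted = ", ".join(f'"{t}"' for t in self.ocr_text)
                parts.append(f"with the text {quoted}")
        return ", ".join(parts)


cap = StructuredCaption("a red fox", "a snowy forest", "watercolor", ocr_text=["WINTER"])
for level in ("short", "medium", "long"):
    print(level, "->", cap.render(level))
```

Sampling among the rendered lengths during training is one plausible way to make a model robust to both brief and very long prompts.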

To significantly reduce computational load and improve training and inference efficiency, the model uses a VAE with an ultra-high 32× compression ratio, and applies DINOv2 feature alignment and a REPA (representation alignment) loss to make training easier. As a result, the model can efficiently generate native 2K images.
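To see why a 32× VAE matters at 2K, it helps to count the tokens the diffusion transformer must process. The sketch below assumes "32×" means spatial downsampling per side, a 2048 × 2048 output, and a patch size of 1 on the 32× latent; for comparison it uses a typical 8× VAE with 2 × 2 patches. These settings are assumptions, not confirmed details of Hunyuan Image 2.1.

```python
def latent_tokens(height: int, width: int, downsample: int, patch: int = 1) -> int:
    """Number of DiT tokens for an image, given VAE downsampling and patch size."""
    step = downsample * patch
    if height % step or width % step:
        raise ValueError("image size must be divisible by downsample * patch")
    return (height // step) * (width // step)


tokens_f32 = latent_tokens(2048, 2048, downsample=32)         # 64 x 64 latent grid
tokens_f8 = latent_tokens(2048, 2048, downsample=8, patch=2)  # 128 x 128 token grid
print(tokens_f32, tokens_f8, tokens_f8 / tokens_f32)  # 4096 16384 4.0
print((tokens_f8 / tokens_f32) ** 2)  # 16.0: rough ratio of self-attention cost
```

Because self-attention cost grows roughly with the square of the sequence length, 4× fewer tokens translates into roughly 16× less attention compute under these assumptions.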

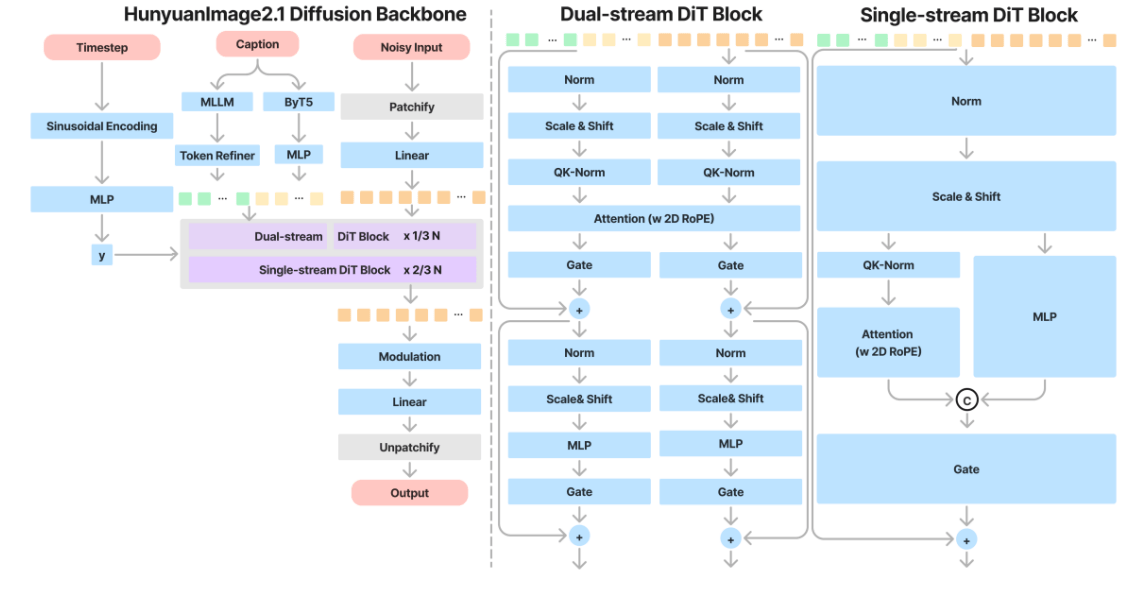

For text encoding, Hunyuan Image 2.1 uses two text encoders: an MLLM module that further strengthens image-text alignment, and a ByT5 model that improves the rendering of text within images. The backbone is a single- and dual-stream DiT with 17 billion parameters.
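ByT5 operates directly on UTF-8 bytes rather than on a learned subword vocabulary, which is a plausible reason it helps with rendering text: every character, including Chinese, is spelled out byte by byte and is never out of vocabulary. The hand-written function below mirrors ByT5's scheme as we understand it (ids 0, 1, 2 reserved for pad, eos, and unk, so each byte `b` maps to `b + 3`); it is an illustration, not the Hugging Face tokenizer API.

```python
def byt5_style_ids(text: str, add_eos: bool = True) -> list[int]:
    """Byte-level token ids in the style of ByT5 (assumed offset of 3 for special tokens)."""
    ids = [b + 3 for b in text.encode("utf-8")]  # one id per UTF-8 byte
    if add_eos:
        ids.append(1)  # assumed eos id
    return ids


print(byt5_style_ids("Hi"))    # [75, 108, 1]
print(byt5_style_ids("腾讯"))  # 6 byte ids (3 per CJK character) plus eos
```

The trade-off is sequence length: each common CJK character costs three tokens, which is one reason a byte-level encoder is paired with, rather than replacing, a semantic encoder such as an MLLM.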

Hunyuan Image 2.1 also solves the training-stability problems of MeanFlow (mean-flow) models at the 17-billion-parameter scale, cutting the number of inference steps from 100 to 8. This greatly speeds up inference while preserving the model's original quality.
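As we understand the MeanFlow idea, the network predicts the average velocity u(z_t, r, t) over an interval [r, t] instead of the instantaneous velocity v(z_t, t), so each sampling step is z_r = z_t − (t − r)·u(z_t, r, t). The toy below uses a one-dimensional ODE dz/dt = z, whose average velocity has a closed form, to show why this allows far fewer steps than plain Euler integration. The ODE and both velocity functions are stand-ins, not the Hunyuan model.

```python
import math


def instantaneous_velocity(z: float, t: float) -> float:
    # Toy ODE dz/dt = z (a stand-in for a learned velocity field).
    return z


def average_velocity(z_t: float, r: float, t: float) -> float:
    # Exact mean of v over [r, t] for the toy ODE, using z_r = z_t * exp(r - t).
    return z_t * (1.0 - math.exp(r - t)) / (t - r)


def euler_sample(z1: float, steps: int) -> float:
    """Integrate from t=1 to t=0 with instantaneous velocity (Euler)."""
    z, dt = z1, 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt
        z = z - dt * instantaneous_velocity(z, t)
    return z


def meanflow_sample(z1: float, steps: int) -> float:
    """Integrate from t=1 to t=0 with average-velocity (MeanFlow-style) updates."""
    z, dt = z1, 1.0 / steps
    for i in range(steps):
        t = 1.0 - i * dt
        r = t - dt
        z = z - (t - r) * average_velocity(z, r, t)
    return z


exact = math.exp(-1.0)  # true z(0) when z(1) = 1
print(abs(euler_sample(1.0, 8) - exact))     # noticeable error with 8 Euler steps
print(abs(euler_sample(1.0, 100) - exact))   # smaller error, but needs 100 steps
print(abs(meanflow_sample(1.0, 8) - exact))  # essentially exact with 8 steps
```

In a real model the average velocity is learned rather than exact, so a few steps only approximate the full trajectory; the toy simply shows why predicting interval averages removes most of the discretization error that forces many-step sampling.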

The Hunyuan prompt-rewriting model (PromptEnhancer), open-sourced at the same time, is the industry's first systematic, industrial-grade Chinese-English rewriting model. It restructures and optimizes user prompts to enrich their visual expression, greatly improving the semantic fidelity of images generated from the rewritten prompts.

Tencent Hunyuan continues to deepen its efforts in the field of image generation, having previously released the first open-source Chinese native DiT architecture large image model—Hunyuan DiT, as well as the industry’s first commercial-grade real-time image model—Hunyuan Image 2.0. The newly launched native 2K model, Hunyuan Image 2.1, achieves a better balance between quality and performance, meeting various needs of users and enterprises in diverse visual scenarios.

At the same time, Tencent Hunyuan is firmly embracing open source, continuously releasing language models of various sizes, comprehensive multimodal generation capabilities, and toolset plugins for images, videos, and 3D, providing open-source foundations that approach commercial model performance. The total number of derivative models for images and videos has reached 3,000, and community downloads of the Hunyuan 3D series models have exceeded 2.3 million, making it the most popular open-source 3D model globally.