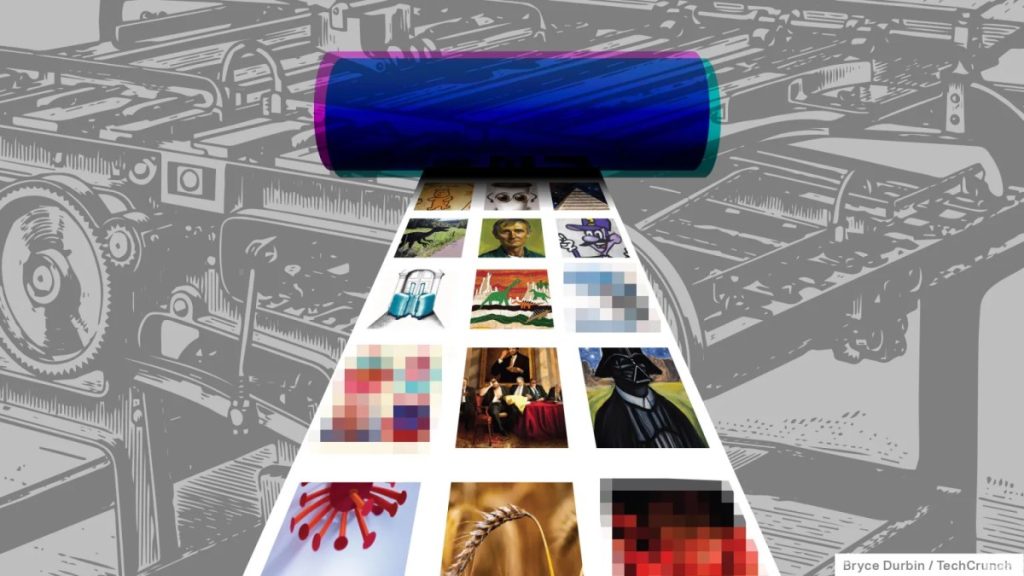

Following a string of controversies stemming from technical hiccups and licensing changes, AI startup Stability AI has announced its latest family of image-generation models.

The new Stable Diffusion 3.5 series is more customizable and versatile than Stability’s previous-generation tech, the company claims — as well as more performant. There are three models in total:

- Stable Diffusion 3.5 Large: With 8 billion parameters, it’s the most powerful model, capable of generating images at resolutions up to 1 megapixel. (Parameters roughly correspond to a model’s problem-solving skills, and models with more parameters generally perform better than those with fewer.)
- Stable Diffusion 3.5 Large Turbo: A distilled version of Stable Diffusion 3.5 Large that generates images more quickly, at the cost of some quality.
- Stable Diffusion 3.5 Medium: A model optimized to run on edge devices like smartphones and laptops, capable of generating images at resolutions from 0.25 to 2 megapixels.
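For a rough sense of what those megapixel figures mean in pixel dimensions, here is a minimal sketch. It assumes 1 megapixel equals 1,000,000 pixels and a square image; neither assumption comes from Stability, and the helper name `square_side` is purely illustrative.

```python
import math

# Assumption: 1 megapixel = 1,000,000 pixels (not a figure from Stability).
MEGAPIXEL = 1_000_000


def square_side(megapixels: float) -> int:
    """Side length in pixels (rounded down) of a square image with this pixel count."""
    return math.isqrt(int(megapixels * MEGAPIXEL))


# The resolutions mentioned for the 3.5 series: 0.25, 1 and 2 megapixels.
for mp in (0.25, 1.0, 2.0):
    side = square_side(mp)
    print(f"{mp} MP is roughly {side} x {side} pixels")
```

Non-square aspect ratios with the same total pixel count would give different width and height values.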

While Stable Diffusion 3.5 Large and 3.5 Large Turbo are available today, 3.5 Medium won’t be released until October 29.

Stability says that the Stable Diffusion 3.5 models should generate more “diverse” outputs — that is to say, images depicting people with different skin tones and features — without the need for “extensive” prompting.

“During training, each image is captioned with multiple versions of prompts, with shorter prompts prioritized,” Hanno Basse, Stability’s chief technology officer, told TechCrunch in an interview. “This ensures a broader and more diverse distribution of image concepts for any given text description. Like most generative AI companies, we train on a wide variety of data, including filtered publicly available datasets and synthetic data.”

Some companies have kludgily built these sorts of “diversifying” features into image generators in the past, prompting outcries on social media. An older version of Google’s Gemini chatbot, for example, would show an anachronistic group of figures for historical prompts such as “a Roman legion” or “U.S. senators.” Google was forced to pause image generation of people for nearly six months while it developed a fix.

With any luck, Stability’s approach will be more thoughtful than others. We can’t give impressions, unfortunately, as Stability didn’t provide early access.

Stability’s previous flagship image generator, Stable Diffusion 3 Medium, was roundly criticized for its peculiar artifacts and poor adherence to prompts. The company warns that Stable Diffusion 3.5 models might suffer from similar prompting errors; it blames engineering and architectural trade-offs. But Stability also asserts the models are more robust than their predecessors in generating images across a range of different styles, including 3D art.

“Greater variation in outputs from the same prompt with different seeds may occur, which is intentional as it helps preserve a broader knowledge-base and diverse styles in the base models,” Stability wrote in a blog post shared with TechCrunch. “However, as a result, prompts lacking specificity might lead to increased uncertainty in the output, and the aesthetic level may vary.”

One thing that hasn’t changed with the new models is Stability’s licenses.

As with previous Stability models, models in the Stable Diffusion 3.5 series are free to use for “non-commercial” purposes, including research. Businesses with less than $1 million in annual revenue can also commercialize them at no cost. Organizations with more than $1 million in revenue, however, have to contract with Stability for an enterprise license.
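The tiers described above can be sketched as a small decision function. This is an illustration of the terms as reported here, not Stability's actual license logic or legal guidance; the names `LicenseTier` and `license_tier` are hypothetical, and revenue of exactly $1 million is flagged as unspecified because the description above does not cover it.

```python
from enum import Enum

# Threshold as reported in the article.
REVENUE_THRESHOLD_USD = 1_000_000


class LicenseTier(Enum):
    FREE_NON_COMMERCIAL = "free for non-commercial use"
    FREE_COMMERCIAL = "free commercial use"
    ENTERPRISE = "enterprise license required"
    UNSPECIFIED = "not covered by the reported terms"


def license_tier(commercial_use: bool, annual_revenue_usd: float) -> LicenseTier:
    """Map a use case onto the tiers as reported (hypothetical helper)."""
    if not commercial_use:
        return LicenseTier.FREE_NON_COMMERCIAL
    if annual_revenue_usd < REVENUE_THRESHOLD_USD:
        return LicenseTier.FREE_COMMERCIAL
    if annual_revenue_usd > REVENUE_THRESHOLD_USD:
        return LicenseTier.ENTERPRISE
    # Exactly $1 million: the reported terms say "less than" and "more than" only.
    return LicenseTier.UNSPECIFIED


print(license_tier(commercial_use=True, annual_revenue_usd=250_000).value)
print(license_tier(commercial_use=True, annual_revenue_usd=5_000_000).value)
```

Anyone relying on these terms should check the community license text itself rather than this sketch.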

Stability caused a stir this summer over its restrictive fine-tuning terms, which gave (or at least appeared to give) the company the right to extract fees for models trained on images from its image generators. In response to the blowback, the company adjusted its terms to allow for more liberal commercial use. Stability reaffirmed today that users own the media they generate with Stability models.

“We encourage creators to distribute and monetize their work across the entire pipeline,” Ana Guillén, VP of marketing and communications at Stability, said in an emailed statement, “as long as they provide a copy of our community license to the users of those creations and prominently display ‘Powered by Stability AI’ on related websites, user interfaces, blog posts, About pages, or product documentation.”

Stable Diffusion 3.5 Large and Stable Diffusion 3.5 Large Turbo can be self-hosted or used via Stability’s API and third-party platforms including Hugging Face, Fireworks, Replicate, and ComfyUI. Stability says that it plans to release the ControlNets for the models, which allow for fine-tuning, in the next few days.

Stability’s models, like most AI models, are trained on public web data — some of which may be copyrighted or under a restrictive license. Stability and many other AI vendors argue that the fair-use doctrine shields them from copyright claims. But that hasn’t stopped data owners from filing a growing number of class action lawsuits.

Stability leaves it to customers to defend themselves against copyright claims, and, unlike some other vendors, has no payout carve-out in the event that it’s found liable.

Stability does allow data owners to request that their data be removed from its training datasets, however. As of March 2023, artists had removed 80 million images from Stable Diffusion’s training data, according to the company.

Asked about safety measures around misinformation in light of the upcoming U.S. general elections, Stability said that it “has taken — and continues to take — reasonable steps to prevent the misuse of Stable Diffusion by bad actors.” The startup declined to give specific technical details about those steps, however.

As of March, Stability only prohibited explicitly “misleading” content created using its generative AI tools — not content that could influence elections, hurt election integrity, or that features politicians and public figures.
