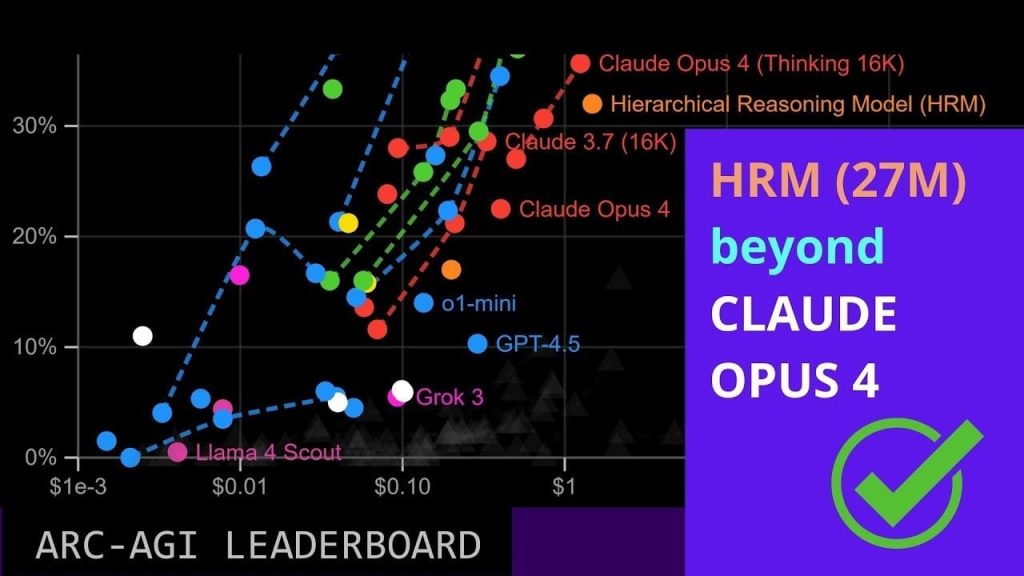

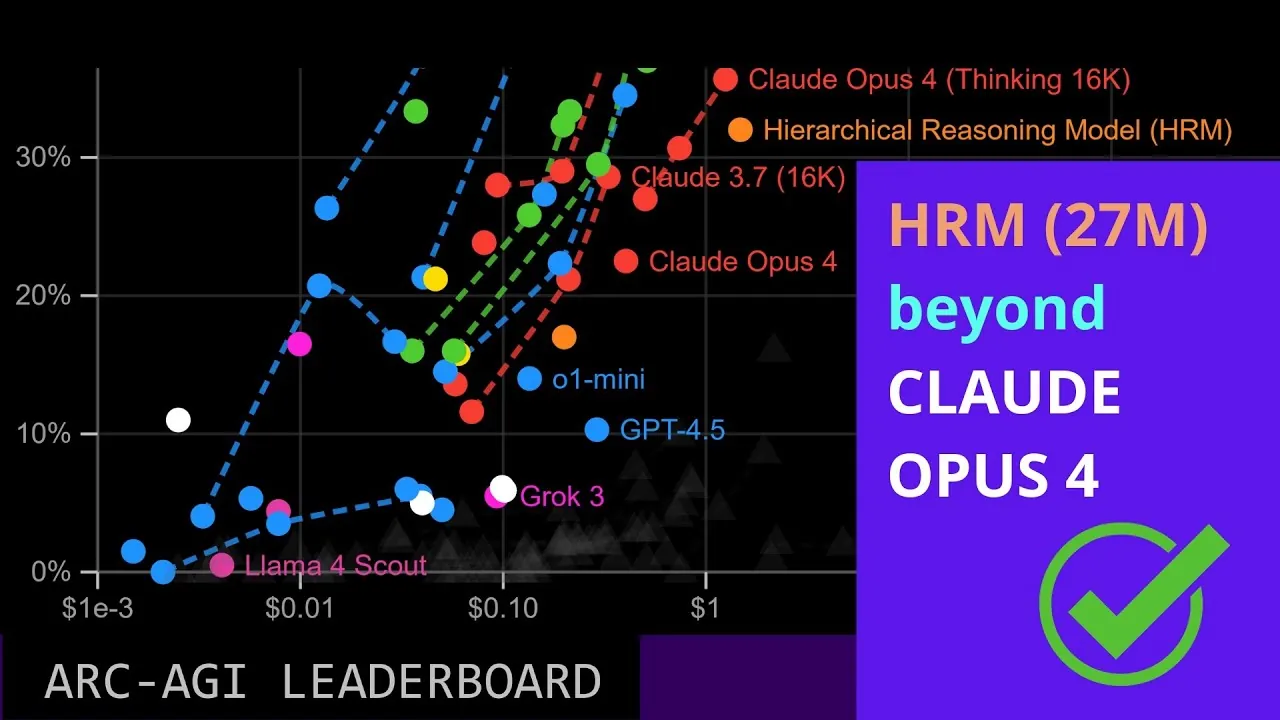

What if the future of artificial intelligence wasn’t about building ever-larger models but about doing more with less? In a surprising upset, the 27-million-parameter Hierarchical Reasoning Model (HRM) has outperformed the far larger Claude Opus 4 on the ARC-AGI benchmark, a result that challenges the long-held belief that bigger models are always better. Claude Opus 4 runs on billions of parameters and vast computational resources, yet HRM’s compact architecture and targeted design show that precision and efficiency can outshine brute force. The result not only resets expectations for AI performance but could also signal a paradigm shift in how AI systems are developed.

Discover AI provides more insight into the features that make HRM stand out in the AI landscape. From its hierarchical reasoning architecture to its use of data augmentation, the model’s success shows how specialization and efficiency can rival, or even surpass, general-purpose giants. But what does this mean for the future of AI? Could smaller, task-specific systems become the new standard, or does this achievement come with trade-offs? By examining HRM’s architecture, performance, and implications, we can see how this tiny model is rewriting the rules of the game and what it might mean for the next chapter of artificial intelligence.

HRM Outperforms Larger AI Models

TL;DR Key Takeaways:

The 27-million-parameter Hierarchical Reasoning Model (HRM) outperformed the much larger Claude Opus 4 on the ARC-AGI benchmark, showcasing the potential of smaller, specialized AI systems.

HRM’s success is attributed to its hierarchical reasoning architecture, which uses iterative refinement loops to optimize performance and computational efficiency.

Strategic data augmentation techniques enable HRM to achieve high performance with minimal resources, requiring as few as 300 samples in some cases.

While HRM excels in narrowly defined tasks, its ability to generalize beyond its training data remains uncertain, highlighting a trade-off between specialization and versatility.

HRM’s compact design and low resource requirements make it a cost-effective and accessible alternative to larger models, paving the way for wider access to AI applications.

HRM’s Performance: A Paradigm Shift in AI Expectations

Despite its compact size, HRM surpassed Claude Opus 4 on the ARC-AGI benchmark. Claude Opus 4 has far greater computational capacity, so HRM’s result challenges the prevailing notion that larger models are inherently superior. Independent evaluations have confirmed HRM’s consistent edge, with only minor variations attributed to dataset configurations. The result shows how precision-engineered, task-specific models can rival or even outperform their larger, more resource-intensive counterparts, and how far a focus on efficiency and specialization can take a small model.

Hierarchical Reasoning: The Core of HRM’s Success

The foundation of HRM’s success lies in its hierarchical reasoning architecture. This multi-layered design combines inner and outer refinement loops. In the inner loops, a fast low-level module carries out several detailed computation steps for each update of a slower high-level module responsible for abstract planning. The outer refinement loop plays a particularly important role: it runs the whole process again, starting from the previous pass’s internal state, and iteratively improves the model’s output until a halting signal or step limit is reached. This structure lets HRM handle complex reasoning tasks accurately while using computational resources sparingly, delivering high performance in narrowly defined domains without relying on massive computational power.
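To make the nested-loop structure more concrete, here is a toy sketch of the control flow only. In HRM both modules are learned recurrent networks and the halting decision is learned; here, as an assumption for illustration, hand-written update rules stand in for them (together they approximate a square root), and a fixed tolerance stands in for the learned halting signal. All function names and parameters are hypothetical.

```python
import math
from typing import Tuple


def low_level_step(z_low: float, z_high: float, x: float, rate: float = 0.5) -> float:
    """Fast, fine-grained update: move part of the way toward a sub-goal set by the high-level state."""
    subgoal = 0.5 * (z_high + x / z_high)  # Newton-style target proposed from the high-level estimate
    return z_low + rate * (subgoal - z_low)


def high_level_step(z_high: float, z_low: float) -> float:
    """Slow, abstract update: adopt the result the low-level module settled on.

    z_high is unused in this toy rule but kept to mirror the two-module interface.
    """
    return z_low


def hierarchical_solve(x: float, n_cycles: int = 2, t_steps: int = 4,
                       max_segments: int = 50, tol: float = 1e-9) -> Tuple[float, int]:
    """Toy nested refinement loops; returns (answer, outer segments used)."""
    if x <= 0:
        raise ValueError("x must be positive")
    z_high = z_low = 1.0
    for segment in range(1, max_segments + 1):  # outer refinement loop
        for _ in range(n_cycles):               # slow high-level cycles
            for _ in range(t_steps):            # fast low-level steps per high-level cycle
                z_low = low_level_step(z_low, z_high, x)
            z_high = high_level_step(z_high, z_low)
        y = z_high
        if abs(y * y - x) < tol:  # stand-in for a learned halting decision
            return y, segment
    return z_high, max_segments


answer, segments = hierarchical_solve(2.0)
print(f"sqrt(2) ~ {answer:.9f} after {segments} outer segments")
```

Each high-level cycle lets the low-level module take several small steps toward a sub-goal before the high-level state changes, and the outer loop repeats the whole process until the stopping check passes. That division of labor is the idea the hierarchical design is built around, even though HRM learns its updates rather than hard-coding them.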

Data Augmentation: Maximizing Efficiency with Minimal Resources

A key factor in HRM’s efficiency is its strategic use of data augmentation. The model employs task-specific techniques, such as rotation, flipping, and recoloring, to enhance its training process. Remarkably, HRM achieves near-maximum performance with minimal augmentation, requiring as few as 300 samples in some cases. This approach reduces the need for extensive datasets, accelerates training, and positions HRM as a cost-effective solution for specialized tasks. By doing more with less, HRM exemplifies how targeted strategies can overcome resource limitations while maintaining high performance.
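The augmentations named above can be sketched in plain Python. This is a minimal illustration, not HRM’s actual data pipeline: it assumes ARC-style grids stored as lists of integer color codes 0 to 9, treats color 0 as a fixed background (an assumption of this sketch), and all function names are hypothetical. The key detail is that the same rotation or flip and the same color permutation are applied to a task’s input and output grids, so the transformed pair still encodes the same rule.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]  # ARC-style grid: rows of color codes 0-9


def rotate90(grid: Grid) -> Grid:
    """Rotate a grid 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]


def flip_horizontal(grid: Grid) -> Grid:
    """Mirror a grid left to right."""
    return [row[::-1] for row in grid]


def dihedral_variants(grid: Grid) -> List[Grid]:
    """All 8 rotations and reflections of a grid (the dihedral group D4)."""
    variants = []
    current = grid
    for _ in range(4):
        variants.append(current)
        variants.append(flip_horizontal(current))
        current = rotate90(current)
    return variants


def recolor(grid: Grid, perm: List[int]) -> Grid:
    """Replace every color c with perm[c]."""
    return [[perm[c] for c in row] for row in grid]


def augment_pair(inp: Grid, out: Grid, num_variants: int,
                 seed: int = 0) -> List[Tuple[Grid, Grid]]:
    """Create augmented copies of one input/output example.

    The same geometric transform and color permutation are applied to both
    grids so the underlying rule is preserved. Color 0 stays fixed.
    """
    rng = random.Random(seed)
    in_variants = dihedral_variants(inp)
    out_variants = dihedral_variants(out)
    pairs = []
    for _ in range(num_variants):
        k = rng.randrange(8)         # pick one of the 8 rotations/reflections
        colors = list(range(1, 10))
        rng.shuffle(colors)          # random permutation of colors 1-9
        perm = [0] + colors          # perm[0] == 0 keeps the background fixed
        pairs.append((recolor(in_variants[k], perm), recolor(out_variants[k], perm)))
    return pairs
```

Calling `augment_pair(inp, out, num_variants=300)` would produce 300 transformed copies of a single example, the scale the article mentions, though how HRM’s own pipeline counts and combines augmentations may differ.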

Specialization and Its Trade-Offs

HRM’s design prioritizes specialization, allowing it to excel in narrowly defined tasks. However, its ability to generalize beyond its training data remains an open question. While its performance on the ARC AGI benchmark highlights its effectiveness in specific domains, further research is needed to evaluate its adaptability to broader applications. This trade-off between specialization and generalization reflects a broader challenge in AI: balancing task-specific performance with versatility. Understanding and addressing this balance will be crucial for the future development of AI systems like HRM.

Efficiency and Accessibility: A New Standard

One of HRM’s standout features is its efficiency. Its compact architecture and streamlined training process make it well suited to deployment on low-resource hardware, such as laptops or edge devices. In contrast to large models like GPT-4 or Claude Opus 4, which demand substantial computational resources, HRM offers a cost-effective alternative. By delivering high performance at a fraction of the cost, HRM points toward more accessible AI technologies, bringing advanced capabilities to a wider range of users and industries.
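A quick back-of-the-envelope calculation shows why the parameter count matters for deployment. The sketch below estimates the memory needed to store the weights alone at common numeric precisions, ignoring activations and runtime overhead. The 70-billion-parameter comparison is a hypothetical figure chosen only for scale, since Claude Opus 4’s size has not been disclosed; the function name is likewise illustrative.

```python
def weight_memory_mb(num_params: int, bytes_per_param: int) -> float:
    """Approximate size of the model weights alone, in megabytes (1 MB = 10**6 bytes)."""
    return num_params * bytes_per_param / 1e6


HRM_PARAMS = 27_000_000          # parameter count quoted in the article
LARGE_PARAMS = 70_000_000_000    # hypothetical large model, for scale only

for label, nbytes in [("fp32", 4), ("fp16", 2), ("int8", 1)]:
    hrm = weight_memory_mb(HRM_PARAMS, nbytes)
    large = weight_memory_mb(LARGE_PARAMS, nbytes)
    print(f"{label}: HRM ~{hrm:,.0f} MB vs hypothetical 70B model ~{large:,.0f} MB")
```

At fp32 this works out to about 108 MB for HRM’s weights, small enough to fit comfortably in a laptop’s memory, versus roughly 140 GB at fp16 for the hypothetical 70-billion-parameter model.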

Future Research Opportunities

The success of HRM opens several promising avenues for further exploration. Key areas of focus include:

Investigating the generalizability of HRM’s refinement mechanisms across diverse tasks and domains.

Enhancing data augmentation techniques to improve performance in a broader range of applications.

Exploring HRM’s potential in specialized fields, such as biophysics, molecular design, and other domain-specific challenges.

These research directions could refine HRM’s capabilities and broaden its applicability, establishing it as a practical tool for specialized problems. By pursuing them, researchers can open up new possibilities for HRM and similar models.

Implications for the Future of AI

The emergence of HRM as a high-performing, small-scale AI model has significant implications for the AI landscape. By demonstrating that compact, task-specific systems can rival or surpass larger, general-purpose models, HRM challenges the dominance of resource-intensive AI architectures. This shift toward smaller, more efficient models could transform industries requiring domain-specific expertise, offering tailored solutions at a fraction of the computational cost. As AI continues to evolve, HRM’s success highlights the potential for innovative, resource-conscious approaches to redefine the boundaries of what AI can achieve.

Media Credit: Discover AI

Disclosure: Some of our articles include affiliate links. If you buy something through one of these links, Geeky Gadgets may earn an affiliate commission. Learn about our Disclosure Policy.