Consider maintaining and developing an e-commerce platform that processes millions of transactions every minute, generating large amounts of telemetry data, including metrics, logs and traces across multiple microservices. When critical incidents occur, on-call engineers face the daunting task of sifting through an ocean of data to uncover relevant signals and insights. It is like searching for a needle in a haystack.

This makes observability a source of frustration rather than insight. To alleviate this major pain point, I started exploring how the Model Context Protocol (MCP) could add context to logs and distributed traces and draw inferences from them. In this article, I’ll outline my experience building an AI-powered observability platform, explain the system architecture and share actionable insights learned along the way.

Why is observability challenging?

In modern software systems, observability is not a luxury; it’s a basic necessity. The ability to measure and understand system behavior is foundational to reliability, performance and user trust. As the saying goes, “What you cannot measure, you cannot improve.”

Yet, achieving observability in today’s cloud-native, microservice-based architectures is more difficult than ever. A single user request may traverse dozens of microservices, each emitting logs, metrics and traces. The result is an abundance of telemetry data:

Tens of terabytes of logs per day

Tens of millions of metric data points and pre-aggregates

Millions of distributed traces

Thousands of correlation IDs generated every minute

The challenge is not only the data volume, but the data fragmentation. According to New Relic’s 2023 Observability Forecast Report, 50% of organizations report siloed telemetry data, with only 33% achieving a unified view across metrics, logs and traces.

Logs tell one part of the story, metrics another, traces yet another. Without a consistent thread of context, engineers are forced into manual correlation, relying on intuition, tribal knowledge and tedious detective work during incidents.

Because of this complexity, I started to wonder: How can AI help us get past fragmented data and offer comprehensive, useful insights? Specifically, can we make telemetry data intrinsically more meaningful and accessible for both humans and machines using a structured protocol such as MCP? This project’s foundation was shaped by that central question.

Understanding MCP: A data pipeline perspective

Anthropic defines MCP as an open standard that allows developers to create a secure two-way connection between data sources and AI tools. This structured data pipeline includes:

Contextual ETL for AI: Standardizing context extraction from multiple data sources.

Structured query interface: Giving AI queries access to data layers that are transparent and easily understandable.

Semantic data enrichment: Embedding meaningful context directly into telemetry signals.

This has the potential to shift platform observability away from reactive problem solving and toward proactive insights.
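As a rough illustration of the first and third items, the sketch below normalizes records from two hypothetical sources into one shared context schema. The alias names (`reqId`, `svc`, `uid` and so on) and the `normalize` helper are assumptions made for this example; they are not part of MCP itself.

```python
from typing import Any

# Hypothetical mapping from source-specific field names to one shared schema.
FIELD_ALIASES = {
    "request_id": ("request_id", "reqId", "x-request-id"),
    "service_name": ("service_name", "svc", "service"),
    "user_id": ("user_id", "uid"),
}


def normalize(record: dict[str, Any], source: str) -> dict[str, Any]:
    """Return the record's context using the shared field names."""
    context: dict[str, Any] = {"source": source}
    for canonical, aliases in FIELD_ALIASES.items():
        for alias in aliases:
            if alias in record:
                context[canonical] = record[alias]
                break  # First matching alias wins.
    return context


# Two made-up records that describe the same request in different vocabularies.
log_record = {"reqId": "req-1a2b3c4d", "svc": "checkout", "msg": "Payment processed"}
span_record = {"request_id": "req-1a2b3c4d", "service": "payment", "uid": "user-42"}

normalized = [normalize(log_record, "logs"), normalize(span_record, "traces")]
```

Once both records carry the same `request_id` key, a downstream consumer can join them without knowing which system produced them.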

System architecture and data flow

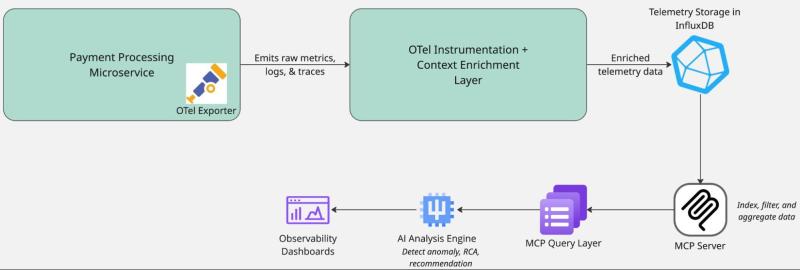

Before diving into the implementation details, let’s walk through the system architecture.

In the first layer, we develop the contextual telemetry data by embedding standardized metadata in the telemetry signals, such as distributed traces, logs and metrics. Then, in the second layer, enriched data is fed into the MCP server to index, add structure and provide client access to context-enriched data using APIs. Finally, the AI-driven analysis engine utilizes the structured and enriched telemetry data for anomaly detection, correlation and root-cause analysis to troubleshoot application issues.

This layered design ensures that AI and engineering teams receive context-driven, actionable insights from telemetry data.
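To make the second layer more concrete: MCP messages use JSON-RPC 2.0, and a client invokes a server-side tool with a `tools/call` request. The sketch below builds such a request for a hypothetical `query_logs` tool and answers it with a toy in-process dispatcher. The tool name, its arguments, the sample logs and the `handle` function are illustrative assumptions; a real server would normally rely on an MCP SDK rather than hand-rolled dispatch.

```python
import json

# Hypothetical in-memory log store, used for illustration only.
LOGS = [
    {"message": "Starting checkout process", "context": {"request_id": "req-1a2b3c4d"}},
    {"message": "Payment processed", "context": {"request_id": "req-1a2b3c4d"}},
    {"message": "Cart updated", "context": {"request_id": "req-99999999"}},
]


def query_logs(request_id: str) -> list[dict]:
    """Hypothetical tool: return logs that carry the given request_id."""
    return [log for log in LOGS if log["context"].get("request_id") == request_id]


TOOLS = {"query_logs": query_logs}


def handle(raw_request: str) -> str:
    """Toy dispatcher for a JSON-RPC 2.0 `tools/call` request."""
    request = json.loads(raw_request)
    params = request["params"]
    result = TOOLS[params["name"]](**params["arguments"])
    response = {
        "jsonrpc": "2.0",
        "id": request["id"],
        # Tool results are returned as a list of content items.
        "result": {"content": [{"type": "text", "text": json.dumps(result)}]},
    }
    return json.dumps(response)


request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "query_logs", "arguments": {"request_id": "req-1a2b3c4d"}},
})
response = json.loads(handle(request))
matching_logs = json.loads(response["result"]["content"][0]["text"])
```

The point of the structure is that the AI client never touches the raw log store; it only sees named tools with typed arguments and structured results.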

Implementation deep dive: A three-layer system

Let’s explore the actual implementation of our MCP-powered observability platform, focusing on the data flows and transformations at each step.

Layer 1: Context-enriched data generation

First, we need to ensure our telemetry data contains enough context for meaningful analysis. The core insight is that data correlation needs to happen at creation time, not analysis time.

    import json
    import logging
    import uuid

    from opentelemetry import trace

    logger = logging.getLogger(__name__)
    tracer = trace.get_tracer(__name__)

    # Function signature inferred from the variables used in the body.
    def process_checkout(user_id, cart_items, payment_method):
        """Simulate a checkout process with context-enriched telemetry."""
        # Generate correlation IDs
        order_id = f"order-{uuid.uuid4().hex[:8]}"
        request_id = f"req-{uuid.uuid4().hex[:8]}"

        # Initialize the context dictionary that is applied to every signal
        context = {
            "user_id": user_id,
            "order_id": order_id,
            "request_id": request_id,
            "cart_item_count": len(cart_items),
            "payment_method": payment_method,
            "service_name": "checkout",
            "service_version": "v1.0.0",
        }

        # Start an OTel trace with the same context
        with tracer.start_as_current_span(
            "process_checkout",
            attributes={k: str(v) for k, v in context.items()},
        ) as checkout_span:
            # Log using the same context
            logger.info("Starting checkout process", extra={"context": json.dumps(context)})

            # Context propagation: the child span joins the parent's trace
            with tracer.start_as_current_span("process_payment"):
                # Process payment logic…
                logger.info("Payment processed", extra={"context": json.dumps(context)})

Code 1. Context enrichment for logs and traces

This approach ensures that every telemetry signal (logs, metrics, traces) contains the same core contextual data, solving the correlation problem at the source.
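One way to avoid passing `context` by hand to every log call is to hold it in a `contextvars.ContextVar` and attach it with a `logging.Filter`. The sketch below uses only the standard library. The names `request_context` and `ContextFilter`, the logger name and the sample values are hypothetical and are not taken from the original project.

```python
import contextvars
import io
import json
import logging

# Holds the per-request context; the default is an empty dict.
request_context = contextvars.ContextVar("request_context", default={})


class ContextFilter(logging.Filter):
    """Attach the current request context to every log record."""

    def filter(self, record: logging.LogRecord) -> bool:
        record.context = json.dumps(request_context.get())
        return True  # Never drop the record; only enrich it.


stream = io.StringIO()  # Captures output so the example is easy to inspect.
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter("%(message)s context=%(context)s"))
handler.addFilter(ContextFilter())

logger = logging.getLogger("checkout_example")
logger.setLevel(logging.INFO)
logger.addHandler(handler)
logger.propagate = False

# Set the context once at the start of the request...
request_context.set({"request_id": "req-1a2b3c4d", "service_name": "checkout"})

# ...and every later log line carries it without extra arguments.
logger.info("Starting checkout process")
logger.info("Payment processed")

output_lines = stream.getvalue().splitlines()
```

Because a `ContextVar` is scoped to the current thread or asyncio task, concurrent requests do not see each other's context, which a plain module-level dictionary would not guarantee.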

Layer 2: Data access through the MCP server

Next, I built an MCP server that transforms raw telemetry into a queryable API. The core data operations here involve the following:

Indexing: Creating efficient lookups across contextual fields

Filtering: Selecting relevant subsets of telemetry data

Aggregation: Computing statistical measures across time windows

    from datetime import datetime

    # LOG_DB (assumed to be an in-memory list of log dicts) and LogQuery (the
    # request model) are defined elsewhere and are not shown in this excerpt.
    def query_logs(query: LogQuery):
        """Query logs with specific filters."""
        results = LOG_DB.copy()

        # Apply contextual filters
        if query.request_id:
            results = [log for log in results if log["context"].get("request_id") == query.request_id]
        if query.user_id:
            results = [log for log in results if log["context"].get("user_id") == query.user_id]

        # Apply time-based filters
        if query.time_range:
            start_time = datetime.fromisoformat(query.time_range["start"])
            end_time = datetime.fromisoformat(query.time_range["end"])
            results = [
                log for log in results
                if start_time <= datetime.fromisoformat(log["timestamp"]) <= end_time
            ]

        # Sort by timestamp, newest first
        results = sorted(results, key=lambda x: x["timestamp"], reverse=True)

        return results[:query.limit] if query.limit else results

Code 2. Data transformation using the MCP server

This layer transforms our telemetry from an unstructured data lake into a structured, query-optimized interface that an AI system can efficiently navigate.
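The `query_logs` function above covers filtering by scanning the whole log store. The other two operations, indexing and aggregation, could look roughly like the sketch below. It assumes log entries shaped like those in Code 2 (a `context` dict and an ISO 8601 `timestamp`) plus a `level` field that the original excerpt does not show; `build_index` and `count_per_minute` are hypothetical helper names, and the sample data is made up.

```python
from collections import Counter, defaultdict
from datetime import datetime

logs = [
    {"timestamp": "2025-01-01T10:00:05", "level": "INFO",
     "context": {"request_id": "req-a", "user_id": "u1"}},
    {"timestamp": "2025-01-01T10:00:40", "level": "ERROR",
     "context": {"request_id": "req-a", "user_id": "u1"}},
    {"timestamp": "2025-01-01T10:01:10", "level": "ERROR",
     "context": {"request_id": "req-b", "user_id": "u2"}},
]


def build_index(entries, field):
    """Indexing: map each value of a context field to matching log positions."""
    index = defaultdict(list)
    for position, entry in enumerate(entries):
        value = entry["context"].get(field)
        if value is not None:
            index[value].append(position)
    return index


def count_per_minute(entries, level):
    """Aggregation: count entries of one level in one-minute windows."""
    counts = Counter()
    for entry in entries:
        if entry["level"] == level:
            # Truncate the timestamp to the start of its minute.
            window = datetime.fromisoformat(entry["timestamp"]).replace(
                second=0, microsecond=0
            )
            counts[window.isoformat()] += 1
    return counts


by_request = build_index(logs, "request_id")
logs_for_req_a = [logs[i] for i in by_request["req-a"]]  # Lookup without a full scan.
errors = count_per_minute(logs, "ERROR")
```

An index like this trades memory and write-time work for fast lookups, which matters once the store is too large to scan on every AI query.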

Layer 3: AI-driven analysis engine

The final layer is an AI component that consumes data through the MCP interface, performing:

Multi-dimensional analysis: Correlating signals across logs, metrics and traces.

Anomaly detection: Identifying statistical deviations from normal patterns.

Root cause determination: Using contextual clues to isolate likely sources of issues.

    import statistics
    from datetime import datetime, timedelta

    # Method of the analysis engine class. The signature is inferred from the
    # variables used in the body; the name and default window are assumptions.
    def analyze_incident(self, request_id=None, user_id=None, timeframe_minutes=30):
        """Analyze telemetry data to determine root cause and recommendations."""
        # Define analysis time window
        end_time = datetime.now()
        start_time = end_time - timedelta(minutes=timeframe_minutes)
        time_range = {"start": start_time.isoformat(), "end": end_time.isoformat()}

        # Fetch relevant telemetry based on context
        logs = self.fetch_logs(request_id=request_id, user_id=user_id, time_range=time_range)

        # Extract services mentioned in logs for targeted metric analysis
        services = {log.get("service", "unknown") for log in logs}

        # Get metrics for those services
        metrics_by_service = {}
        for service in services:
            for metric_name in ["latency", "error_rate", "throughput"]:
                metric_data = self.fetch_metrics(service, metric_name, time_range)

                # Calculate statistical properties
                values = [point["value"] for point in metric_data["data_points"]]
                metrics_by_service[f"{service}.{metric_name}"] = {
                    "mean": statistics.mean(values) if values else 0,
                    "median": statistics.median(values) if values else 0,
                    "stdev": statistics.stdev(values) if len(values) > 1 else 0,
                    "min": min(values) if values else 0,
                    "max": max(values) if values else 0,
                }

        # Identify anomalies using the z-score of each metric's maximum
        anomalies = []
        for metric_name, stats in metrics_by_service.items():
            if stats["stdev"] > 0:  # Avoid division by zero
                z_score = (stats["max"] - stats["mean"]) / stats["stdev"]
                if z_score > 2:  # More than 2 standard deviations
                    anomalies.append({
                        "metric": metric_name,
                        "z_score": z_score,
                        "severity": "high" if z_score > 3 else "medium",
                    })

        # ai_summary and ai_recommendation come from the AI inference step,
        # which is not shown in this excerpt.
        return {
            "summary": ai_summary,
            "anomalies": anomalies,
            "impacted_services": list(services),
            "recommendation": ai_recommendation,
        }

Code 3. Incident analysis, anomaly detection and inferencing method
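Code 3 scores only the maximum value of each metric against the mean and standard deviation of the same window. A common variation, sketched below, scores each recent point against a separate baseline window, so an outlier cannot inflate the statistics it is compared against. The z-score is z = (x - mean) / stdev. The function name `find_anomalies` and the latency samples are made up for illustration; the thresholds of 2 and 3 mirror Code 3.

```python
import statistics


def find_anomalies(baseline, recent, threshold=2.0):
    """Score each recent value against the baseline mean and standard deviation."""
    if len(baseline) < 2:
        return []  # stdev needs at least two points.
    mean = statistics.mean(baseline)
    stdev = statistics.stdev(baseline)
    if stdev == 0:
        return []  # A flat baseline gives no scale for a z-score.
    anomalies = []
    for value in recent:
        z_score = (value - mean) / stdev
        if z_score > threshold:
            anomalies.append({
                "value": value,
                "z_score": round(z_score, 2),
                "severity": "high" if z_score > 3 else "medium",
            })
    return anomalies


# Made-up latency samples in milliseconds.
baseline_latency = [100, 102, 98, 101, 99, 100, 103, 97]
recent_latency = [101, 103, 150]

anomalies = find_anomalies(baseline_latency, recent_latency)
```

The separate baseline matters for short windows. With the sample standard deviation, the largest z-score any point can reach within its own window of n points is (n - 1) / sqrt(n), which is about 1.79 for n = 5. A window of five or fewer points scored against itself can therefore never cross a threshold of 2.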

Impact of MCP-enhanced observability

Integrating MCP with observability platforms could improve the management and comprehension of complex telemetry data. The potential benefits include:

Faster anomaly detection, resulting in reduced mean time to detect (MTTD) and mean time to resolve (MTTR).

Easier identification of root causes for issues.

Less noise and fewer unactionable alerts, thus reducing alert fatigue and improving developer productivity.

Fewer interruptions and context switches during incident resolution, resulting in improved operational efficiency for an engineering team.

Actionable insights

Here are some key insights from this project that will help teams with their observability strategy.

Contextual metadata should be embedded early in the telemetry generation process to facilitate downstream correlation.

Structured, API-driven query interfaces should be built to make telemetry more accessible.

Context-aware AI should focus its analysis on context-rich data to improve accuracy and relevance.

Context enrichment and AI methods should be refined on a regular basis using practical operational feedback.

Conclusion

Combining structured data pipelines with AI holds enormous promise for observability. We can transform vast telemetry data into actionable insights by leveraging structured protocols such as MCP and AI-driven analyses, resulting in proactive rather than reactive systems. Lumigo identifies three pillars of observability (logs, metrics and traces), all of which are essential. Without integration, engineers are forced to manually correlate disparate data sources, slowing incident response.

Getting there requires structural changes to how we generate telemetry, as well as analytical techniques to extract meaning from it.

Pronnoy Goswami is an AI and data scientist with more than a decade in the field.