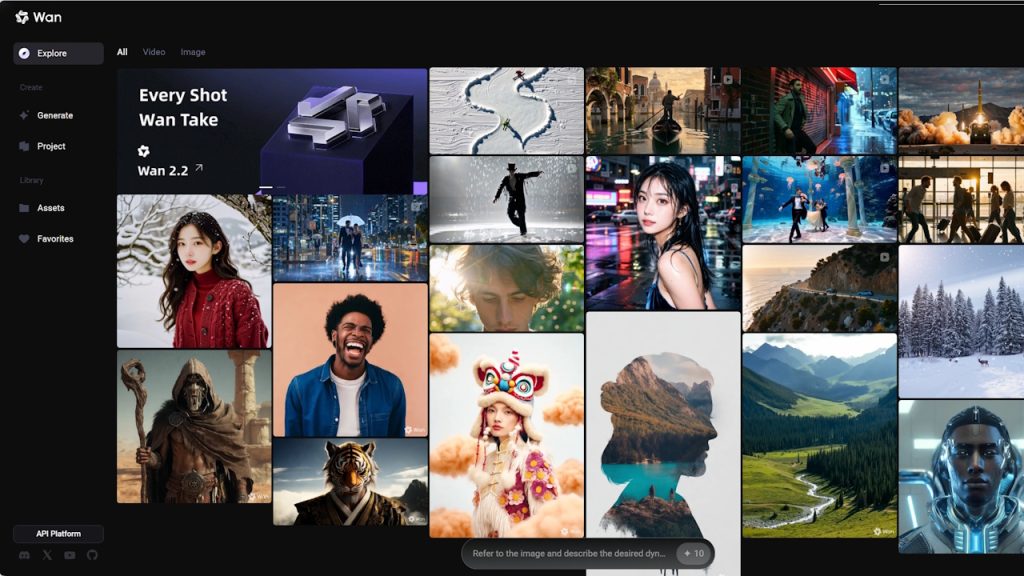

Chinese tech giant Alibaba has released Wan2.2, a major open-source update to its AI video generation models. Unveiled on July 28, the new series directly challenges paid rivals like OpenAI’s Sora and Google’s Veo. It introduces an advanced Mixture-of-Experts (MoE) architecture to improve video quality.

The release also includes an efficient 5B model that generates 720p video on consumer-grade GPUs. The move is part of Alibaba’s strategy to lead the open-source AI space by giving powerful, free tools to developers and researchers. Wan2.2 succeeds the company’s Wan2.1 models, which were released earlier this year.

Under the Hood: MoE Architecture and Consumer-Grade HD Video

Wan2.2’s core innovation is the introduction of a Mixture-of-Experts (MoE) architecture into its video diffusion model, a first for the field. This design, widely validated in large language models, increases the model’s total capacity without a corresponding rise in computational cost during inference, because only part of the network runs at any given step. The architecture is tailored to the video generation process: it splits the denoising task across specialized experts according to how much noise remains.

The MoE system employs a two-expert design. A “high-noise” expert handles the early stages of generation, focusing on establishing the overall layout and motion of the video. As the process continues, a “low-noise” expert takes over to refine intricate details and enhance visual quality.
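The handoff between the two experts can be pictured as a simple switch on the current noise level. The following is a minimal sketch, not the Wan2.2 implementation: the `BOUNDARY` value, the function names, and the toy scalar "experts" are all hypothetical and exist only to illustrate the routing idea.

```python
from typing import Callable, Dict, List

# Hypothetical boundary: the noise level at which control passes from the
# high-noise expert to the low-noise expert. Illustrative value only; it is
# not taken from the Wan2.2 release.
BOUNDARY = 0.5


def select_expert(noise_level: float, boundary: float = BOUNDARY) -> str:
    """Pick the expert for one step, given noise_level in [0, 1]."""
    return "high_noise" if noise_level >= boundary else "low_noise"


def denoise(latent: float, steps: int,
            experts: Dict[str, Callable[[float, float], float]]) -> List[str]:
    """Run a toy denoising loop and record which expert ran at each step."""
    used = []
    for i in range(steps):
        noise_level = 1.0 - i / steps  # starts at 1.0 (pure noise), decreases
        name = select_expert(noise_level)
        latent = experts[name](latent, noise_level)  # only one expert runs
        used.append(name)
    return used


# Stand-in experts operating on a scalar "latent" instead of a video tensor.
toy_experts = {
    "high_noise": lambda x, n: x * 0.5,  # stands in for coarse layout and motion
    "low_noise": lambda x, n: x * 0.9,   # stands in for fine-detail refinement
}

schedule = denoise(latent=1.0, steps=4, experts=toy_experts)
print(schedule)
```

Because exactly one expert is called per step, the per-step cost in this sketch is that of a single expert, regardless of how many experts exist in total.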

According to the project’s technical documentation, this approach increases the model’s total parameter count to 27 billion, but with only 14 billion parameters active at any given step, it maintains the computational footprint of a much smaller model.
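As a quick check on those figures, the share of the model that is active at each step can be computed directly. This is plain arithmetic on the two numbers quoted above; the variable names are illustrative.

```python
TOTAL_PARAMS = 27e9   # total parameters across both experts, as quoted above
ACTIVE_PARAMS = 14e9  # parameters active at any single denoising step

# Fraction of the full model that participates in one step.
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS
print(f"active fraction per step: {active_fraction:.1%}")  # prints 51.9%
```

In other words, roughly half of the total parameters do the work at any one step, which is why per-step compute tracks the 14B figure rather than the 27B one.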

To complement this new architecture, Wan2.2 was trained on a significantly expanded and refined dataset, featuring 65.6% more images and 83.2% more videos than its predecessor, Wan2.1. The team placed a heavy emphasis on creating “cinematic-level aesthetics” by using meticulously curated data with detailed labels for lighting, composition, contrast, and color tone.

This allows for more precise and controllable generation, enabling users to create videos with customizable aesthetic preferences, as detailed in the official announcement.

Perhaps the most significant part of the release for accessibility is the new TI2V-5B model, a compact 5-billion-parameter version designed for efficient deployment. This hybrid model natively supports both text-to-video and image-to-video tasks within a single unified framework. Its efficiency is driven by a new high-compression VAE (Variational Autoencoder), which the project’s documentation describes as compressing video by 4×16×16 across time, height, and width. The smaller latent representation is what makes high-definition video generation feasible on non-enterprise hardware.
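To see why VAE compression matters, it helps to compare the size of a raw clip with the latent grid the diffusion model actually works on. The sketch below assumes a 4×16×16 (time × height × width) ratio as described in the project's README. The `(frames - 1) // 4 + 1` convention for the temporal axis and the example clip size are illustrative assumptions, and `latent_shape` is a hypothetical helper, not part of any Wan2.2 API.

```python
from typing import Tuple


def latent_shape(frames: int, height: int, width: int,
                 t_ratio: int = 4, s_ratio: int = 16) -> Tuple[int, int, int]:
    """Return the (frames, height, width) of the latent grid.

    Assumes the first frame is encoded on its own and the remaining frames
    are grouped t_ratio at a time (an assumed convention for video VAEs).
    """
    if (frames - 1) % t_ratio or height % s_ratio or width % s_ratio:
        raise ValueError("clip dimensions must align with the compression ratios")
    return ((frames - 1) // t_ratio + 1, height // s_ratio, width // s_ratio)


# Example clip: about 5 seconds at 24 fps, 1280x704 pixels (illustrative).
shape = latent_shape(frames=121, height=704, width=1280)
print(shape)  # (31, 44, 80)

pixel_positions = 121 * 704 * 1280
latent_positions = shape[0] * shape[1] * shape[2]
print(f"positions reduced by a factor of about {pixel_positions / latent_positions:.0f}")
```

The diffusion model's cost grows with the number of latent positions, so shrinking that grid is what lets a 5B model handle 720p clips.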

This breakthrough allows the TI2V-5B model to generate 720p video at 24fps on consumer-grade GPUs like the NVIDIA RTX 4090, requiring less than 24GB of VRAM. This brings advanced AI video tools to a much broader audience of developers, researchers, and creators. To accelerate this adoption, the Wan2.2 models have already been integrated into popular community tools, including ComfyUI and Hugging Face Diffusers.
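A rough back-of-the-envelope estimate shows why a 5-billion-parameter model is plausible on a 24GB card. The sketch below counts only the memory for model weights at common precisions; activations, the VAE, and the text encoder add to the total, so this is a lower bound rather than a measured figure. The helper name is hypothetical.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in GiB."""
    return num_params * bytes_per_param / 2**30


PARAMS_5B = 5e9

for precision, nbytes in [("fp32", 4), ("fp16/bf16", 2)]:
    gib = weight_memory_gib(PARAMS_5B, nbytes)
    print(f"{precision}: {gib:.1f} GiB for weights alone")
```

At 16-bit precision the weights take roughly 9.3 GiB, leaving headroom on a 24GB GPU for the rest of the pipeline.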

Alibaba’s decision to release Wan2.2 under a permissive Apache 2.0 license is a direct strategic challenge to the closed, proprietary models that dominate the high end of the market. Companies like OpenAI and Google have kept their most advanced video models, Sora and Veo, behind paywalls and APIs.

By providing a powerful, free alternative, Alibaba is escalating the competition and betting that an open ecosystem will foster faster innovation and wider adoption. This strategy mirrors the disruption seen in AI image generation, where open-source models have become formidable competitors to closed systems.

Part of a Broader AI Ecosystem Offensive

The Wan2.2 launch is not an isolated event. It is the latest move in a rapid-fire series of major AI releases from Alibaba, signaling a comprehensive offensive to establish itself as a leader across multiple AI domains. This flurry of activity demonstrates a clear strategy to build a full suite of open tools for developers.

In the week prior, the company unveiled its new flagship reasoning model, Qwen3-Thinking-2507, which topped key industry benchmarks. It also launched a powerful agentic coding model, Qwen3-Coder, for automating software development tasks.

The separate reasoning model reflects a change in approach that Alibaba Cloud set out in a statement explaining its decision to abandon the “hybrid thinking” mode of earlier models. A spokesperson said, “after discussing with the community and reflecting on the matter, we have decided to abandon the hybrid thinking mode. We will now train the Instruct and Thinking models separately to achieve the best possible quality.”

To showcase the real-world application of its AI, Alibaba also previewed its new “Quark AI” smart glasses. The wearables are powered by the Qwen3 series, a move designed to build market confidence by connecting its software prowess to a tangible consumer product.

Song Gang of Alibaba’s Intelligent Information business group shared his vision for the technology, stating, “AI glasses will become the most important form of wearable intelligence – it will serve as another pair of eyes and ears for humans.”

A Timely Launch Amid Benchmark Skepticism

However, this aggressive push comes at a time of growing industry skepticism about the reliability of AI benchmarks. Just days before the recent Qwen releases, a study alleged that Alibaba’s older Qwen2.5 model had “cheated” on a key math test by memorizing answers from contaminated training data.

The controversy highlights a systemic issue of “teaching to the test” in the race for leaderboard dominance. As AI strategist Nate Jones noted, “the moment we set leaderboard dominance as the goal, we risk creating models that excel in trivial exercises and flounder when facing reality.” This sentiment is echoed by experts like Sara Hooker, Head of Cohere Labs, who argued that “when a leaderboard is important to a whole ecosystem, the incentives are aligned for it to be gamed.”

While Alibaba has moved on to newer models like Qwen3, the allegations cast a shadow over the “benchmark wars” that define AI competition. The Wan2.2 release, with its focus on tangible capabilities and accessibility, may be an attempt to shift the narrative from leaderboard scores to real-world utility and open innovation.