In a recent breakthrough at MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL), researchers have found a way to teach robots about their own bodies, using sight as their guide. The key innovation is a system called Neural Jacobian Fields (NJF), which lets a robot understand and control itself without built-in sensors or complex hand-coded models. According to MIT News, NJF relies purely on visual data collected by cameras to work out how the robot should move.

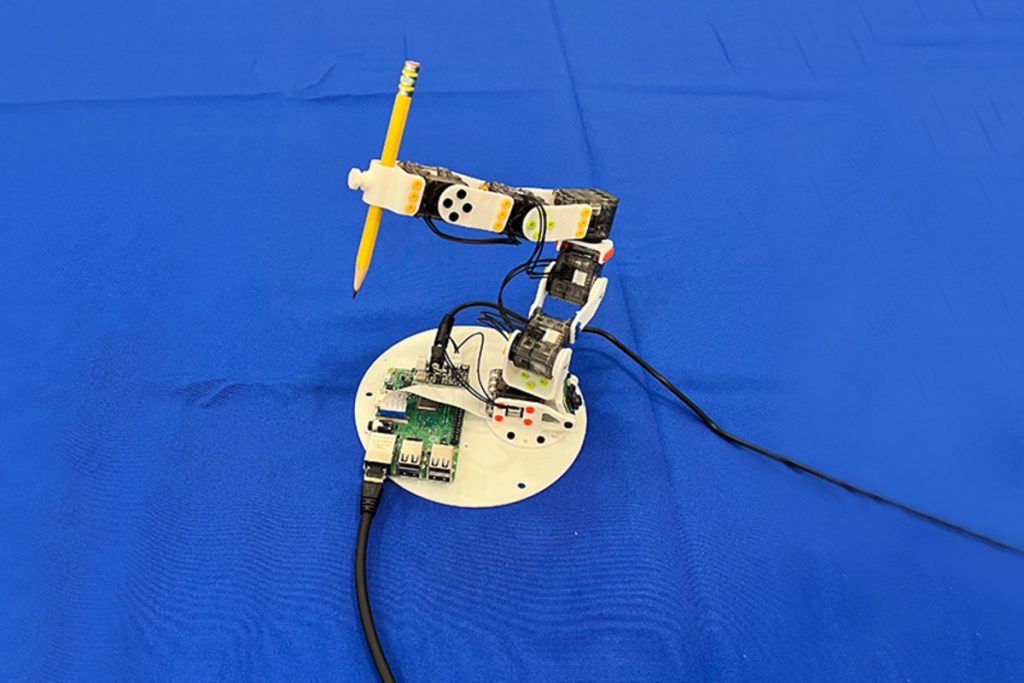

Giving a robot the ability to grasp and manipulate objects has typically required a slew of embedded sensors and intricate programming. The new approach developed by MIT scientists aims to simplify that process considerably. “This work points to a shift from programming robots to teaching robots,” Sizhe Lester Li, the lead researcher on the project, told MIT News. The vision-based system has been tested on various robotic forms, including a soft robotic hand and a 3D-printed arm, learning to control them with a surprising degree of precision.

What makes this leap fascinating is how it could democratize robotics. Traditional robotics has leaned heavily on rigid structures, but NJF embraces soft, more biologically inspired robots that don’t fit neatly into conventional modeling. Beyond easing the design and construction of robots, the vision-only approach could also let robots work in dynamic, unpredictable environments, such as construction sites or farms, without the usual array of sophisticated sensors.

“Robotics today often feels out of reach because of costly sensors and complex programming. Our goal with Neural Jacobian Fields is to lower the barrier, making robotics affordable, adaptable, and accessible to more people,” Vincent Sitzmann, the project’s senior author, explained to MIT News. The approach marks a significant step away from hard-coded control: robots adapt and respond through visual feedback alone, which could open up applications where conventional model-based methods have struggled.

The findings are detailed in an open-access paper published in Nature on June 25. They suggest that ordinary people could one day instruct their robots simply by showing them what’s needed, rather than programming them. At present, NJF still requires a multi-camera setup to train each robot, but the direction is promising: the researchers envision a time when someone could use a smartphone to teach a robot new tricks on the fly. The system has other current limitations, such as a lack of force or tactile sensing, and the CSAIL team is already working to address them.

The implications for both commercial and personal robotics could be substantial, as visual learning simplifies the integration of robots into daily life. As the researchers continue to refine NJF, the gap between expensive, sensor-laden robots and low-cost machines capable of handling real-world tasks could gradually narrow. The full findings are available in the CSAIL team’s open-access Nature paper.