Researchers from Stanford University today published an update to their Foundation Model Transparency Index, which looks at the transparency of popular generative artificial intelligence models such as OpenAI’s GPT family, Google LLC’s Gemini models and Meta Platforms Inc.’s Llama series.

The FMTI, which was first published in October, is designed to assess the transparency of some of the most widely used foundational large language models, or LLMs. The aim is to increase accountability, address the societal impact of generative AI, and encourage developers to be more transparent about how their models are trained and the way they operate.

Created by Stanford’s Human-Centered Artificial Intelligence research group, Stanford HAI, the FMTI incorporates a wide range of metrics that consider how much developers disclose about their models, plus information on how people are using their systems.

The initial findings were somewhat negative, illustrating how most foundational LLMs are shrouded in secrecy, including the open-source ones. That said, open-source models such as Meta’s Llama 2 and BigScience’s BloomZ were notably more transparent than their closed-source peers, such as OpenAI’s GPT-4 Turbo.

Improved transparency of LLMs

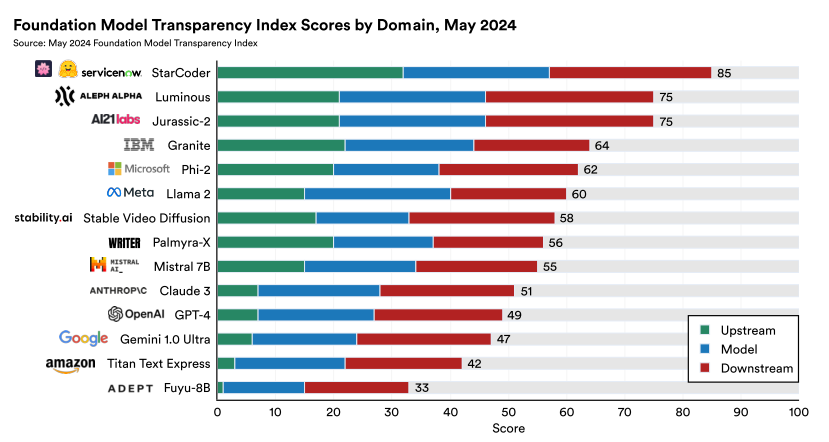

For the latest FMTI, Stanford HAI’s team of researchers evaluated 14 major foundation model developers, including OpenAI, Google, Meta, Anthropic PBC, AI21 Labs Inc., IBM Corp., Mistral and Stability AI Ltd., using 100 transparency indicators. The findings were somewhat better this time around, with the researchers saying they were pleased to see a significant improvement in the level of transparency around AI models since last year.

The FMTI gives each LLM a rating of between 0 and 100, with more points equating to more transparency. Overall, the LLMs’ transparency scores were much improved from six months ago, with an average gain of 21 points across the 14 models evaluated, HAI said. In addition, the researchers noted some considerable improvements from specific companies, with AI21 Labs increasing its transparency score by 50 points, followed by Hugging Face Inc. and Amazon.com, whose scores rose by 32 points and 29 points, respectively.

A long way to go

On the downside, the authors said the gap between open- and closed-source models remains more or less the same. Whereas the median open model scored 59 points, the median closed-source model scored 53.5 points. According to the researchers, these findings suggest that the open-source AI model development process is inherently more transparent than the closed-source one, though it does not necessarily imply more transparency in terms of training data, compute or usage.

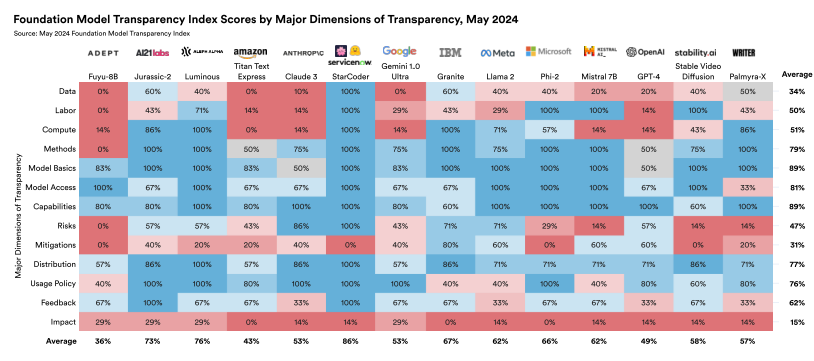

The researchers were also somewhat disappointed to see that there was little progress made in terms of transparency regarding the data that fuels LLMs, or their real-world impact. Specifically, AI developers continue to keep their cards close to their chest with regard to the copyrighted data they use to train their models, who has access to that data, and how effective their AI guardrails are. Moreover, few developers were willing to share what they know in terms of the downstream impact of their LLMs, such as how people are using them and where those users are located.

Moving forward

That said, Stanford HAI’s team is encouraged, not only by the progress made so far, but also by the willingness of AI developers to engage with its researchers. It reported that a number of LLM developers have even been influenced by the FMTI, which has caused them to reflect on their internal practices.

Given that the average transparency score of 58 is still somewhat low, Stanford HAI concluded that there’s still lots of room for improvement. Moreover, it urged AI developers to disclose more, saying transparency around AI is essential not only for public accountability and effective governance, but also for scientific innovation.

Looking forward, Stanford HAI said it’s asking AI developers to publish their own transparency reports for each major foundation model they release, in line with voluntary codes of conduct recommended by the U.S. government and G7 organizations.

It also had some advice for policymakers, saying its report can help to illustrate where policy intervention might help to increase AI transparency.

Featured image: SiliconANGLE/Microsoft Designer; others: Stanford HAI
