AutoSteer, a modular inference-time intervention technology, enhances the safety of Multimodal Large Language Models by reducing attack success rates for textual, visual, and cross-modal threats without fine-tuning.

Recent progress in Multimodal Large Language Models (MLLMs) has unlocked

powerful cross-modal reasoning abilities, but also raised new safety concerns,

particularly when faced with adversarial multimodal inputs. To improve the

safety of MLLMs during inference, we introduce a modular and adaptive

inference-time intervention technology, AutoSteer, without requiring any

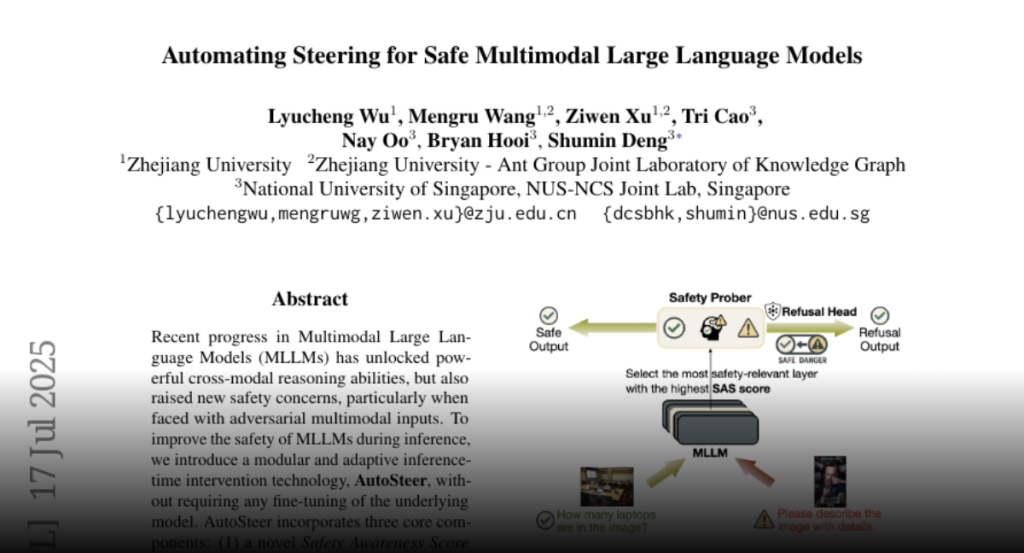

fine-tuning of the underlying model. AutoSteer incorporates three core

components: (1) a novel Safety Awareness Score (SAS) that automatically

identifies the most safety-relevant distinctions among the model’s internal

layers; (2) an adaptive safety prober trained to estimate the likelihood of

toxic outputs from intermediate representations; and (3) a lightweight Refusal

Head that selectively intervenes to modulate generation when safety risks are

detected. Experiments on LLaVA-OV and Chameleon across diverse safety-critical

benchmarks demonstrate that AutoSteer significantly reduces the Attack Success

Rate (ASR) for textual, visual, and cross-modal threats, while maintaining

general abilities. These findings position AutoSteer as a practical,

interpretable, and effective framework for safer deployment of multimodal AI

systems.
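The gating idea behind components (2) and (3) can be illustrated with a minimal sketch. This is not the authors' implementation: the linear prober, the fixed threshold, the probability-weighted blending rule, and every name below (`probe_toxicity`, `steer_logits`, `refusal_logits`) are assumptions made for illustration only. Selecting the layer with the SAS is left out, and the hidden state is assumed to come from a layer that has already been chosen.

```python
import math


def sigmoid(x):
    """Standard logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def probe_toxicity(hidden, weights, bias):
    """Hypothetical linear prober: maps one intermediate representation
    to an estimated probability that the output will be toxic."""
    score = sum(h * w for h, w in zip(hidden, weights)) + bias
    return sigmoid(score)


def steer_logits(logits, refusal_logits, p_toxic, threshold=0.5):
    """Hypothetical gated intervention (an assumed rule, not the paper's).

    Below the threshold the original logits pass through unchanged.
    At or above it, the logits are blended with those of a refusal head,
    weighted by the estimated toxicity probability.
    """
    if p_toxic < threshold:
        return list(logits)
    return [(1.0 - p_toxic) * l + p_toxic * r
            for l, r in zip(logits, refusal_logits)]


# Toy usage with made-up numbers: a two-dimensional hidden state and a
# two-token vocabulary (index 0 = "comply", index 1 = "refuse").
hidden_state = [2.0, 1.0]
p = probe_toxicity(hidden_state, weights=[1.0, 1.0], bias=-1.0)
steered = steer_logits([1.0, 0.0], [0.0, 4.0], p)
```

Gating on the prober output means benign inputs leave the base model's distribution untouched, which is one plausible way to reduce harmful outputs while preserving general abilities, as the abstract describes.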