A benchmark evaluates multimodal models’ ability to interpret scientific schematic diagrams and answer related questions, revealing performance gaps and insights for improvement.

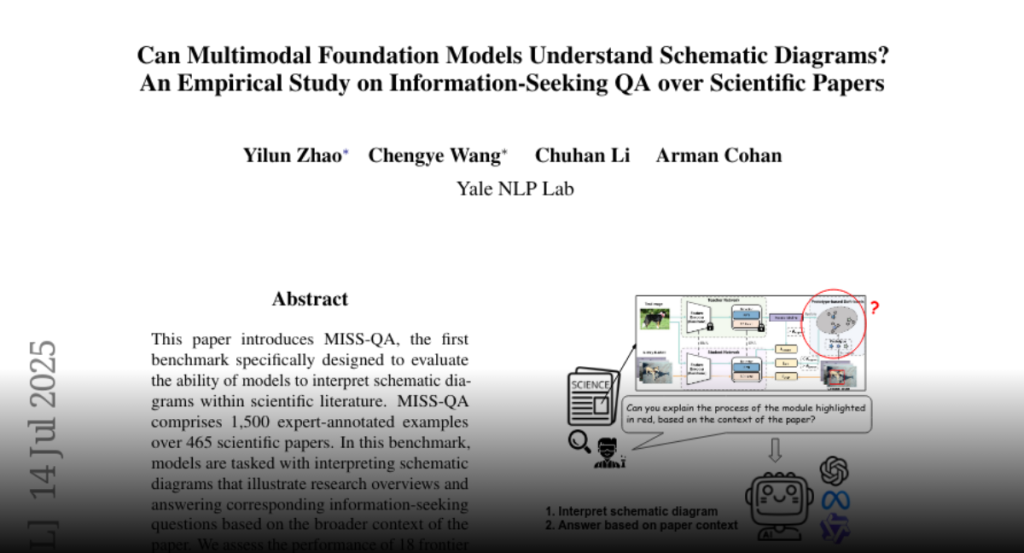

This paper introduces MISS-QA, the first benchmark specifically designed to evaluate the ability of models to interpret schematic diagrams within scientific literature. MISS-QA comprises 1,500 expert-annotated examples over 465 scientific papers. In this benchmark, models are tasked with interpreting schematic diagrams that illustrate research overviews and answering corresponding information-seeking questions based on the broader context of the paper. We assess the performance of 18 frontier multimodal foundation models, including o4-mini, Gemini-2.5-Flash, and Qwen2.5-VL. We reveal a significant performance gap between these models and human experts on MISS-QA. Our analysis of model performance on unanswerable questions and our detailed error analysis further highlight the strengths and limitations of current models, offering key insights to enhance models in comprehending multimodal scientific literature.