Fraud detection remains a significant challenge in the financial industry, requiring advanced machine learning (ML) techniques to detect fraudulent patterns while maintaining compliance with strict privacy regulations. Traditional ML models often rely on centralized data aggregation, which raises concerns about data security and regulatory constraints.

Fraud cost businesses over $485.6 billion in 2023 alone, according to Nasdaq’s Global Financial Crime Report, with financial institutions under pressure to keep up with evolving threats. Traditional fraud models often rely on isolated data, leading to overfitting and poor real-world performance. Data privacy laws like GDPR and CCPA further limit collaboration. With federated learning using Amazon SageMaker AI, organizations can jointly train models without sharing raw data, boosting accuracy while maintaining compliance.

In this post, we explore how SageMaker and federated learning help financial institutions build scalable, privacy-first fraud detection systems.

Federated learning with the Flower framework on SageMaker AI

With federated learning, multiple institutions can train a shared model while keeping their data decentralized, addressing privacy and security concerns in fraud detection. A key advantage of this approach is that it mitigates the risk of overfitting by learning from a wider distribution of fraud patterns across various datasets. It allows financial institutions to collaborate while maintaining strict data privacy, making sure that no single entity has access to another’s raw data. This not only improves fraud detection accuracy but also adheres to industry regulations and compliance requirements.

Popular frameworks for federated learning include Flower, PySyft, TensorFlow Federated (TFF), and FedML. Among these, Flower stands out for being framework-agnostic, which allows it to integrate with a wide range of tools such as PyTorch, TensorFlow, Hugging Face, and scikit-learn.

Although SageMaker is powerful for centralized ML workflows, Flower is purpose-built for decentralized model training, enabling secure collaboration across data silos without exposing raw data. When deployed on SageMaker, Flower takes advantage of the cloud system’s scalability and automation while enabling flexible, privacy-preserving federated learning workflows. This combination improves time to production, reduces engineering complexity, and supports strict data governance, making it highly suitable for cross-institutional or regulated environments.

Generating synthetic data with Synthetic Data Vault

To strengthen fraud detection while preserving data privacy, organizations can use the Synthetic Data Vault (SDV), a Python library that generates realistic synthetic datasets reflecting real-world patterns. Teams can use SDV to simulate diverse fraud scenarios without exposing sensitive information, helping federated learning models generalize better and detect subtle, evolving fraud tactics. It also helps address data imbalance by amplifying underrepresented fraud cases, improving model accuracy and robustness.

Beyond data generation, SDV captures complex statistical relationships and accelerates model development by reducing dependence on expensive, hard-to-obtain labeled data. In our approach, synthetic data is used primarily as a validation dataset, supporting privacy and consistency across environments, and training datasets can be real or synthetic depending on audit and compliance requirements. This flexibility supports privacy-by-design principles while maintaining adaptability in regulated environments.

A fair evaluation approach for federated learning models

A critical aspect of federated learning is facilitating a fair and unbiased evaluation of trained models. To achieve this, organizations must adopt a structured dataset strategy. As illustrated in the following figure, Dataset A and Dataset B are used as separate training datasets, with each participating institution contributing distinct datasets that capture different fraud patterns. Instead of evaluating the model using only one dataset, a combined dataset of A and B is used for evaluation. This makes sure that the model is tested on a more comprehensive distribution of real-world fraud cases, helping reduce bias and improve fairness in assessment.

By adopting this evaluation method, organizations can validate the model’s ability to generalize across different data distributions. This approach makes sure fraud detection models aren’t overly reliant on a single institution’s data, improving robustness against evolving fraud tactics. Standard evaluation metrics such as precision, recall, F1-score, and AUC-ROC are used to measure model performance. In the insurance sector, particular attention is given to false negatives—cases where fraudulent claims are missed—because these directly translate to financial losses. Minimizing false negatives is critical to protect against undetected fraud, while also making sure the model performs consistently and fairly across diverse datasets in a federated learning environment.
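The evaluation strategy described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used in the solution: the function names (`fraud_metrics`, `evaluate_on_combined`), the `(features, label)` record layout, and the threshold rule in the usage example are all assumptions made for this sketch. It computes precision, recall, and F1-score on the combined A and B dataset and reports the false-negative count separately; AUC-ROC is omitted because it requires scores rather than hard predictions.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical record layout: (feature vector, label), where label 1 means fraud.
Example = Tuple[Sequence[float], int]


def fraud_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Compute precision, recall, F1 and the raw false-negative count."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # missed fraud cases
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1,
            "false_negatives": fn}


def evaluate_on_combined(predict_fn: Callable[[Sequence[float]], int],
                         dataset_a: Sequence[Example],
                         dataset_b: Sequence[Example]) -> Dict[str, float]:
    """Score one model on the union of both institutions' evaluation data."""
    combined: List[Example] = list(dataset_a) + list(dataset_b)
    y_true = [label for _, label in combined]
    y_pred = [predict_fn(features) for features, _ in combined]
    return fraud_metrics(y_true, y_pred)


if __name__ == "__main__":
    # Toy data and a toy threshold rule standing in for a trained model.
    dataset_a = [([0.9], 1), ([0.2], 0), ([0.4], 1)]
    dataset_b = [([0.7], 1), ([0.6], 0), ([0.1], 0)]
    print(evaluate_on_combined(lambda x: int(x[0] >= 0.5), dataset_a, dataset_b))
```

Reporting the false-negative count next to the ratio metrics keeps missed fraud visible even when precision and recall look acceptable in aggregate.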

Solution overview

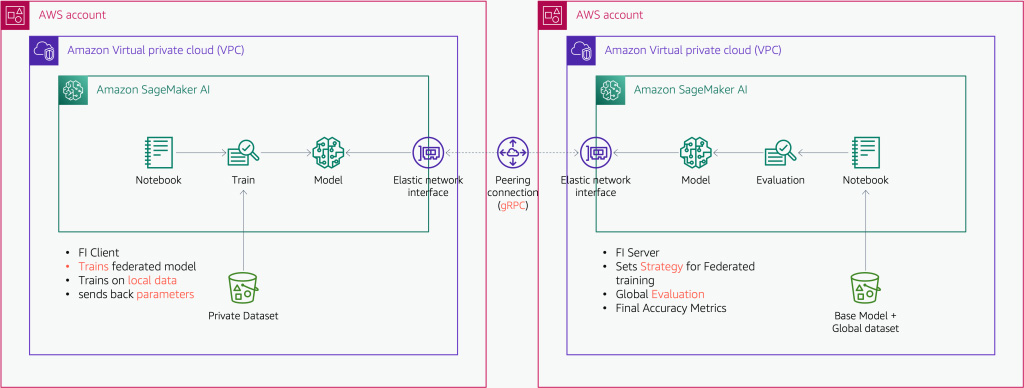

The following diagram illustrates how we implemented this approach across two AWS accounts using SageMaker AI and cross-account virtual private cloud (VPC) peering.

Flower supports a wide range of ML frameworks, including PyTorch, TensorFlow, Hugging Face, JAX, Pandas, fast.ai, PyTorch Lightning, MXNet, scikit-learn, and XGBoost. When deploying federated learning on SageMaker, Flower enables a distributed setup where multiple institutions can collaboratively train models while keeping data private. Each participant trains a local model on its own dataset and shares only model updates—not raw data—with a central server. SageMaker orchestrates the complete training, validation, and evaluation process securely and efficiently. The final model remains consistent with the original framework, making it deployable to a SageMaker endpoint using its supported framework container.
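To make the exchange of model updates concrete, the following is a minimal, framework-free sketch of federated averaging (FedAvg), a common aggregation strategy in federated learning. It does not use the Flower API and is not the code from this solution. The logistic-regression model, the function names (`local_update`, `federated_average`, `run_rounds`), and the learning-rate and round settings are all assumptions chosen for illustration. Each simulated client trains on its own data and returns only weights and an example count; the server never sees the raw records.

```python
import math
from typing import List, Sequence, Tuple

Weights = List[float]
# Hypothetical record layout: (feature vector, label), where label 1 means fraud.
Example = Tuple[Sequence[float], int]


def predict(weights: Weights, features: Sequence[float]) -> float:
    """Logistic-regression probability; the last weight is the bias term."""
    z = weights[-1] + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def local_update(weights: Weights, data: Sequence[Example],
                 lr: float = 0.5, epochs: int = 20) -> Weights:
    """Train on one institution's private data and return only the new weights."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for features, label in data:
            err = predict(w, features) - label
            for i, x in enumerate(features):
                grad[i] += err * x
            grad[-1] += err  # gradient of the bias term
        w = [wi - lr * g / len(data) for wi, g in zip(w, grad)]
    return w


def federated_average(updates: Sequence[Tuple[Weights, int]]) -> Weights:
    """Server side: average client weights, weighted by each client's example count."""
    total = sum(n for _, n in updates)
    size = len(updates[0][0])
    return [sum(w[i] * n for w, n in updates) / total for i in range(size)]


def run_rounds(clients: Sequence[Sequence[Example]], num_features: int,
               rounds: int = 10) -> Weights:
    """Repeat: broadcast global weights, train locally, aggregate on the server."""
    global_w = [0.0] * (num_features + 1)
    for _ in range(rounds):
        updates = [(local_update(global_w, data), len(data)) for data in clients]
        global_w = federated_average(updates)
    return global_w


if __name__ == "__main__":
    institution_a = [([0.0], 0), ([1.0], 1)]
    institution_b = [([0.1], 0), ([0.9], 1), ([0.8], 1)]
    print(run_rounds([institution_a, institution_b], num_features=1))
```

Weighting by example count means an institution with more data has proportionally more influence on the global model. In a real deployment the framework's built-in strategies would handle this step, along with communication and client selection.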

To facilitate a smooth and scalable implementation, SageMaker AI provides built-in features for model orchestration, hyperparameter tuning, and automated monitoring. Institutions can continuously improve their models based on the latest fraud patterns without requiring manual updates. Additionally, integrating SageMaker AI with AWS services such as AWS Identity and Access Management (IAM) enhances security and compliance.

For more information, refer to the Flower Federated Learning Workshop, which provides detailed guidance on setting up and running federated learning workloads effectively. By integrating federated learning, synthetic data generation, and structured evaluation strategies, you can develop robust fraud detection systems that are both scalable and privacy-preserving.

Results and key takeaways

The implementation of federated learning for fraud detection has demonstrated significant improvements in model performance and fraud detection accuracy. By training on diverse datasets, the model captures a broader range of fraud patterns, helping reduce bias and overfitting. The incorporation of SDV-generated datasets facilitates a well-rounded training process, improving generalization to real-world fraud scenarios. The federated learning framework on SageMaker enables organizations to scale their fraud detection models while maintaining compliance with data privacy regulations.

Through this approach, organizations have observed a reduction in false positives, helping fraud analysts focus on high-risk transactions more effectively. The ability to train models on a wider range of fraud patterns across multiple institutions has led to a more comprehensive and accurate fraud detection system. Future optimizations might include refining synthetic data techniques and expanding federated learning participation to further enhance fraud detection capabilities.

Conclusion

The Flower framework provides a scalable, privacy-preserving approach to fraud detection by using federated learning on SageMaker AI. By combining decentralized training, synthetic data generation, and fair evaluation strategies, financial institutions can enhance model accuracy while maintaining compliance with regulations. Shin Kong Financial Holding and Shin Kong Life successfully adopted this approach, as highlighted in their official blog post. This methodology sets a new standard for financial security applications, paving the way for broader adoption of federated learning.

Although using Flower on SageMaker for federated learning offers strong privacy and scalability benefits, there are some limitations to consider. Technically, managing heterogeneity across clients (such as different data schemas, compute capacities, or model architectures) can be complex. From a use case perspective, federated learning might not be ideal for scenarios requiring real-time inference or highly synchronous updates, and it depends on stable connectivity across participating nodes. To address these challenges, organizations are exploring the use of high-quality synthetic datasets that preserve data distributions while protecting privacy, improving model generalization and robustness. Next steps include experimenting with these datasets, using the Flower Federated Learning Workshop for hands-on guidance, reviewing the system architecture for deeper understanding, and engaging with the AWS account team to tailor and scale your federated learning solution.

About the Authors

Ray Wang is a Senior Solutions Architect at AWS. With 12 years of experience in backend development and consulting, Ray is dedicated to building modern solutions in the cloud, especially in NoSQL, big data, machine learning, and generative AI. As a hungry go-getter, he earned all 12 AWS certifications to increase the breadth and depth of his technical knowledge. He loves to read and watch sci-fi movies in his spare time.

Kanwaljit Khurmi is a Principal Solutions Architect at Amazon Web Services. He works with AWS customers to provide guidance and technical assistance, helping them improve the value of their solutions when using AWS. Kanwaljit specializes in helping customers with containerized and machine learning applications.

James Chan is a Solutions Architect at AWS specializing in the Financial Services Industry (FSI). With extensive experience in the financial services, fintech, and manufacturing sectors, James helps FSI customers at AWS innovate and build scalable cloud solutions and financial system architectures. James specializes in AWS container, network architecture, and generative AI solutions that combine cloud-native technologies with strict financial compliance requirements.

Mike Xu is an Associate Solutions Architect specializing in AI/ML at Amazon Web Services. He works with customers to design machine learning solutions using services like Amazon SageMaker and Amazon Bedrock. With a background in computer engineering and a passion for generative AI, Mike focuses on helping organizations accelerate their AI/ML journey in the cloud. Outside of work, he enjoys producing electronic music and exploring emerging tech.
