Late last month, IBM issued a white paper (Framework for Quantum Advantage) describing the expectations and ingredients it considers necessary for achieving quantum advantage (QA).

IBM argues, “As quantum computing approaches the threshold where certain tasks demonstrably outpace their classical machines, the need for a precise, clear, consensus-driven definition of quantum advantage becomes essential. Rapid progress in the field has blurred this term across companies, architectures, and application domains. Here, we aim to articulate an operational definition for quantum advantage that is both platform-agnostic and empirically verifiable. Building on this framework, we highlight the algorithmic families most likely to achieve early advantage.”

It’s an interesting proposition. Some would argue that pinning down QA’s ingredients may be superfluous, since any achievement of QA will reflect a varying mix of factors (cost, performance, accuracy, access, etc.), and that mix won’t be identical for every application. Instead, the best measure will be outperforming classical computing on the same application. In other words, results will define QA, not a specific prescription.

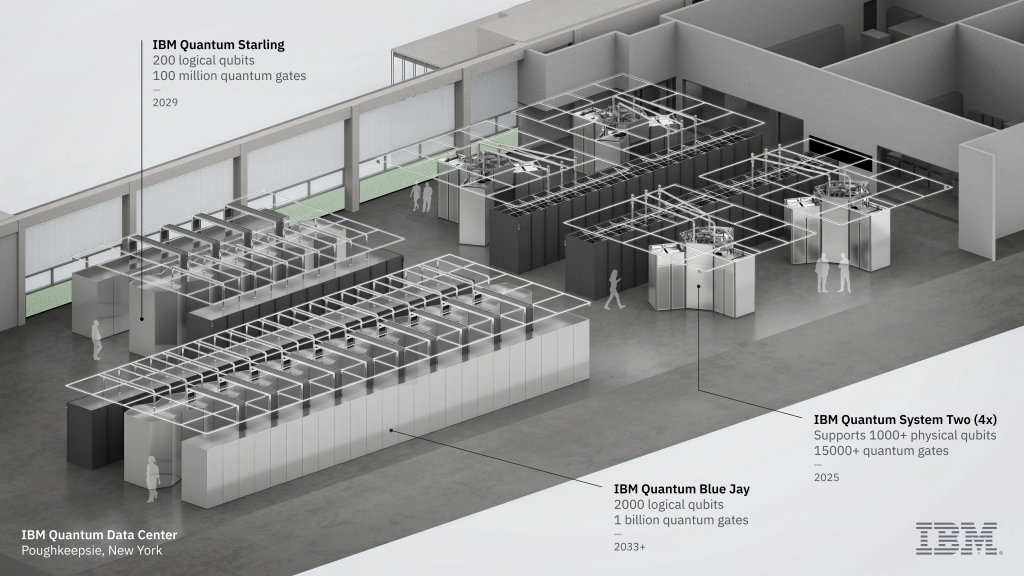

That said, the IBM paper does a nice job framing the QA concept and its elements. It covers problem areas likely suited to QA, needed operational characteristics such as error correction and error mitigation, and promising platform types, including non-IBM approaches, which it describes briefly. (For a more complete look at IBM’s quantum strategy, see HPCwire coverage, IBM Sets 2029 Target for Fault-Tolerant Quantum Computing.)

“We outline the general definition of quantum advantage and the criteria that are necessary to claim it, as well as the necessary elements to achieve it: methods of running accurate quantum circuits, HPC + quantum infrastructure, and performant quantum hardware. It is also crucial to focus our attention on algorithms that we know can be run reliably and have verifiably correct outputs, thus, we also outline the collection of algorithms that fit this description,” write IBM researchers.

IBM says three key families of “computational problems currently stand out as promising avenues for realizing quantum advantage: i) problems that are solved via sampling algorithms, ii) problems that are verifiable via the variational principle, and iii) problems whose solution reduces to calculating expectation values of observables.” The researchers look at each family in some detail, noting strengths and advances.

The latest IBM piece is an interesting addition to the QA conversation and, broadly speaking, a short read. The conclusion is worth reading in full:

“We maintain that credible evidence of quantum advantage is likely to emerge in one of the areas highlighted here within the next two years, contingent on sustained coordination between the high-performance-computing and quantum computing communities, and most likely in the areas we have highlighted here.

“Once these claims begin to emerge, they must be assessed on a common basis according to the two criteria articulated in this article. Furthermore, quantum advantage is unlikely to materialize as a single, definitive milestone. Instead, it should be understood as a sequence of increasingly robust demonstrations. Any claim of quantum advantage should be framed as a falsifiable scientific hypothesis, one that invites scrutiny, reproduction, and challenge from the broader community. A cautious iterative and interpretive stance is therefore essential until state-of-the-art classical methods have had adequate opportunity to challenge the claim. However, should a classical approach later supersede a previously reported quantum advantage benchmark, the outcome does not signify failure; rather, it exemplifies constructive behavior that drives progress in both classical and quantum computation.

“Finally, we envision that the following best practices will be necessary to underpin credible advantage claims: (i) definition of standardized benchmark problems jointly with classical experts to ensure relevance and fairness, (ii) publication of detailed methodologies and datasets, sufficient to enable reproducibility of the benchmarks, and (iii) maintenance of an open-access leaderboards to track evolving performance. These practices will help ensure that quantum advantage claims are not only credible, but also constructive for the broader computational sciences.”

No doubt there will be a flurry of QA claims in the coming years. Being able to assess them quickly and accurately should ease quantum computing’s transition to a practical tool and increase user confidence. Having a checklist of QA attributes to review can’t hurt, but one expects bottom-line performance versus a classical system to quickly become the real arbiter.

Link to IBM paper: https://arxiv.org/pdf/2506.20658