Editor’s Note: While ISC is traditionally a high performance computing conference, this year’s opening keynote made it clear that AI is now central to the future of scientific discovery. In this recap from John Russell at our sister publication HPCwire, you’ll hear directly from AMD and Oak Ridge leaders about how AI and HPC are converging to enable breakthrough research, from quantum chemistry to next-generation aircraft engines, and why energy efficiency, memory bandwidth, and architectural flexibility will shape what’s possible in the future. For those following the infrastructure demands and scientific potential of AI, this keynote offers a compelling window into what’s coming. — Jaime Hampton, Managing Editor, AIwire.

The sprawling opening keynote at ISC2025, delivered by Mark Papermaster, CTO of AMD, and Scott Atchley, CTO of the National Center for Computational Science and Oak Ridge Leadership Computing Facility (OLCF), painted a wonderful picture of the power of HPC-AI technology to enable science, and offered a fearsome reminder of the coming energy consumption crunch that supporting these achievements and our future aspirations will bring.

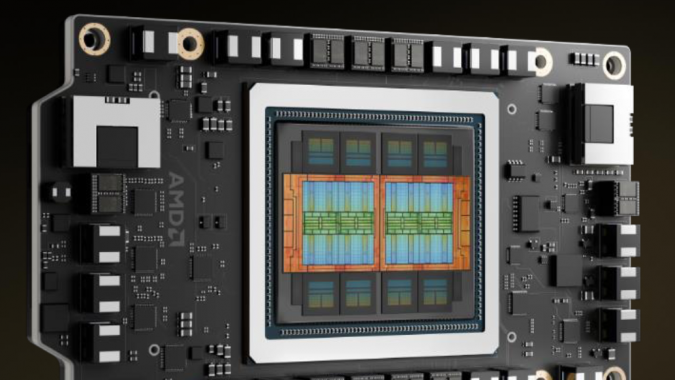

It was a rare twin peek at advanced HPC-AI technology and its fruit. Papermaster, not surprisingly, highlighted the success of AMD’s CPU-GPU pairings in the current exascale systems (El Capitan and Frontier), and teased the audience, noting AMD would introduce its latest Instinct GPU – the MI350 series – later in the week.

“I’ve been involved in this technology development for decades, but I think you would have to agree with me that AI is truly a transformative technology, a transformative application, and it builds on the technology of HPC and of science that this community has been driving for decades,” Papermaster told the audience. “These computing approaches are absolutely intertwined, and we’re just starting to see the way that AI and its new applications can change the things we do, actually change most everything that we do.”

Few would argue the point.

While Papermaster’s talk exuded optimism, he acknowledged the slowing of Moore’s law and argued a holistic approach to design was needed to maintain progress.

In his presentation, Atchley provided a glimpse into the U.S. Department of Energy lab organization and a snapshot of OLCF, and singled out four examples of science enabled by exascale computing (Frontier). He looked at computational work on developing fuel-efficient jet engines; retro-propulsion systems for Mars missions; simulations paving the way to turn diamonds into BC8, the hardest form of carbon; and quantum chemistry calculations for use in drug design. The last is from a 2024 Gordon Bell Prize winner.

He also noted how DOE is already heavily using AI and argued that the convergence in scientific computing will only grow, citing efforts that link to quantum computing resources.

“I’ll tell you a little bit about OLCF and Frontier, some of the science we’re doing on Frontier, and then what do we see coming after that? Oak Ridge National Lab is one of 17 national labs in the US. On the computing side, there are two different branches. There’s the NNSA (National Nuclear Security Administration) that do stockpile stewardship, and then there’s the open science side, and that’s where we reside. We’re in the National Center for Computational Science, and the Oak Ridge Leadership Computing Facility runs these large systems on behalf of the Department of Energy,” Atchley said.

“For OLCF, we have over 2000 users. They come from 25 countries around the world, over 300 institutions. In the last five years, we’ve had over 175 industrial partner projects. About half of our users are academia, about 40% come from the US federal government, and about 10% from industry. We have three large allocation programs. These are very competitive, the largest of which is INCITE, and that allocates about 60% of our cycles. It is a leadership program, which means that in order to get time to this program, you have to be able to use at least 20% of the system, but ideally the whole system,” said Atchley.

ISC recorded the keynote and will maintain access to the recording through the end of the year. (Link to recording).

Let’s start with Papermaster’s comments. He covered a wide swath of technology issues (chip design, flexible use of mixed-precision math, the need for openness and standards), but his main themes were increased efficiency, particularly energy efficiency, holistic design, and the need to develop new energy sources. Bravely (perhaps), Papermaster even had a slide mentioning zettascale, though that target still seems distant.

On chip design, he noted AMD’s first product to come off TSMC’s 2nm manufacturing process. “It’s a CPU product, it’s a gate all around technology, [and] drives excellent efficiency of computing per watt of energy, but these new nodes are much more expensive, and they take longer to manufacture. So, you know, again, we need to continue the kind of packaging innovation that we have had, and again, continue to lean in an extensive hardware/software co-optimization,” he said.

Calling it domain-specific computation, Papermaster emphasized the need to embrace a variety of mixed-precision execution.

He said, “Obviously, the IEEE floating points are incredibly capable [and] it has the accuracy we need. It has many, many software libraries that we’ve developed over the years. And it’s not going away. But you see in the upper right (slide above), it demands more bits. So [for] every computation you have more bits that you are processing, and if you look at the lower curve, it has lower efficiency than if we could go with reduced precision.” There’s a pressing need to find areas to apply reduced precision. “It drives dramatic compute productivity increase, and it does so at a much higher energy efficiency.”
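To make the bits-versus-accuracy trade-off concrete, here is a minimal sketch that stores one value in three IEEE 754 formats and reports the storage width and the rounding error of each. It is an illustration only, not anything shown in the keynote; the helper names `round_trip` and `precision_table` are hypothetical, and it relies only on Python's standard `struct` module.

```python
import struct

# struct format codes for IEEE 754 binary16, binary32 and binary64 (little-endian)
FORMATS = {"FP16": "<e", "FP32": "<f", "FP64": "<d"}


def round_trip(value: float, fmt: str) -> float:
    """Store value in the given format and read it back as a Python float."""
    return struct.unpack(fmt, struct.pack(fmt, value))[0]


def precision_table(value: float) -> dict:
    """Return {format name: (bits, stored value, relative error)} for value."""
    table = {}
    for name, fmt in FORMATS.items():
        stored = round_trip(value, fmt)
        bits = struct.calcsize(fmt) * 8
        rel_err = abs(stored - value) / abs(value)
        table[name] = (bits, stored, rel_err)
    return table


if __name__ == "__main__":
    for name, (bits, stored, rel_err) in precision_table(0.1).items():
        print(f"{name}: {bits} bits, stored={stored!r}, relative error={rel_err:.2e}")
```

Each step down halves the number of bits moved and processed per value, at the cost of a larger rounding error. FP64 round-trips with zero error here only because Python's own `float` is already a 64-bit value.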

Papermaster noted increasing GPU use puts an added burden on memory and emphasizes the need for improved locality, though just what that means isn’t always clear.

“If you think about the GPU portion of our computation, the parallel computation that we drive across HPC and AI, we really are needing to double the GPU flop performance every two years, and that, in turn, must be supported by a doubling of memory as well as network bandwidth. Network bandwidth, as you see at the curve at the upper right of this chart,” he said.

“What’s the effect of that? What it’s driving is an increase in the high bandwidth memory that we need to bring in very close to that GPU, and that, in turn, drives higher HBM stacks. It drives more IO to be able to connect that. It’s actually creating the larger module size. And as you see in the lower right, as we go to larger and larger module size, it’s driving the converse of what we need, it’s driving much higher power to support our computation need. And so we really have to strive to get more locality of our computation,” said Papermaster.
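The doubling cadence in the quote above compounds quickly. As a rough sketch, assuming smooth exponential growth with a fixed two-year doubling period (the function name `required_growth` is hypothetical), the implied multiplier over a given horizon is:

```python
def required_growth(years: float, doubling_period_years: float = 2.0) -> float:
    """Growth factor implied by one doubling every doubling_period_years."""
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    for horizon in (2, 4, 6, 10):
        print(f"{horizon} years: {required_growth(horizon):.0f}x")
```

Over a decade that cadence implies a 32-fold increase in GPU flops, with memory and network bandwidth expected to keep pace.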

As you go from the lowest level of integration all the way to rack scale, there’s a vast difference in the energy, measured in joules, expended per bit transferred. “It’s 4000 times greater by the time you reach rack scale than it is if you had the locality of that bit being transferred from a register file right to the cache adjacent to it,” he said.
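A back-of-the-envelope sketch shows why that ratio makes locality so important. It uses the 4000x figure from the keynote and a deliberately simplified two-tier model, which is an assumption made here for illustration: every bit either stays local (relative cost 1) or crosses the rack (relative cost 4000). Real systems have many intermediate levels, and the names below are hypothetical.

```python
RACK_SCALE_COST = 4000.0  # relative energy per bit at rack scale (figure quoted in the keynote)
LOCAL_COST = 1.0          # register file to adjacent cache, used as the baseline (assumed unit)


def average_relative_energy(remote_fraction: float) -> float:
    """Average energy per bit, relative to a local transfer, in a two-tier model."""
    if not 0.0 <= remote_fraction <= 1.0:
        raise ValueError("remote_fraction must be between 0 and 1")
    return (1.0 - remote_fraction) * LOCAL_COST + remote_fraction * RACK_SCALE_COST


if __name__ == "__main__":
    for fraction in (0.0, 0.001, 0.01, 0.1):
        avg = average_relative_energy(fraction)
        print(f"{fraction:.1%} of bits at rack scale: {avg:.2f}x the local cost")
```

Under this toy model, sending just 1% of bits across the rack raises the average energy per bit roughly 41-fold, which is why keeping data close to the compute pays off so heavily.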

The need to drive efficiency to help control spiraling energy consumption was hammered throughout Papermaster’s comments. It’s worth it to watch the full video.

Turning to Atchley’s comments, he showcased advanced HPC-AI done on Frontier as proof points of the kinds of difficult problems leadership systems can tackle.

One example was from GE Aerospace, on a partner project with Safran Aircraft Engines in France. The goal is to build an engine that is 20% more fuel efficient. It turns out that engines get more efficient by becoming wider. If you look back at photos of commercial jets in the 60s, the engines were very narrow and very long. But if you flew here (to ISC2025) and looked at the engine on your plane, what you would notice is that it’s very wide but very short. As the engines get wider, they get more efficient, but at a certain point the gains from making the engine wider are lost because of the extra drag on the shell around the engine.

“The way to get around that is to get rid of the shell,” noted Atchley. “This is not a new idea. This idea has been known for 50 years. The problem is noise. If you were to fly on that plane with these engines, when you got to your destination, you would be deaf. The problem is, how do we get rid of the noise? And noise is turbulence, and turbulence is very difficult to model. They’ve been working on this since Titan. So Mark mentioned our Titan system, which was the first one with GPUs. At scale, it was almost 19,000 nodes with the Kepler GPUs. They worked on this, and then on our next system Summit, which also had NVIDIA GPUs with the Voltas, they made progress, but they still couldn’t understand what was going on.”

“With Frontier now there is enough memory and enough resolution, enough performance for them to understand what’s going on with these vortices, and now they can start to make design changes to reduce that noise. GE has told us that this work on Frontier has accelerated their timeline by many years. They’re now working on, how do they model the whole engine? This engine is so large it will not fit in any wind tunnel on Earth, and so the only way they can simulate this is in silico, and they’re doing that on Frontier.”

While that example showcased a high-precision, compute-intensive workload, Atchley stressed that OLCF and DOE have long been building AI capability.

“So we’re very engaged in AI for science. Last year at Supercomputing [SC24], three of the Gordon Bell finalists used lower precision on Frontier. Two of them used AI. One of them just used lower precision to get speed-up, and then that first one was the special prize winner. I mention this because it’s not just flops, like on mod-sim, it’s not just high precision flops. You need memory capacity. You need memory bandwidth. Scale-up bandwidth and scale-out bandwidth,” he said.

So what comes next?

“What are we looking at beyond Frontier, beyond exascale? Well, modeling and simulation is not going away. We can expect this community to continue to grow; they need bandwidth. They also need support for FP64. If you don’t have data to train with, you need to generate that data, and you need to use FP64 to do that. AI for science is of huge importance right now within the National Lab community. Again, you need bandwidth everywhere, from the processors out through the scale-out network. You need lots of low precision flops.

“You also need a storage system that can cater to the AI workload. It’s more read-heavy, small, random IO versus the large write patterns like we have with mod-sim. We want the system to be able to connect to quantum computers, so that you can do hybrid classical quantum computing,” noted Atchley.

Both presentations were rich, as was the Q&A. Link to video.

Top Image: Picture of AMD’s MI350 series GPU shown by Papermaster

This article first appeared on HPCwire.