A novel benchmark and dataset are proposed for multi-modal summarization of UI instructional videos, addressing the need for step-by-step executable instructions and key video frames.

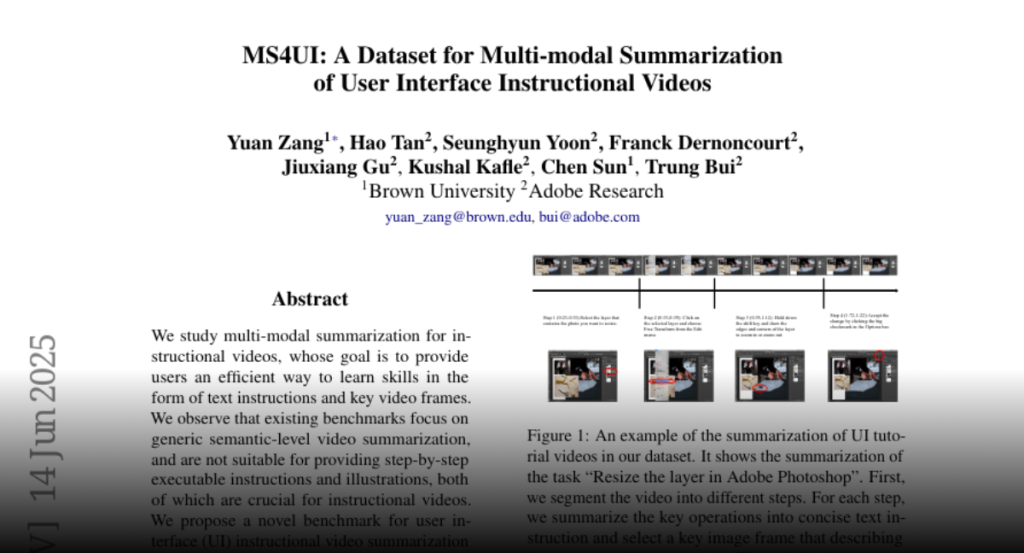

We study multi-modal summarization for instructional videos, whose goal is to provide users with an efficient way to learn skills in the form of text instructions and key video frames. We observe that existing benchmarks focus on generic semantic-level video summarization and are not suitable for providing step-by-step executable instructions and illustrations, both of which are crucial for instructional videos. To fill this gap, we propose a novel benchmark for user interface (UI) instructional video summarization. We collect a dataset of 2,413 UI instructional videos spanning over 167 hours. These videos are manually annotated for video segmentation, text summarization, and video summarization, enabling comprehensive evaluation of concise and executable video summarization. Extensive experiments on our collected MS4UI dataset suggest that state-of-the-art multi-modal summarization methods struggle on UI video summarization, highlighting the need for new methods tailored to UI instructional videos.
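The abstract names three annotation layers per video: segmentation into steps, a text instruction per step, and a key frame per step. A minimal, hypothetical sketch of how one annotated video could be represented follows; the class names (`Step`, `AnnotatedVideo`), field names, and the consistency check are illustrative assumptions, not the released MS4UI format.

```python
from dataclasses import dataclass, field
from typing import List


# Hypothetical schema: field names are assumptions, not the MS4UI release format.
@dataclass
class Step:
    """One annotated step: a video segment, its instruction, and a key frame."""
    start_sec: float      # segment start (video segmentation layer)
    end_sec: float        # segment end (video segmentation layer)
    instruction: str      # executable text instruction (text summarization layer)
    key_frame_sec: float  # timestamp of the illustrating frame (video summarization layer)


@dataclass
class AnnotatedVideo:
    video_id: str
    duration_sec: float
    steps: List[Step] = field(default_factory=list)

    def is_consistent(self) -> bool:
        """True if segments are ordered, non-overlapping, inside the video,
        and each key frame falls within its own segment."""
        prev_end = 0.0
        for s in self.steps:
            if not (prev_end <= s.start_sec < s.end_sec <= self.duration_sec):
                return False
            if not (s.start_sec <= s.key_frame_sec <= s.end_sec):
                return False
            prev_end = s.end_sec
        return True

    def summary(self) -> List[str]:
        """Render the multi-modal summary as numbered steps with key-frame times."""
        return [
            f"{i}. {s.instruction} [frame @ {s.key_frame_sec:.1f}s]"
            for i, s in enumerate(self.steps, start=1)
        ]
```

For example, `AnnotatedVideo("demo", 60.0, [Step(0, 20, "Open the Settings menu.", 12.0), Step(20, 45, "Click Display.", 30.5)]).summary()` yields one line per step, pairing each executable instruction with its key-frame timestamp, which mirrors the text-plus-frame summary format the benchmark targets.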