A framework called Micro-Act addresses Knowledge Conflicts in Retrieval-Augmented Generation by adaptively decomposing knowledge sources, leading to improved QA accuracy compared to existing methods.

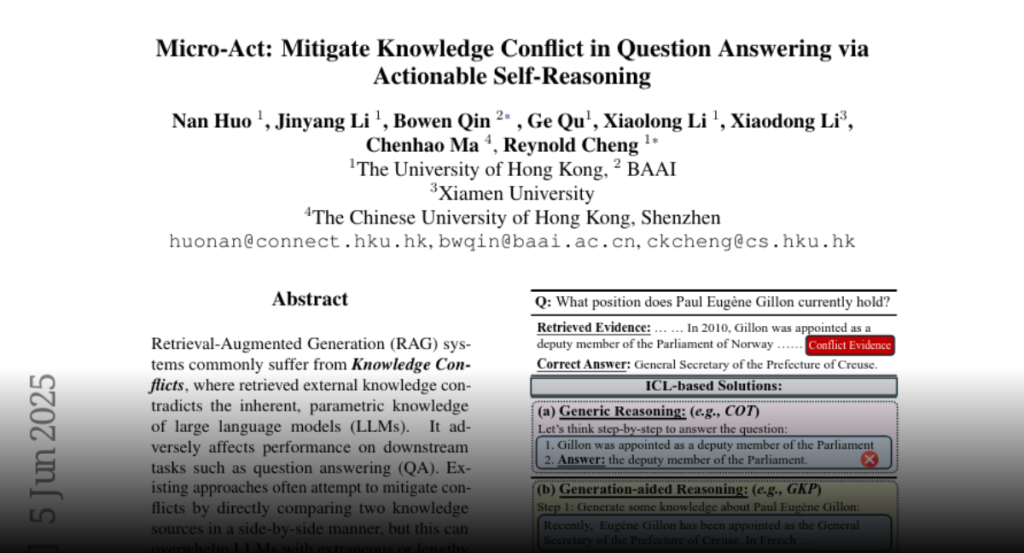

Retrieval-Augmented Generation (RAG) systems commonly suffer from Knowledge Conflicts, where retrieved external knowledge contradicts the inherent, parametric knowledge of large language models (LLMs). Such conflicts adversely affect performance on downstream tasks such as question answering (QA). Existing approaches often attempt to mitigate conflicts by directly comparing the two knowledge sources side by side, but this can overwhelm LLMs with extraneous or lengthy contexts, ultimately hindering their ability to identify and mitigate inconsistencies. To address this issue, we propose Micro-Act, a framework with a hierarchical action space that automatically perceives context complexity and adaptively decomposes each knowledge source into a sequence of fine-grained comparisons. These comparisons are represented as actionable steps, enabling reasoning beyond the superficial context. In extensive experiments on five benchmark datasets, Micro-Act consistently achieves a significant increase in QA accuracy over state-of-the-art baselines across all five datasets and three conflict types, especially on the temporal and semantic types, where all baselines fail significantly. More importantly, Micro-Act also remains robust on non-conflict questions, highlighting its practical value in real-world RAG applications.
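
The idea of adaptive decomposition can be illustrated with a toy sketch. This is not the Micro-Act implementation: the abstract does not specify the action space, so every name here (`split_in_half`, `adaptive_compare`, `toy_year_conflict`, `max_words`) is hypothetical. The sketch assumes that "context complexity" can be approximated by word count, that decomposition means splitting at sentence boundaries, and that only the retrieved passage is decomposed. A regular-expression check on years stands in for the LLM-based comparison a real system would use.

```python
import re
from typing import Callable, List, Tuple


def split_in_half(text: str) -> List[str]:
    """Naively split text into two halves at sentence boundaries ('. ')."""
    sentences = [s.strip() for s in text.split(". ") if s.strip()]
    if len(sentences) < 2:
        return [text]  # cannot be decomposed any further
    mid = len(sentences) // 2
    return [". ".join(sentences[:mid]), ". ".join(sentences[mid:])]


def adaptive_compare(
    retrieved: str,
    parametric: str,
    compare: Callable[[str, str], bool],
    max_words: int = 12,  # hypothetical complexity threshold
) -> List[Tuple[str, bool]]:
    """Compare directly when the context is short; otherwise decompose it
    and compare each finer-grained piece separately."""
    if len(retrieved.split()) <= max_words:
        return [(retrieved, compare(retrieved, parametric))]
    parts = split_in_half(retrieved)
    if len(parts) == 1:
        return [(retrieved, compare(retrieved, parametric))]
    results: List[Tuple[str, bool]] = []
    for part in parts:
        results.extend(adaptive_compare(part, parametric, compare, max_words))
    return results


def toy_year_conflict(chunk: str, claim: str) -> bool:
    """Stand-in comparator: flag a conflict when both texts mention years
    and share none of them. A real system would query an LLM here."""
    pattern = r"\b(?:19|20)\d{2}\b"
    years_chunk = set(re.findall(pattern, chunk))
    years_claim = set(re.findall(pattern, claim))
    return bool(years_chunk) and bool(years_claim) and years_chunk.isdisjoint(years_claim)


retrieved_passage = (
    "The bridge is located in the northern district of the city. "
    "It spans a wide river and carries road traffic. "
    "The bridge was opened in 1932 after long delays. "
    "It remains a popular landmark for visitors today"
)
parametric_claim = "The bridge opened in 1937"

for chunk, conflict in adaptive_compare(retrieved_passage, parametric_claim, toy_year_conflict):
    print(f"conflict={conflict}: {chunk}")
```

In this toy setting the long passage is split recursively until each piece falls under the threshold, so the conflicting date is isolated in its own short comparison rather than buried in a lengthy side-by-side prompt.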