Hanabi AI, a pioneering voice technology startup, today announced OpenAudio S1, the world’s first AI voice actor and a breakthrough generative voice model that delivers unprecedented real-time emotional and tonal control. Moving beyond the limitations of traditional text-to-speech solutions, OpenAudio S1 creates nuanced, emotionally authentic vocal output that captures the full spectrum of human expression. The OpenAudio S1 model is available in open beta today on fish.audio, for everyone to try for free.

This press release features multimedia. View the full release here: https://www.businesswire.com/news/home/20250603787428/en/

“We believe the future of AI voice-driven storytelling isn’t just about generating speech—it’s about performance,” said Shijia Liao, founder and CEO of Hanabi AI. “With OpenAudio S1, we’re shaping what we see as the next creative frontier: AI voice acting.”

From Synthesized Text-to-Speech Output to AI Voice Performance

At the heart of OpenAudio S1’s innovation is transforming voice from merely a functional tool into a core element of storytelling. Rather than treating speech as a scripted output to synthesize, Hanabi AI views it as a performance to direct—complete with emotional depth, intentional pacing, and expressive nuance. Whether it’s the trembling hesitation of suppressed anxiety before delivering difficult news, or the fragile excitement of an unexpected reunion, OpenAudio S1 allows users to control and fine tune vocal intensity, emotional resonance, and prosody in real time making voice output not just sound realistic, but feel authentically human.

“Voice is one of the most powerful ways to convey emotion, yet it’s the most nuanced, the hardest to replicate, and the key to making machines feel truly human,” Liao emphasized, “But it’s been stuck in a text-to-speech mindset for too long. Ultimately, the difference between machine-generated speech and human speech comes down to emotional authenticity. It’s not just what you say but how you say it. OpenAudio S1 is the first AI speech model that gives creators the power to direct voice acting as if they were working with a real human actor.”

State-of-the-Art Model Meets Controllability and Speed

Hanabi AI fuels creative vision with a robust technical foundation. OpenAudio S1 is powered by an end-to-end architecture with 4 billion parameters, trained extensively on diverse text and audio datasets. This advanced setup empowers S1 to capture emotional nuance and vocal subtleties with remarkable accuracy. Fully integrated into the fish.audio platform, S1 is accessible to a broad range of users—from creators generating long-form content in minutes to creative professionals fine-tuning every vocal inflection.

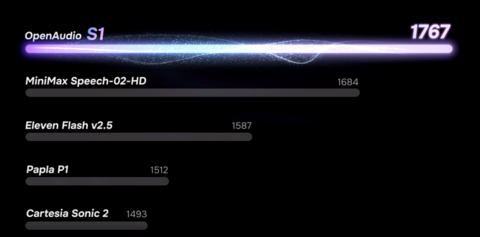

According to third-party benchmarks from Hugging Face’s TTS Arena, OpenAudio S1 demonstrated consistent gains across key benchmarks, outperforming ElevenLabs, OpenAI, and Cartesia in key areas:

Expressiveness – S1 delivers more nuanced emotional expression and tonal variation, handling subtleties like sarcasm, joy, sadness, and fear with cinematic depth, unlike the limited emotional scope of current competing models.

Ultra-low latency – S1 offers sub-100ms latency, making it ideal for real-time applications like gaming, voice assistants, and live content creation where immediate response time is crucial. Competitors, like Cartesia and OpenAI, still experience higher latency, resulting in a less natural, more robotic response in real-time interactive settings.

Real-time fine-grained controllability – With S1, users can adjust tone, pitch, emotion, and pace in real time, using not only simple prompts such as (angry) or (voice quivering), but also a diverse range of more nuanced or creative instructions such as (confident but hiding fear) or (whispering with urgency). This allows for incredibly flexible and expressive voice generation tailored to a wide range of contexts and characters.

State-of-the-art voice cloning – Accurately replicates a speaker’s rhythm, pacing, and timbre.

Multilingual, multi-speaker fluency – S1 seamlessly performs across 11 languages, excelling at handling multi-speaker environments (such as dialogues with multiple characters) in multilingual contexts, supporting seamless transitions between different languages without losing tonal consistency.

Pioneering Research Vision For the Future

OpenAudio S1 is just the first chapter. Hanabi’s long-term mission is to build a true AI companion that doesn’t just process information but connects with human emotion, intent, and presence. While many voice models today produce clear speech they still fall short of true emotional depth, and struggle to support the kind of trust, warmth, and natural interaction required of an AI companion. Instead of treating voice as an output layer, Hanabi treats it as the emotional core of the AI experience, because for an AI companion to feel natural, its voice must convey real feeling and connection.

To bring this vision to life, Hanabi advances both research and product in parallel. The company operates through two complementary divisions: OpenAudio, Hanabi’s internal research lab, focuses on developing breakthrough voice models and advancing emotional nuance, real-time control, and speech fidelity. Meanwhile, Fish Audio serves as Hanabi’s product arm, delivering a portfolio of accessible applications that bring these technological advancements directly to consumers.

Looking ahead, the company plans to progressively release core parts of OpenAudio’s architecture, training pipeline, and inference stack to the public.

Real-World Impact with Scalable Innovation

With a four-people Gen Z founding team, the company scaled its annualized revenue from $400,000 to over $5 million between January and April 2025, while growing its MAU from 50,000 to 420,000 through Fish Audio’s early products—including real-time performance tools and long-form audio generation. This traction reflects the team’s ability to turn cutting-edge innovation into product experiences that resonate with a fast-growing creative community.

The founder & CEO, Shijia Liao, has spent over seven years in the field and been active in open-source AI development. Prior to Fish Audio, he led or participated in the development of several widely adopted speech and singing voice synthesis models—including So-VITS-SVC, GPT-SoVITS, Bert-VITS2, and Fish Speech—which remain influential in the research and creative coding communities today. That open-source foundation built both the technical core and the community trust that now powers fish.audio’s early commercial momentum.

For a deeper dive into the research and philosophy behind OpenAudio S1, check out our launch blog post here: https://openaudio.com/blogs/s1

Pricing & Availability

Premium Membership (unlimited generation on Fish Audio Playground):

– $15 per month

– $120 per year

API: $15 per million UTF-8 bytes (approximately 20 hours of audio)

About Hanabi AI

Hanabi AI Inc. is pioneering the era of the AI Voice Actor—speech that you can direct as easily as video, shaping every inflection, pause, and emotion in real time. Built on our open-source roots, the Fish Audio platform gives filmmakers, streamers, and everyday creators frame-perfect control over how their stories sound.

View source version on businesswire.com: https://www.businesswire.com/news/home/20250603787428/en/