The work introduces a dataset and model for generating high-quality, multi-layer transparent images using diffusion models and a novel synthesis pipeline.

Generating high-quality, multi-layer transparent images from text prompts can

unlock a new level of creative control, allowing users to edit each layer as

effortlessly as editing text outputs from LLMs. However, the development of

multi-layer generative models lags behind that of conventional text-to-image

models due to the absence of a large, high-quality corpus of multi-layer

transparent data. In this paper, we address this fundamental challenge by: (i)

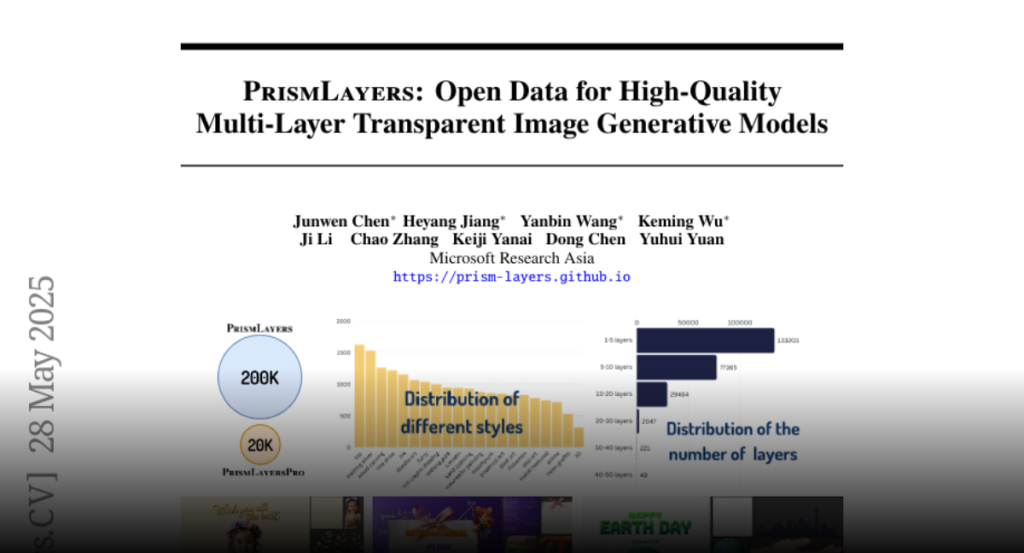

releasing the first open, ultra-high-fidelity PrismLayers (PrismLayersPro)

dataset of 200K (20K) multilayer transparent images with accurate alpha mattes,

(ii) introducing a trainingfree synthesis pipeline that generates such data on

demand using off-the-shelf diffusion models, and (iii) delivering a strong,

open-source multi-layer generation model, ART+, which matches the aesthetics of

modern text-to-image generation models. The key technical contributions

include: LayerFLUX, which excels at generating high-quality single transparent

layers with accurate alpha mattes, and MultiLayerFLUX, which composes multiple

LayerFLUX outputs into complete images, guided by human-annotated semantic

layout. To ensure higher quality, we apply a rigorous filtering stage to remove

artifacts and semantic mismatches, followed by human selection. Fine-tuning the

state-of-the-art ART model on our synthetic PrismLayersPro yields ART+, which

outperforms the original ART in 60% of head-to-head user study comparisons and

even matches the visual quality of images generated by the FLUX.1-[dev] model.

We anticipate that our work will establish a solid dataset foundation for the

multi-layer transparent image generation task, enabling research and

applications that require precise, editable, and visually compelling layered

imagery.
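To make the notion of composing transparent layers concrete, the sketch below stacks RGBA layers with the standard "over" alpha-compositing operator. It is an illustration only and not the MultiLayerFLUX implementation: the function name `composite_layers`, the float array layout, and the back-to-front ordering are assumptions introduced here, and layout-guided placement of each layer is omitted.

```python
import numpy as np

def composite_layers(layers):
    """Blend RGBA layers, ordered back to front, with the 'over' operator.

    Hypothetical helper for illustration. Each layer is a float array of
    shape (H, W, 4) with values in [0, 1] and straight (non-premultiplied)
    alpha. Returns one RGBA array of the same shape.
    """
    if not layers:
        raise ValueError("need at least one layer")
    h, w, _ = layers[0].shape
    out_rgb = np.zeros((h, w, 3))  # premultiplied colour accumulator
    out_a = np.zeros((h, w, 1))    # accumulated alpha
    for layer in layers:
        rgb, a = layer[..., :3], layer[..., 3:4]
        # 'over': the new layer covers a fraction `a` of what lies beneath it
        out_rgb = rgb * a + out_rgb * (1.0 - a)
        out_a = a + out_a * (1.0 - a)
    # Convert back to straight alpha, avoiding division by zero
    safe_a = np.where(out_a > 0, out_a, 1.0)
    return np.concatenate([out_rgb / safe_a, out_a], axis=-1)

# Opaque red background with a half-transparent blue layer on top
background = np.zeros((2, 2, 4)); background[..., 0] = 1.0; background[..., 3] = 1.0
foreground = np.zeros((2, 2, 4)); foreground[..., 2] = 1.0; foreground[..., 3] = 0.5
image = composite_layers([background, foreground])
```

Because each layer keeps its own alpha matte, any single layer can be replaced or moved and the image recomposed without touching the others, which is the editing property the abstract emphasizes.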