Have you Googled something recently only to be met with a cute little diamond logo above some magically-appearing words? Google’s AI Overview combines Google Gemini’s language models (which generate the responses) with Retrieval-Augmented Generation, which pulls the relevant information.

In theory, it’s made an incredible product, Google’s search engine, even easier and faster to use.

However, because the creation of these summaries is a two-step process, issues can arise when there is a disconnect between the retrieval and the language generation.


While the retrieved information might be accurate, the AI can make erroneous leaps and draw strange conclusions when generating the summary.

That’s led to some famous gaffes, such as when it became the laughing stock of the internet in mid-2024 for recommending glue as a way to make sure cheese wouldn’t slide off your homemade pizza. And we loved the time it described running with scissors as “a cardio exercise that can improve your heart rate and require concentration and focus”.

These prompted Liz Reid, Head of Google Search, to publish an article titled About Last Week, stating these examples “highlighted some specific areas that we needed to improve”. More than that, she diplomatically blamed “nonsensical queries” and “satirical content”.

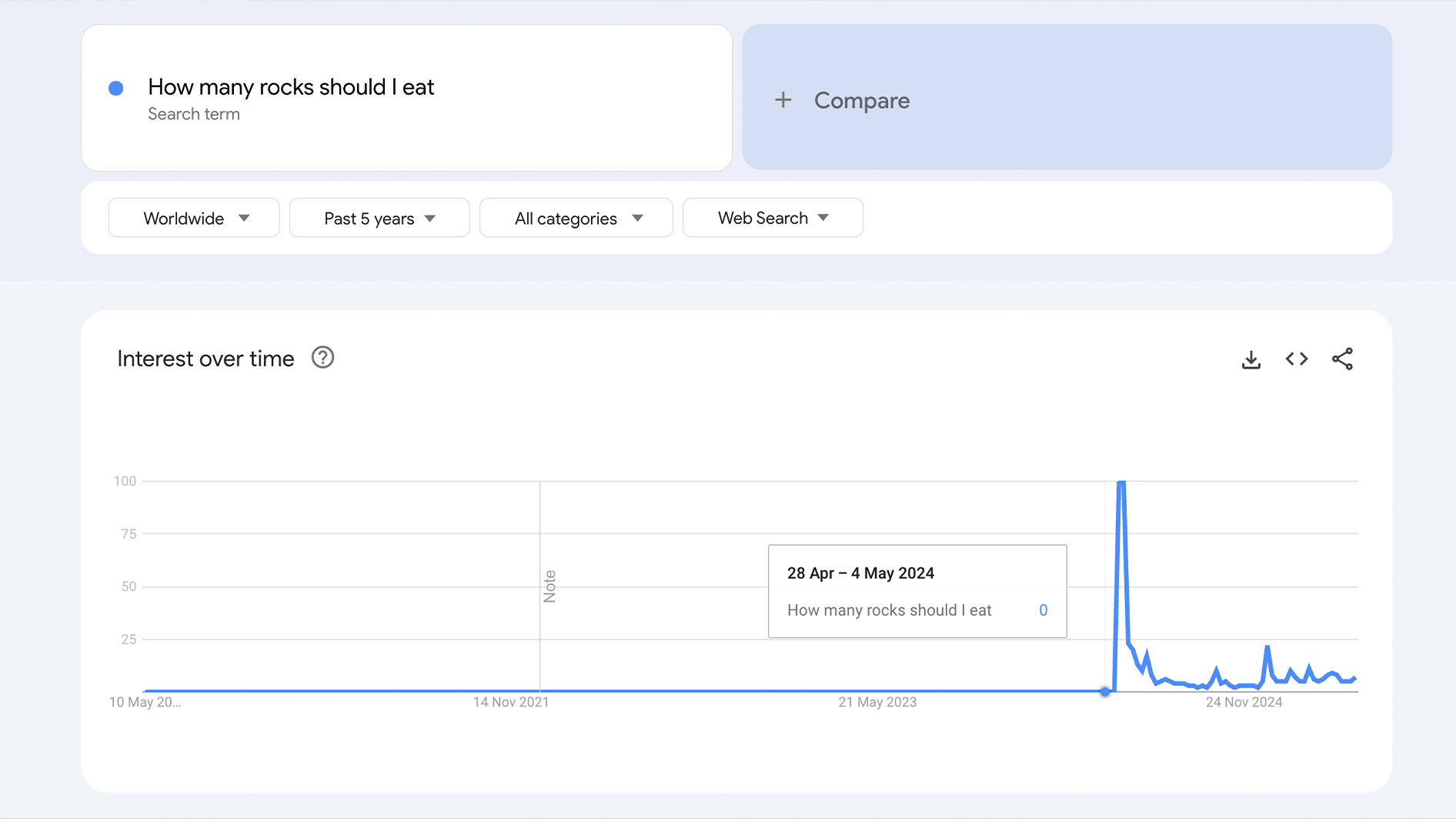

She was at least partly right. Some of the problematic queries were highlighted purely in the interests of making AI look stupid. As you can see below, the query “How many rocks should I eat?” wasn’t a common search before the introduction of AI Overviews, and it hasn’t been since.

However, almost a year on from the pizza-glue fiasco, people are still tricking Google’s AI Overviews into fabricating information or “hallucinating” – the euphemism for AI lies.

Many misleading queries seem to be ignored as of writing, but just last month it was reported by Engadget that the AI Overviews would make up explanations for pretend idioms like “you can’t marry pizza” or “never rub a basset hound’s laptop”.

So, AI is often wrong when you intentionally trick it. Big deal. But, now that it’s being used by billions and includes crowd-sourced medical advice, what happens when a genuine question causes it to hallucinate?

While AI works wonderfully if everyone who uses it examines where it sourced its information from, many people – if not most people – aren’t going to do that.

And therein lies the key problem. As a writer, I already find Overviews inherently a bit annoying because I want to read human-written content. But, even putting my pro-human bias aside, AI becomes seriously problematic if it’s this untrustworthy. And it’s arguably become downright dangerous now that it’s basically ubiquitous when searching, and a certain portion of users are going to take its info at face value.

I mean, years of searching have trained us all to trust the results at the top of the page.

Wait… is that true?

Like many people, I can sometimes struggle with change. I didn’t like it when LeBron went to the Lakers and I stuck with an MP3 player over an iPod for way too long.

However, given it’s now the first thing I see on Google most of the time, Google’s AI Overviews are a little harder to ignore.

I’ve tried using it like Wikipedia – potentially unreliable, but good for reminding me of forgotten info or for learning about the basics of a topic that won’t cause me any agita if it’s not 100% accurate.

Yet, even on seemingly simple queries it can fail spectacularly. As an example, I was watching a movie the other week and this guy really looked like Lin-Manuel Miranda (creator of the musical Hamilton), so I Googled whether he had any brothers.

The AI overview informed me that “Yes, Lin-Manuel Miranda has two younger brothers named Sebastián and Francisco.”

For a few minutes I thought I was a genius at recognising people… until a little bit of further research showed that Sebastián and Francisco are actually Miranda’s two children.

Wanting to give it the benefit of the doubt, I figured that it would have no issue listing quotes from Star Wars to help me think of a headline.

Fortunately, it gave me exactly what I needed: “Hello there!” and “It’s a trap!”, and it even quoted “No, I am your father” as opposed to the too-commonly-repeated “Luke, I am your father”.

Along with these legitimate quotes, however, it claimed Anakin had said “If I go, I go with a bang” before his transformation into Darth Vader.

I was shocked at how it could be so wrong… and then I started second-guessing myself. I gaslit myself into thinking I must be mistaken. I was so unsure that I triple-checked the quote’s existence and shared it with the office – where it was quickly (and correctly) dismissed as another bout of AI lunacy.

This little piece of self-doubt, about something as silly as Star Wars, scared me. What if I had no knowledge about a topic I was asking about?

A study by SE Ranking shows that Google’s AI Overviews avoid (or respond cautiously to) topics of finance, politics, health and law. This means Google knows that its AI isn’t up to the task of more serious queries just yet.

But what happens when Google thinks it’s improved to the point that it can?

It’s the tech… but also how we use it

If everyone using Google could be trusted to double-check the AI results, or click into the source links provided by the overview, its inaccuracies wouldn’t be an issue.

But, as long as there is an easier option – a more frictionless path – people tend to take it.

Despite having more information at our fingertips than at any previous time in human history, in many countries our literacy and numeracy skills are declining. Case in point, a 2022 study found that just 48.5% of Americans report having read at least one book in the previous 12 months.

It’s not the technology itself that’s the issue. As is eloquently argued by Associate Professor Grant Blashki, how we use the technology (and indeed, how we’re steered towards using it) is where problems arise.

For example, an observational study by researchers at Canada’s McGill University found that regular use of GPS can result in worsened spatial memory – and an inability to navigate on your own. I can’t be the only one who’s used Google Maps to get somewhere and had no idea how to get back.

Neuroscience has clearly demonstrated that struggling is good for the brain. Cognitive Load Theory states that your brain needs to think about things to learn. It’s hard to imagine struggling too much when you search a question, read the AI summary and then call it a day.

Make the choice to think

I’m not committing to never using GPS again, but given Google’s AI Overviews are regularly untrustworthy, I would get rid of them if I could. Unfortunately, there’s no way to do that for now.

Even hacks like adding a cuss word to your query no longer work reliably. (And while using the F-word still seems to work most of the time, it also makes for weirder and more, uh, ‘adult-oriented’ search results that you’re probably not looking for.)

Of course, I’ll still use Google – because it’s Google. It’s not going to reverse its AI ambitions anytime soon, and while I could wish for it to restore the option to opt out of AI Overviews, maybe it’s better the devil you know.

Right now, the only true defence against AI misinformation is to make a concerted effort not to use it. Let it take notes of your work meetings or think up some pick-up lines, but when it comes to using it as a source of information, I’ll be scrolling past it and seeking a quality human-authored (or at least checked) article from the top results – as I’ve done for nearly my entire existence.

I mentioned previously that one day these AI tools might genuinely become a reliable source of information. They might even be smart enough to take on politics. But today isn’t that day.

In fact, as reported on May 5 by the New York Times, as Google and ChatGPT’s AI tools become more powerful, they’re also becoming increasingly unreliable – so I’m not sure I’ll ever be trusting them to summarise any political candidate’s policies.

In tests of these ‘reasoning systems’, the highest recorded hallucination rate was a whopping 79%. Amr Awadallah, the chief executive of Vectara – an AI Agent and Assistant platform for enterprises – put it bluntly: “Despite our best efforts, they will always hallucinate.”