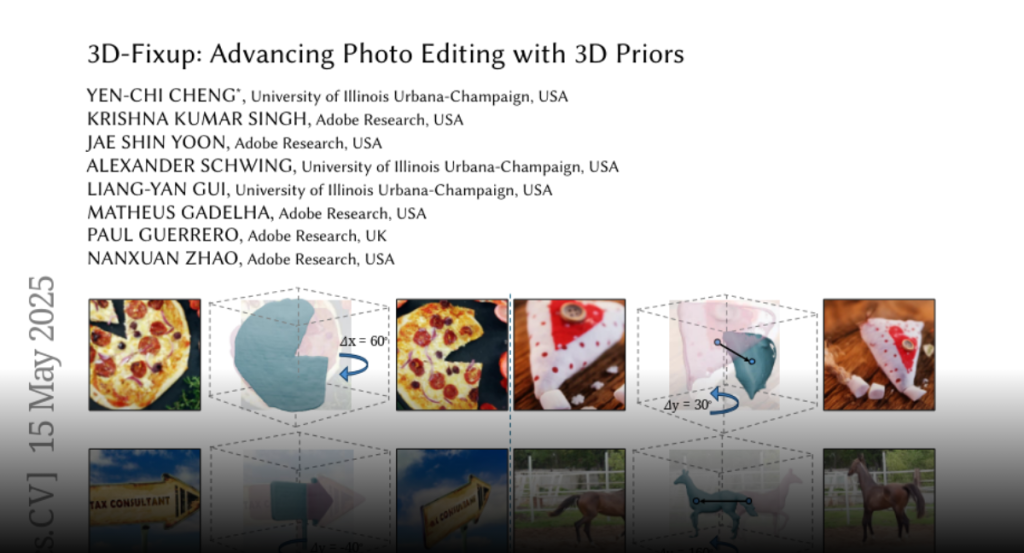

Despite significant advances in modeling image priors via diffusion models, 3D-aware image editing remains challenging, in part because the object is only specified via a single image. To tackle this challenge, we propose 3D-Fixup, a new framework for editing 2D images guided by learned 3D priors. The framework supports difficult editing situations such as object translation and 3D rotation. To achieve this, we leverage a training-based approach that harnesses the generative power of diffusion models. As video data naturally encodes real-world physical dynamics, we turn to video data for generating training data pairs, i.e., a source and a target frame. Rather than relying solely on a single trained model to infer transformations between source and target frames, we incorporate 3D guidance from an Image-to-3D model, which bridges this challenging task by explicitly projecting 2D information into 3D space. We design a data generation pipeline to ensure high-quality 3D guidance throughout training. Results show that by integrating these 3D priors, 3D-Fixup effectively supports complex, identity-coherent 3D-aware edits, achieving high-quality results and advancing the application of diffusion models in realistic image manipulation. The code is provided at https://3dfixup.github.io/