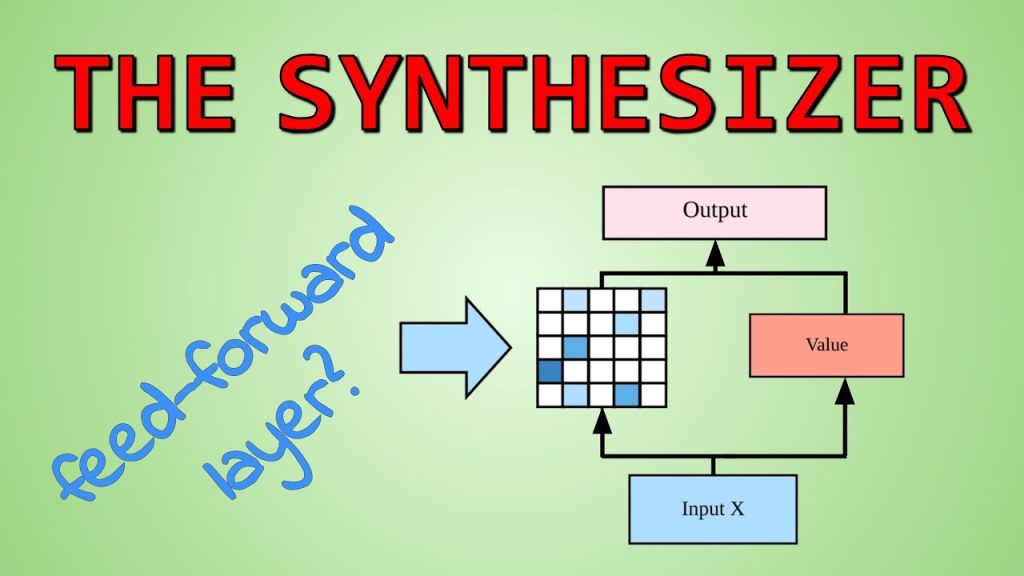

Do we really need dot-product attention? Attention is a central part of modern Transformers, and at its core sits dot-product attention, which scores every pair of tokens against each other. This paper changes the mechanism to remove these quadratic (query-key) interaction terms and comes up with a new model, the Synthesizer. As it turns out, you can do pretty well like that!
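To make "token-token interaction" concrete, here is a minimal pure-Python sketch of standard scaled dot-product attention, softmax(QK^T / sqrt(d)) V. For simplicity it assumes the raw token vectors are used directly as queries, keys and values (no learned projections), and the helper names are hypothetical.

```python
import math


def softmax(row):
    # Numerically stable softmax over one row of scores.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def matmul(a, b):
    # Plain list-of-lists matrix product: (n x k) @ (k x m) -> (n x m).
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(m):
    return [list(col) for col in zip(*m)]


def dot_product_attention(q, k, v):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(q[0])
    # One score per (query token, key token) pair: this is the token-token interaction.
    scores = matmul(q, transpose(k))
    scaled = [[s / math.sqrt(d) for s in row] for row in scores]
    weights = [softmax(row) for row in scaled]
    return weights, matmul(weights, v)


# Toy input: 3 tokens of dimension 2, used directly as Q, K and V.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
weights, out = dot_product_attention(x, x, x)
```

Every entry of `weights` depends on a pair of tokens, so editing token 2 changes how token 0 attends. That pairwise dependence is exactly what the Synthesizer sets out to remove.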

OUTLINE:

0:00 – Intro & High Level Overview

1:00 – Abstract

2:30 – Attention Mechanism as Information Routing

5:45 – Dot Product Attention

8:05 – Dense Synthetic Attention

15:00 – Random Synthetic Attention

17:15 – Comparison to Feed-Forward Layers

22:00 – Factorization & Mixtures

23:10 – Number of Parameters

25:35 – Machine Translation & Language Modeling Experiments

36:15 – Summarization & Dialogue Generation Experiments

37:15 – GLUE & SuperGLUE Experiments

42:00 – Weight Sizes & Number of Head Ablations

47:05 – Conclusion

Paper:

My Video on Transformers (Attention Is All You Need):

My Video on BERT:

Abstract:

The dot product self-attention is known to be central and indispensable to state-of-the-art Transformer models. But is it really required? This paper investigates the true importance and contribution of the dot product-based self-attention mechanism on the performance of Transformer models. Via extensive experiments, we find that (1) random alignment matrices surprisingly perform quite competitively and (2) learning attention weights from token-token (query-key) interactions is not that important after all. To this end, we propose Synthesizer, a model that learns synthetic attention weights without token-token interactions. Our experimental results show that Synthesizer is competitive against vanilla Transformer models across a range of tasks, including MT (EnDe, EnFr), language modeling (LM1B), abstractive summarization (CNN/Dailymail), dialogue generation (PersonaChat) and Multi-task language understanding (GLUE, SuperGLUE).

Authors: Yi Tay, Dara Bahri, Donald Metzler, Da-Cheng Juan, Zhe Zhao, Che Zheng
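The abstract's central idea, attention weights synthesized without any query-key dot products, can be sketched roughly as below. This is a minimal pure-Python illustration under stated assumptions, not the authors' implementation: the Dense variant assumes a per-token two-layer feed-forward network (ReLU) that outputs one row of ℓ attention logits, and the Random variant assumes an ℓ×ℓ logit matrix that ignores the input. The class and helper names, the Gaussian initialization scale and the linear value projection are hypothetical choices made for illustration.

```python
import math
import random


def softmax(row):
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def linear(x, w, b):
    # w has shape (out, in); x is a vector of length in.
    return [sum(wij * xj for wij, xj in zip(wi, x)) + bi for wi, bi in zip(w, b)]


def rand_matrix(rng, rows, cols, scale=0.1):
    # Hypothetical init: i.i.d. Gaussian entries.
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]


def apply_weights(weights, values):
    # weights: (ℓ x ℓ), values: (ℓ x d) -> (ℓ x d)
    d = len(values[0])
    return [[sum(w * values[j][c] for j, w in enumerate(row)) for c in range(d)]
            for row in weights]


class DenseSynthesizer:
    """Each token predicts its own row of ℓ logits; no query-key dot products."""

    def __init__(self, d, seq_len, seed=0):
        rng = random.Random(seed)
        self.w1, self.b1 = rand_matrix(rng, d, d), [0.0] * d
        self.w2, self.b2 = rand_matrix(rng, seq_len, d), [0.0] * seq_len
        self.wg, self.bg = rand_matrix(rng, d, d), [0.0] * d  # value projection

    def __call__(self, x):
        logits = []
        for xi in x:
            h = [max(0.0, v) for v in linear(xi, self.w1, self.b1)]  # ReLU
            logits.append(linear(h, self.w2, self.b2))  # depends on x_i only
        weights = [softmax(row) for row in logits]
        values = [linear(xi, self.wg, self.bg) for xi in x]
        return weights, apply_weights(weights, values)


class RandomSynthesizer:
    """Attention logits are an ℓ x ℓ matrix (trainable or frozen) that ignores the input."""

    def __init__(self, d, seq_len, seed=0):
        rng = random.Random(seed)
        self.r = rand_matrix(rng, seq_len, seq_len, scale=1.0)
        self.wg, self.bg = rand_matrix(rng, d, d), [0.0] * d

    def __call__(self, x):
        weights = [softmax(row) for row in self.r]
        values = [linear(xi, self.wg, self.bg) for xi in x]
        return weights, apply_weights(weights, values)


# Toy input: 4 tokens of dimension 3.
x = [[1.0, 0.0, 2.0], [0.5, -1.0, 0.0], [0.0, 1.0, 1.0], [2.0, 2.0, -1.0]]
dense = DenseSynthesizer(d=3, seq_len=4)
dense_weights, dense_out = dense(x)
random_syn = RandomSynthesizer(d=3, seq_len=4)
random_weights, random_out = random_syn(x)
```

Replacing one token leaves every other row of the Dense weights unchanged, and the Random weights do not depend on the input at all: no row is ever computed from a pair of tokens. One consequence of this design is that both variants fix the sequence length ℓ at construction time, since each logit row has exactly ℓ entries.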
