French AI startup Mistral AI has expanded its model lineup with Mistral Medium 3, a model designed to hit a sweet spot between high-end performance and cost efficiency. Announced on May 7, it arrives alongside the general availability of Le Chat Enterprise, Mistral’s business-focused chatbot platform, which entered private preview earlier this year.

Mistral positions Medium 3 as a new category that balances capability with accessibility. According to Mistral AI, “Mistral Medium 3 introduces a new class of models that balances SOTA performance, 8X lower cost, simpler deployability to accelerate enterprise usage.”

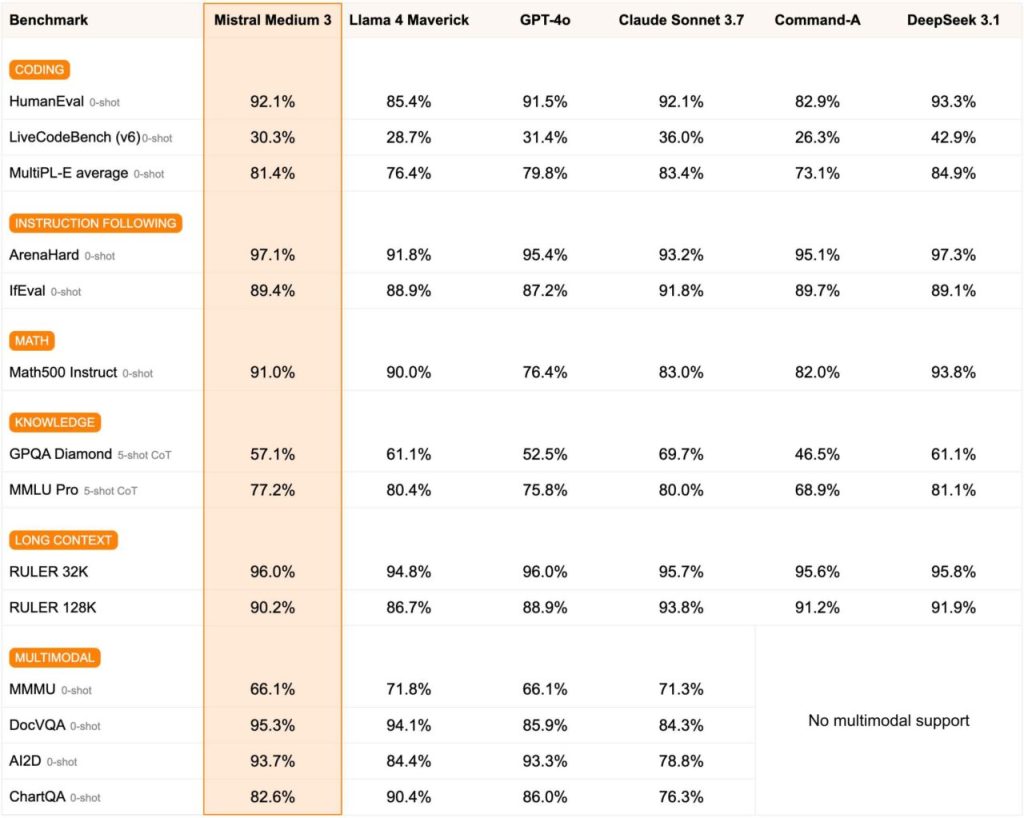

The company claims the model performs at or above 90% of Anthropic’s Claude 3.7 Sonnet across benchmarks, while its API pricing of $0.40 per million input tokens and $2 per million output tokens represents a significant cost reduction compared with that model.
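Because the pricing is quoted per million tokens, comparing the cost of a workload is simple arithmetic. The following Python sketch compares Mistral Medium 3’s announced prices with Claude 3.7 Sonnet, assuming Anthropic’s list price of $3 per million input tokens and $15 per million output tokens at the time of the announcement. The dictionary keys and the workload size are hypothetical labels chosen for illustration, not API model identifiers or real usage data.

```python
# Per-million-token list prices in USD.
# Mistral Medium 3 prices come from the announcement. The Claude 3.7 Sonnet
# prices ($3 input / $15 output) are an assumption based on Anthropic's
# published list pricing and may have changed since.
# Keys are hypothetical labels, not API model identifiers.
PRICES_PER_MILLION = {
    "mistral-medium-3": {"input": 0.40, "output": 2.00},
    "claude-3.7-sonnet": {"input": 3.00, "output": 15.00},
}


def workload_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Return the USD cost of a workload with the given token counts."""
    price = PRICES_PER_MILLION[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1_000_000


if __name__ == "__main__":
    # Hypothetical monthly workload: 50M input tokens, 10M output tokens.
    inp, out = 50_000_000, 10_000_000
    mistral = workload_cost("mistral-medium-3", inp, out)
    claude = workload_cost("claude-3.7-sonnet", inp, out)
    print(f"Mistral Medium 3:  ${mistral:,.2f}")
    print(f"Claude 3.7 Sonnet: ${claude:,.2f}")
    print(f"Ratio: {claude / mistral:.1f}x")
```

At these assumed prices both the input and output rates differ by the same factor (3 / 0.40 = 15 / 2 = 7.5), so the cost ratio is 7.5x for any mix of input and output tokens, roughly in line with Mistral’s “8X lower cost” claim.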

Mistral also asserts that Medium 3 surpasses recent open models such as Meta’s Llama 4 Maverick and enterprise options such as Cohere Command A, while undercutting cost leaders such as DeepSeek v3 on price. “On pricing, the model beats cost leaders such as DeepSeek v3, both in API and self-deployed systems,” Mistral noted in its announcement blog post.

Performance Claims and Deployment Options

According to Mistral’s evaluations, Medium 3 shows particular strength in coding and STEM tasks, approaching the capabilities of larger, slower competitors. The company also highlights its multimodal understanding: “The model leads in professional use cases such as coding and multimodal understanding,” Mistral AI stated. Third-party human evaluations reportedly corroborate its strong coding performance.

Deployment flexibility is a key selling point. Mistral states that Medium 3 can “be deployed on any cloud, including self-hosted environments of four GPUs and above.” This continues the strategy Mistral followed with earlier releases such as Mistral Small 3 and its successor, Small 3.1: building models that run efficiently without massive infrastructure, sometimes even on consumer hardware.

Enterprise Integration and Le Chat Enhancements

Mistral is emphasizing Medium 3’s suitability for business contexts. According to Mistral AI, “The model delivers a range of enterprise capabilities including: Hybrid or on-premises / in-VPC deployment, Custom post-training, Integration into enterprise tools and systems”.

Beyond these deployment options, the model can be adapted through continuous pretraining and fine-tuning, and integrated with enterprise knowledge bases through Mistral’s applied AI solutions. Beta tests are reportedly underway in finance, energy, and healthcare, covering tasks such as contextual customer service and complex data analysis.

Complementing the new model is the general release of Le Chat Enterprise. This platform offers tools like an AI agent builder and integrations with common business software such as Google Drive and SharePoint.

Notably, Mistral confirmed that Le Chat Enterprise will soon support Anthropic’s Model Context Protocol (MCP), an open standard for connecting AI assistants to data systems, following similar commitments from Google and OpenAI. This builds on earlier Le Chat enhancements such as web search and document analysis.

Availability, Context, and Future Hints

Mistral Medium 3 is available now through the company’s La Plateforme API and Amazon SageMaker. Access via Microsoft Azure AI Foundry, Google Cloud Vertex AI, IBM watsonx, and NVIDIA NIM is planned. Users who need customized deployments can contact Mistral directly.

This launch comes as Mistral, founded in 2023 and previously valued at $6 billion, moves toward a potential IPO. The company has raised over $1.1 billion and maintains a stance of independence; CEO Arthur Mensch told Bloomberg, “We are not for sale.” Mistral has steadily released models across different sizes and capabilities, including its OCR API and the Small series.

Looking ahead, Mistral teased another upcoming release, stating, “With the launches of Mistral Small in March and Mistral Medium today, it’s no secret that we’re working on something ‘large’ over the next few weeks,” and adding, “With even our medium-sized model being resoundingly better than flagship open source models such as Llama 4 Maverick, we’re excited to ‘open’ up what’s to come :)”. The remarks suggest a larger model is imminent, one intended to compete further up the capability scale.