Several tech leaders are saying that most coding will be done by AI in a few years, but Google DeepMind has given some context on how that’ll actually happen.

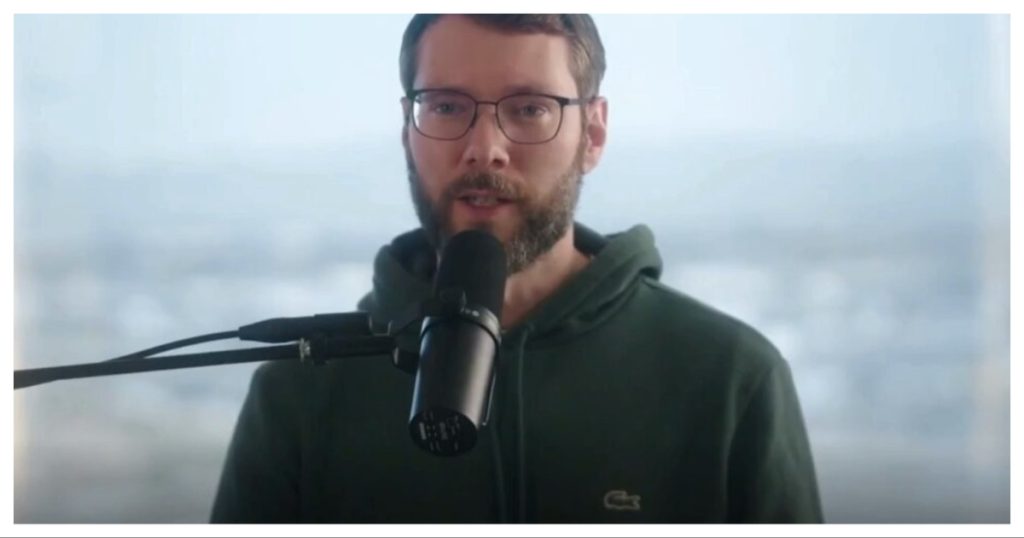

In a discussion, Google DeepMind research scientist Nikolay Savinov has outlined a fascinating roadmap for the future of coding, driven by exponentially increasing context windows in AI models. He predicts a near future with “superhuman coding systems” that will become indispensable tools for every coder, thanks to the advent of massive, readily available context windows. His insights offer a compelling glimpse into a world where AI doesn’t just assist with coding, but fundamentally transforms the process.

Savinov believes that once we achieve near-perfect million-token context windows, it will “unlock totally incredible applications” beyond our current imagination. The context window is the amount of input, measured in tokens, that a model can ingest and process in one go. This initial leap in quality and retrieval capabilities will pave the way for lower costs at longer context lengths. “I think it will take maybe a little bit more time, but it’s going to happen,” he stated.

“As the cost decreases, the longer context also gets unlocked. So, I think reasonably soon we will see the 10 million context window,” Savinov predicted. He envisions this massive context becoming commonplace, a standard offering from AI providers.

“When this happens, that’s going to be a deal-breaker for some applications like coding,” he explained. “Because I think for one or two million, you can only fit small and medium-sized codebases in the context. But 10 million actually unlocks large coding projects to be included in the context completely.”

This, Savinov believes, will lead to the rise of “superhuman coding systems,” which will be “totally unrivaled.” These systems will become the new standard tool for every coder.

Looking even further ahead, Savinov addressed the possibility of 100 million context windows: “Well, it’s more debatable. I think it’s going to happen. I don’t know how soon it’s going to come. And I also think we will probably need more deep learning innovations to achieve this.”

Large context windows are crucial for use cases like coding. If all of an application’s code fits within the context window, the AI system can see the entire codebase at once, including the dependencies among its parts, and can make changes while keeping in mind how those parts work together. A smaller context window, in contrast, means the LLM can take in only part of the code at a time, so a fix or change in one place can break other parts that depend on it. Having to debug that breakage manually can chip away at the advantages of using an AI system in the first place.
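
To make the "does the codebase fit?" question concrete, here is a minimal Python sketch that estimates a codebase's token count and compares it with a context window size. It assumes roughly 4 characters per token, which is only a rule of thumb and not the behavior of any particular model's tokenizer; the function names and the choice of file suffixes are hypothetical, picked for illustration.

```python
from pathlib import Path

# Assumption: about 4 characters per token. This is a rough rule of thumb,
# not a property of any specific model's tokenizer; real counts will differ.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str, chars_per_token: int = CHARS_PER_TOKEN) -> int:
    """Return a rough token estimate for a string (ceiling division)."""
    return -(-len(text) // chars_per_token)


def estimate_codebase_tokens(root: str, suffixes: tuple = (".py",)) -> int:
    """Sum the rough token estimates of all source files under `root`."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            # Ignore undecodable bytes so one odd file does not stop the scan.
            total += estimate_tokens(path.read_text(encoding="utf-8", errors="ignore"))
    return total


def fits_in_context(token_count: int, context_window: int) -> bool:
    """True if the whole codebase could be placed in the context at once."""
    return token_count <= context_window
```

Under these assumptions, a hypothetical project estimated at 3 million tokens would fail `fits_in_context(3_000_000, 1_000_000)` but pass `fits_in_context(3_000_000, 10_000_000)`, which is the gap Savinov describes between today's context lengths and a 10 million token window.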

But if 10-million-token context windows become commonplace (top AI systems currently have context lengths of around 1 million tokens), they would allow AI to comprehend and process the entire codebases of complex software projects. This could revolutionize software development, enabling AI to generate code, debug, refactor, and even design entire systems with minimal human intervention. And it’s perhaps this anticipated increase in context window lengths that’s leading companies ranging from Anthropic to Meta to predict that most coding will be done by AI systems in the not-too-distant future.