Data scaling and standardized evaluation benchmarks have driven significant

advances in natural language processing and computer vision. However, robotics

faces unique challenges in scaling data and establishing evaluation protocols.

Collecting real-world data is resource-intensive and inefficient, while

benchmarking in real-world scenarios remains highly complex. Synthetic data and

simulation offer promising alternatives, yet existing efforts often fall short

in data quality, diversity, and benchmark standardization. To address these

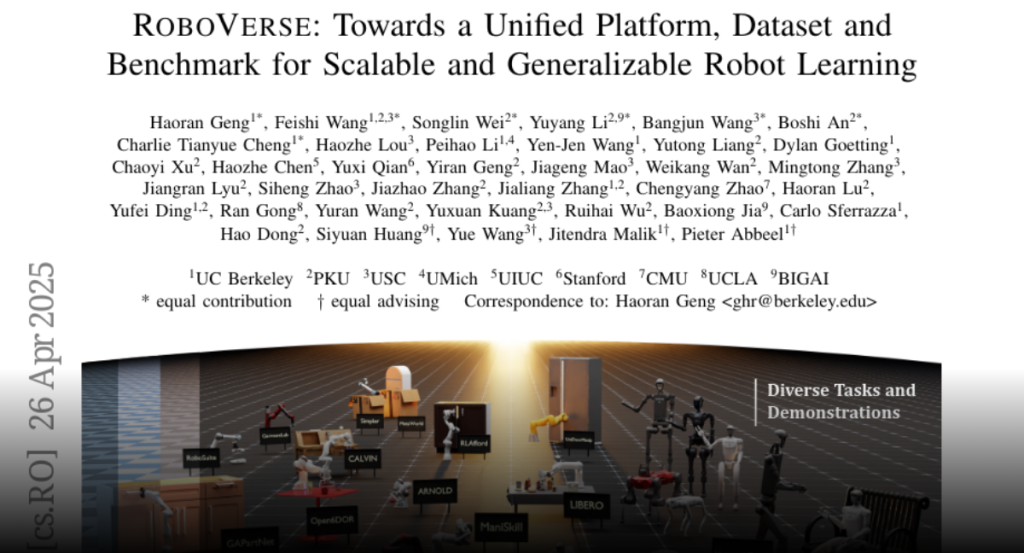

challenges, we introduce RoboVerse, a comprehensive framework comprising a

simulation platform, a synthetic dataset, and unified benchmarks. Our

simulation platform supports multiple simulators and robotic embodiments,

enabling seamless transitions between different environments. The synthetic

dataset, featuring high-fidelity physics and photorealistic rendering, is

constructed through multiple approaches. Additionally, we propose unified

benchmarks for imitation learning and reinforcement learning, enabling

evaluation across different levels of generalization. At the core of the

simulation platform is MetaSim, an infrastructure that abstracts diverse

simulation environments into a universal interface. It restructures existing

simulation environments into a simulator-agnostic configuration system, as well

as an API aligning different simulator functionalities, such as launching

simulation environments, loading assets with initial states, stepping the

physics engine, etc. This abstraction ensures interoperability and

extensibility. Comprehensive experiments demonstrate that RoboVerse enhances

the performance of imitation learning, reinforcement learning, world model

learning, and sim-to-real transfer. These results validate the reliability of

our dataset and benchmarks, establishing RoboVerse as a robust solution for

advancing robot learning.
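To make the idea of a universal interface concrete, the following is a minimal sketch of how a simulator-agnostic configuration and a shared API for launching, loading initial states, and stepping might fit together. It is an illustration only, not MetaSim's actual API: the names `ObjectState`, `ScenarioConfig`, `SimBackend`, `ToyKinematicBackend`, and `rollout` are all hypothetical, and the toy backend stands in for a real physics engine.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ObjectState:
    """Initial state of one asset (hypothetical schema)."""
    position: Vec3 = (0.0, 0.0, 0.0)
    velocity: Vec3 = (0.0, 0.0, 0.0)


@dataclass
class ScenarioConfig:
    """Simulator-agnostic scene description (hypothetical schema)."""
    assets: Dict[str, ObjectState] = field(default_factory=dict)
    dt: float = 0.01  # physics time step in seconds


class SimBackend(ABC):
    """Common interface that each simulator adapter would implement."""

    @abstractmethod
    def launch(self, config: ScenarioConfig) -> None:
        """Start the environment and load assets with their initial states."""

    @abstractmethod
    def step(self) -> None:
        """Advance the physics engine by one time step."""

    @abstractmethod
    def get_states(self) -> Dict[str, ObjectState]:
        """Return the current state of every asset."""


class ToyKinematicBackend(SimBackend):
    """Stand-in backend: integrates constant velocities, no real physics."""

    def launch(self, config: ScenarioConfig) -> None:
        self._dt = config.dt
        # Copy states so the shared config is never mutated by a backend.
        self._states = {
            name: ObjectState(s.position, s.velocity)
            for name, s in config.assets.items()
        }

    def step(self) -> None:
        for s in self._states.values():
            s.position = tuple(
                p + v * self._dt for p, v in zip(s.position, s.velocity)
            )

    def get_states(self) -> Dict[str, ObjectState]:
        return dict(self._states)


def rollout(backend: SimBackend, config: ScenarioConfig, num_steps: int):
    """Run the same scenario on any backend that implements SimBackend."""
    backend.launch(config)
    for _ in range(num_steps):
        backend.step()
    return backend.get_states()
```

Under this sketch, switching simulators would amount to passing a different `SimBackend` subclass to `rollout` while the `ScenarioConfig` stays unchanged, which is the kind of interoperability the abstract describes.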