Can we build accurate world models out of large language models (LLMs)? How

can world models benefit LLM agents? The gap between the prior knowledge of

LLMs and the specified environment’s dynamics usually bottlenecks LLMs’

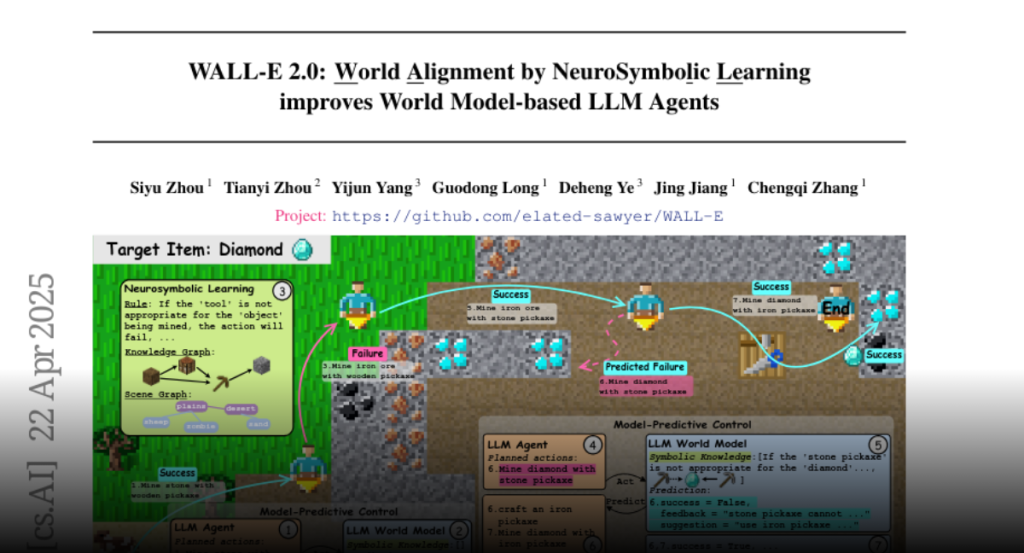

performance as world models. To bridge the gap, we propose a training-free

“world alignment” that learns an environment’s symbolic knowledge complementary

to LLMs. The symbolic knowledge covers action rules, knowledge graphs, and

scene graphs, which are extracted by LLMs from exploration trajectories and

encoded into executable codes to regulate LLM agents’ policies. We further

propose an RL-free, model-based agent “WALL-E 2.0” through the model-predictive

control (MPC) framework. Unlike classical MPC requiring costly optimization on

the fly, we adopt an LLM agent as an efficient look-ahead optimizer of future

steps’ actions by interacting with the neurosymbolic world model. While the LLM

agent’s strong heuristics make it an efficient planner in MPC, the quality of

its planned actions is also secured by the accurate predictions of the aligned

world model. They together considerably improve learning efficiency in a new

environment. On open-world challenges in Mars (Minecraft like) and ALFWorld

(embodied indoor environments), WALL-E 2.0 significantly outperforms existing

methods, e.g., surpassing baselines in Mars by 16.1%-51.6% of success rate and

by at least 61.7% in score. In ALFWorld, it achieves a new record 98% success

rate after only 4 iterations.
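
To make the look-ahead idea concrete, the following is a minimal Python sketch of how an agent might consult a world model before acting. It is an illustration under stated assumptions, not the WALL-E 2.0 implementation: the names `CodeRule`, `WorldModel`, `toy_agent`, and `plan_step`, the toy inventory state, and the single crafting rule are all hypothetical. The LLM agent is replaced by a hand-written stand-in, and the world model here consists only of code rules, whereas the abstract describes a neurosymbolic model that combines an LLM with learned symbolic knowledge.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple

State = Dict[str, int]  # toy state: inventory counts (an assumption)
Action = str


@dataclass
class CodeRule:
    """A symbolic action rule encoded as executable code (hypothetical format)."""
    name: str
    # Returns a failure message if the action is predicted to fail, else None.
    check: Callable[[State, Action], Optional[str]]


def need_wood_for_table(state: State, action: Action) -> Optional[str]:
    # Illustrative rule only; not taken from the paper.
    if action == "craft_table" and state.get("wood", 0) < 1:
        return "craft_table requires at least 1 wood"
    return None


class WorldModel:
    """Predicts whether an action succeeds by running every code rule."""

    def __init__(self, rules: List[CodeRule]) -> None:
        self.rules = rules

    def predict(self, state: State, action: Action) -> Tuple[bool, str]:
        for rule in self.rules:
            message = rule.check(state, action)
            if message is not None:
                return False, f"{rule.name}: {message}"
        # No rule fired. A fuller model would defer to an LLM prediction here.
        return True, "ok"


def toy_agent(state: State, feedback: List[str]) -> Action:
    """Stand-in for the LLM agent: proposes an action, revises it on feedback."""
    if any("requires at least 1 wood" in f for f in feedback):
        return "collect_wood"
    return "craft_table"


def plan_step(agent, model: WorldModel, state: State, max_replans: int = 3) -> Action:
    """Propose an action, check it against the world model, replan on failure."""
    feedback: List[str] = []
    action = agent(state, feedback)
    for _ in range(max_replans):
        ok, message = model.predict(state, action)
        if ok:
            return action
        feedback.append(message)
        action = agent(state, feedback)
    # Replan budget exhausted: return the last proposal without a final check.
    return action


model = WorldModel([CodeRule("need_wood_for_table", need_wood_for_table)])
first_choice = plan_step(toy_agent, model, {"wood": 0})
second_choice = plan_step(toy_agent, model, {"wood": 1})
```

In this sketch the predicted failure is fed back to the agent as text, so replanning happens before any action reaches the real environment. This is the sense in which the planner stands in for on-the-fly optimization in classical MPC.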