Recognizing and reasoning about occluded (partially or fully hidden) objects

is vital to understanding visual scenes, as occlusions frequently occur in

real-world environments and act as obstacles for spatial comprehension. To test

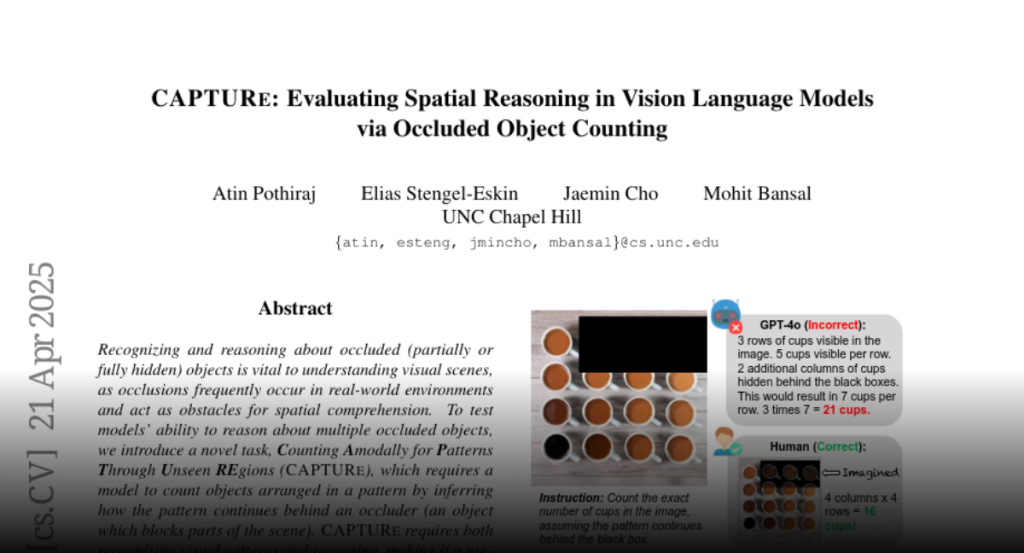

models’ ability to reason about multiple occluded objects, we introduce a novel

task, Counting Amodally for Patterns Through Unseen REgions (CAPTURe), which

requires a model to count objects arranged in a pattern by inferring how the

pattern continues behind an occluder (an object which blocks parts of the

scene). CAPTURe requires both recognizing visual patterns and reasoning, making

it a useful testbed for evaluating vision-language models (VLMs) on whether

they understand occluded patterns and possess spatial understanding skills. By

requiring models to reason about occluded objects, CAPTURe also tests VLMs’

ability to form world models that would allow them to fill in missing

information. CAPTURe consists of two parts: (1) CAPTURe-real, with manually

filtered images of real objects in patterns and (2) CAPTURe-synthetic, a

controlled diagnostic with generated patterned images. We evaluate four strong

VLMs (GPT-4o, Intern-VL2, Molmo, and Qwen2-VL) on CAPTURe, finding that models

struggle to count on both occluded and unoccluded patterns. Crucially, we find

that models perform worse with occlusion, suggesting that VLMs are also

deficient in inferring unseen spatial relationships: even the strongest VLMs

like GPT-4o fail to count with occlusion. In contrast, we find that humans

achieve very little error on CAPTURe. We also find that providing auxiliary

information of occluded object locations increases performance, underscoring

that the model error comes both from an inability to handle occlusion as well

as difficulty counting in images.
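
To make the task concrete, the following is a minimal toy sketch of amodal counting on a regular grid. It is an illustration only, not the CAPTURe data pipeline or evaluation code: the function names (`make_grid`, `visible_objects`, `amodal_count`), the axis-aligned box occluder, and the assumption of a fully regular grid with known spacing are all hypothetical choices made for this example.

```python
from itertools import product


def make_grid(rows, cols, spacing=1.0):
    """Return (x, y) centers of objects in a regular rows x cols grid (toy pattern)."""
    return [(c * spacing, r * spacing) for r, c in product(range(rows), range(cols))]


def is_occluded(point, box):
    """True if the point lies inside the axis-aligned occluder box (x0, y0, x1, y1)."""
    x, y = point
    x0, y0, x1, y1 = box
    return x0 <= x <= x1 and y0 <= y <= y1


def visible_objects(points, box):
    """Keep only the objects that the occluder does not hide."""
    return [p for p in points if not is_occluded(p, box)]


def amodal_count(visible, spacing=1.0):
    """Infer the total count, assuming the grid continues behind the occluder.

    Assumption: the pattern is a full rectangular grid whose extent is given
    by the bounding box of the visible objects.
    """
    xs = [x for x, _ in visible]
    ys = [y for _, y in visible]
    cols = round((max(xs) - min(xs)) / spacing) + 1
    rows = round((max(ys) - min(ys)) / spacing) + 1
    return rows * cols


# A 4 x 5 grid with an occluder hiding the four objects at x in {1, 2}, y in {1, 2}.
points = make_grid(rows=4, cols=5)
occluder = (0.5, 0.5, 2.5, 2.5)
visible = visible_objects(points, occluder)

visible_count = len(visible)          # what naive counting of visible objects gives
total_count = amodal_count(visible)   # what the task asks for
print(visible_count, total_count)
```

In this toy setting, counting only what is visible undercounts the pattern, while extending the visible grid through the occluded region recovers the full count. That gap is the kind of inference the task is designed to probe.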