Peng Sun1,2* ·

Xufeng Li1,3 ·

Tao Lin1†

1 Westlake University

2 Zhejiang University

3 Nanjing University

* These authors contributed equally. † Corresponding author.

[arXiv] [Project Page]

Summary:

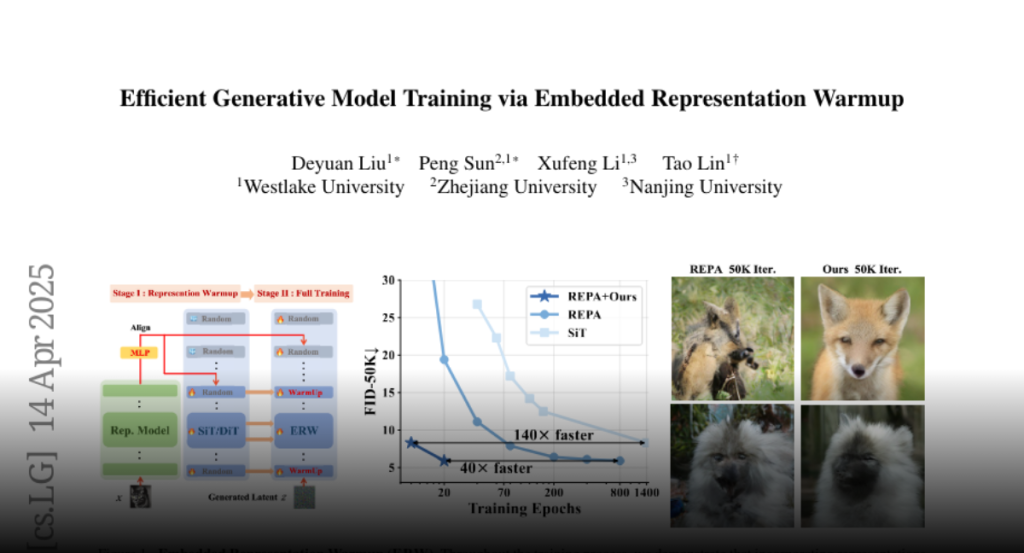

Diffusion models have made impressive progress in generating high-fidelity images. However, training them from scratch requires learning robust semantic representations and the generative process at the same time. Our work introduces Embedded Representation Warmup (ERW), a plug-and-play, two-phase training framework:

Phase 1 – Warmup: Initializes the early layers of the diffusion model with high-quality, pretrained visual representations (e.g., from DINOv2 or other self-supervised encoders).

Phase 2 – Full Training: Continues with standard diffusion training while gradually reducing the alignment loss, so the model can focus on refining generation.
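The two phases can be illustrated with a minimal, framework-free sketch. It is not the released implementation. The function names, the linear decay schedule, the cosine-based alignment loss, and the choice to use only the alignment term during warmup are all assumptions made for illustration. The text above only states that the alignment loss is gradually reduced.

```python
import math


def alignment_weight(step, warmup_steps, decay_steps, initial_weight=1.0):
    """Hypothetical schedule: constant weight during warmup, then linear decay to 0."""
    if step < warmup_steps:
        return initial_weight
    progress = (step - warmup_steps) / max(decay_steps, 1)
    return initial_weight * max(0.0, 1.0 - progress)


def cosine_alignment_loss(features, targets):
    """1 - cosine similarity between a model feature vector and an encoder feature vector."""
    dot = sum(f * t for f, t in zip(features, targets))
    norm = math.sqrt(sum(f * f for f in features)) * math.sqrt(sum(t * t for t in targets))
    if norm == 0.0:
        return 1.0  # treat a zero vector as unaligned
    return 1.0 - dot / norm


def total_loss(diffusion_loss, align_loss, step, warmup_steps, decay_steps):
    """Combine the two terms according to the current phase (assumed formulation)."""
    weight = alignment_weight(step, warmup_steps, decay_steps)
    if step < warmup_steps:
        # Phase 1 (warmup): assumed here to optimize only the alignment term.
        return weight * align_loss
    # Phase 2 (full training): diffusion loss plus a decaying alignment term.
    return diffusion_loss + weight * align_loss
```

In a real training loop, `features` would come from the early layers of the diffusion model and `targets` from a frozen pretrained encoder such as DINOv2. Both are stand-ins here.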

🔥 News

[2025/4/15] 🔥 ERW code and weights are released! 🎉 This includes the training and inference code, with weights available on Hugging Face.

Acknowledgement

This code is mainly built upon the REPA, LightningDiT, DiT, SiT, edm2, and RCG repositories.

BibTeX

@misc{liu2025efficientgenerativemodeltraining,
  title={Efficient Generative Model Training via Embedded Representation Warmup},
  author={Deyuan Liu and Peng Sun and Xufeng Li and Tao Lin},
  year={2025},
  eprint={2504.10188},
  archivePrefix={arXiv},
  primaryClass={cs.LG},
  url={https://arxiv.org/abs/2504.10188},
}