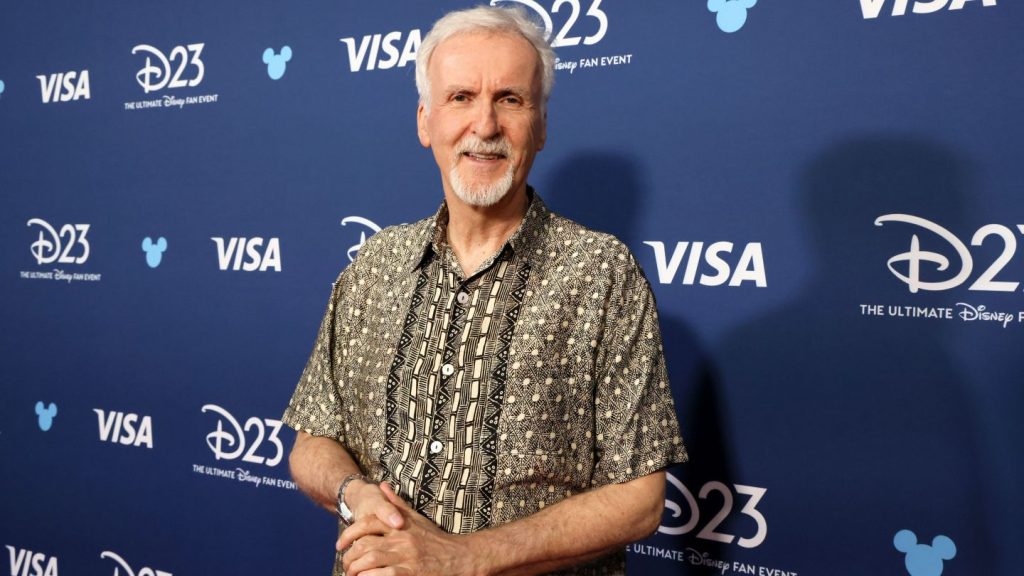

James Cameron, the Oscar-winning director of films like Avatar, Terminator and Titanic, appears cautiously optimistic about the role generative AI can play in filmmaking, though he is wary of the “in the style of” prompts that have proliferated since images in the style of Studio Ghibli flooded the internet over the past few weeks.

“I think we should discourage the text prompt that says, ‘in the style of James Cameron,’ or ‘in the style of Zack Snyder,’” Cameron said on a podcast Wednesday, adding that it “makes me a little bit queasy.”

And yet, Cameron acknowledges that the ability to create content that mimics great talents is undeniably interesting, and mirrors what he himself does in his own head.

“I aspire to be in the style of Ridley Scott, in the style of Stanley Kubrick. That’s my text prompt that runs in my head as a filmmaker,” Cameron said. “In the style of George Miller: wide lens, low, hauling ass, coming up into a tight close up. Yeah, I want to do that. I know my influences. Everybody knows their influences.”

Cameron was a guest on Boz to the Future, the podcast hosted by Andrew Bosworth, the CTO of the technology giant Meta. The latest episode, which dropped Wednesday, featured an extensive conversation about generative AI, with Cameron sounding optimistic about its use in special effects, and uncertain whether studios, tech giants and legislators should focus on regulating the inputs to the AI models, or the outputs.

Cameron, of course, joined the board of AI firm Stability AI last year. Stability is the company behind the Stable Diffusion image model.

“In the old days, I would have founded a company to figure it out. I’ve learned maybe that’s not the best way to do it. So I thought, ‘All right, I’ll join the board of a good, competitive company that’s got a good track record,’” Cameron said of the decision. “My goal was not necessarily to make a shit pile of money. The goal was to understand the space, to understand what’s on the minds of the developers. What are they targeting? What’s their development cycle? How much resources you have to throw at it to create a new model that does a purpose-built thing, and my goal was to try to integrate it into a VFX workflow.

“And it’s not just hypothetical, if we want to continue to see the kinds of movies that I’ve always loved and that I like to make and that I will go to see — call it Dune, Dune Two, something like that, or one of my films, or big effects-heavy, CG-heavy films — we’ve got to figure out how to cut the cost of that in half,” he continued. “Now that’s not about laying off half the staff and at the effects company. That’s about doubling their speed to completion on a given shot, so your cadence is faster and your throughput cycle is faster, and artists get to move on and do other cool things and then other cool things, right? That’s my sort of vision for that.”

When it comes to the controversial question of “training” AI models, Cameron seemed to suggest that regulators and lawyers should be more focused on the output of AI programs and tech, rather than the inputs and training data.

“A lot of the hesitation in Hollywood and entertainment in general, are issues of the source material for the training data, and who deserves what, and copyright protection and all that sort of thing. I think people are looking at it all wrong,” Cameron told Bosworth. “I’m an artist. Anybody that’s an artist, anybody that’s a human being, is a model. You’re a model already, you’ve got a three and a half pound meat computer.

“We’re models moving through space and time and reacting based on our training data,” he continued. “So my point is, as a screenwriter, as a filmmaker, if I exactly copy Star Wars, I’ll get sued. Actually, I won’t even get that far. Everybody’ll say, ‘Hey, it’s too much like Star Wars, we’re going to get sued now.’ I won’t even get the money. And as a screenwriter, you have a kind of built-in ethical filter that says, ‘I know my sources, I know what I liked, I know what I’m emulating.’ I also know that I have to move it far enough away that it’s my own independent creation. So I think the whole thing needs to be managed from a legal perspective, as to what’s the output, not what’s the input. You can’t control my input, you can’t tell me what to view and what to see and where to go. My input is whatever I choose it to be, and whatever has accumulated throughout my life. My output, every script I write, should be judged on whether it’s too close, too plagiaristic, whatever.”

Elsewhere in the conversation, Cameron outlines a vision in which more focused AI products help filmmakers realize their visions more fully, arguing that AI giants like OpenAI and, yes, even Meta are not really competing for Hollywood’s business.

“You look at OpenAI, their goal is not to make gen AI movies. I mean, we’re a little wart on their butt, I mean in terms of the scale you’re talking about, they want to make consumer products for 8 billion people,” Cameron said. “And I’m sure Meta is very much the same … movies are just a little, tiny application, a tiny use case. That’s the problem. So it’s going to be smaller, sort of boutique-type gen AI developer groups that I can get the attention of and say, ‘Hey, I’ve got a problem here. It’s called rotoscope.’”

Cameron is currently working on the next Avatar film, Avatar: Fire and Ash, which will be released in December, and reportedly will include a title card noting that no gen AI was used in creating the film.