Recent progress in diffusion models has significantly advanced various image
generation tasks. However, the current mainstream approach remains focused on
building task-specific models, which are inefficient when supporting a
wide range of different needs. While universal models attempt to address this
limitation, they face critical challenges, including generalizable task
instruction, appropriate task distributions, and unified architectural design.

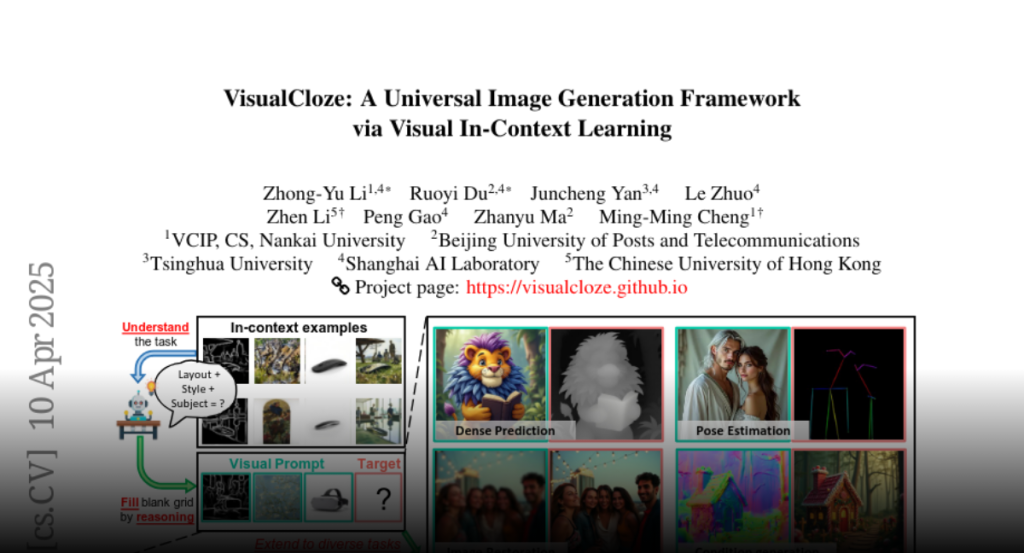

To tackle these challenges, we propose VisualCloze, a universal image
generation framework that supports a wide range of in-domain tasks,
generalization to unseen tasks, unification of multiple tasks in combinations
not seen during training, and reverse generation. Unlike existing methods that
rely on language-based task instruction, which leads to task ambiguity and weak
generalization, we integrate visual in-context learning, allowing models to
identify tasks from visual demonstrations. Meanwhile, the inherent sparsity of
visual task distributions hampers the learning of transferable knowledge across
tasks. To this end, we introduce Graph200K, a graph-structured dataset that
establishes various interrelated tasks, enhancing task density and transferable
knowledge. Furthermore, we uncover that our unified image generation
formulation shares a consistent objective with image infilling, enabling us to
leverage the strong generative priors of pre-trained infilling models without
modifying the architectures.