The use of artificial intelligence (AI) to generate synthetic voices has expanded across many applications, from virtual assistants to audiobook narration. However, AI voices often sound noticeably robotic and emotionless compared to human voices.

A few key audio adjustments can make AI voices sound more natural and human-like. This article offers five straightforward audio editing tips for reducing the “robotic effect” of AI-generated voices and improving the listener's experience.

Understanding the Reasons Behind Robotic Sound

The main factors behind the sterile, robotic quality of some AI voices include:

Stilted, uneven pace – Without natural rhythm and pacing, sentences sound choppy and unnatural, which tires listeners over time.

Lack of inflection and emotion – Monotone, robotic voices fail to convey the emotion and personality the context calls for, which distances users.

Improper pronunciation – Inaccurate pronunciation undermines credibility and clarity. Mispronounced names and uncommon words are especially revealing.

Synthesised audio artefacts – Generated audio can carry telltale artefacts that reveal its AI origin to careful listeners.

Tips to Make AI-Generated Voice Sound Less Robotic

Tip 1: Adjusting Speech Rate

Adjusting the baseline speech rate goes a long way toward sounding more human. A slightly slower rate lets words, and the gaps between sentences, land clearly instead of blurring together, which noticeably improves intelligibility.

Where the content calls for urgency, a brisker, more attentive pace conveys it. You can also introduce slight variations in pace so the delivery avoids a machine-perfect tempo; subtle, natural deviations add realism. Overall, tune the output speed so the delivery is neither sluggish nor rushed, and let the rate flex to suit the content.
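If your engine accepts SSML (the W3C Speech Synthesis Markup Language, which services such as Amazon Polly and Google Cloud Text-to-Speech support in some form), rate changes can be scripted instead of set by hand. The Python sketch below is an illustration, not Minimax's own syntax: the `vary_rate` helper and its default values are hypothetical. It wraps each sentence in a `<prosody rate="95%">`-style tag whose rate wobbles a few percent around a slightly slower baseline. Check which rate values your engine accepts; some also expect `version` and `xmlns` attributes on `<speak>`.

```python
import random
from xml.sax.saxutils import escape


def vary_rate(sentences, base_rate=95, jitter=4, seed=None):
    """Wrap each sentence in an SSML <prosody> tag with a slightly varied rate.

    base_rate and jitter are percentages of the engine's default speed
    (hypothetical defaults: about 5% slower, wobbling by up to 4 points).
    """
    rng = random.Random(seed)
    parts = []
    for sentence in sentences:
        # Nudge each sentence's rate within +/- jitter of the baseline so the
        # delivery never settles into a machine-perfect tempo.
        rate = base_rate + rng.randint(-jitter, jitter)
        parts.append(f'<prosody rate="{rate}%">{escape(sentence)}</prosody>')
    return "<speak>" + " ".join(parts) + "</speak>"


if __name__ == "__main__":
    script = ["Thanks for calling.", "Your order shipped this morning."]
    print(vary_rate(script, seed=7))
```

Passing a fixed `seed` makes the variation reproducible, which helps when comparing takes; setting `jitter=0` gives a steady rate.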

Tip 2: Using Natural Pauses

Well-placed pauses make AI-generated speech flow more conversationally. Pausing between sentences keeps ideas separate instead of letting them blur together, which supports clarity. A pause at a topic change signals to listeners that the direction is shifting.

Varying pause length also sustains interest by avoiding metronomic timing; both short and longer pauses have their place. Together, these techniques reduce the impression of disembodied “robo-speech” by giving the delivery space and weight. The right pacing encourages the active listening that comprehension depends on.

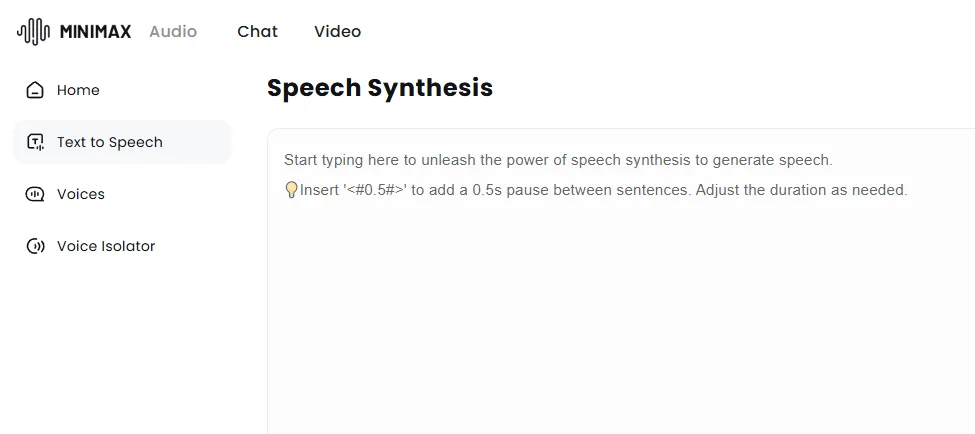

You can try the speech synthesis tool from Minimax AI audio, which lets you add pauses of varying length to AI-generated speech using simple commands in your text prompts.
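On engines that accept SSML rather than tool-specific commands, pauses are written as `<break time="500ms"/>` elements. As a minimal sketch, assuming an SSML-capable engine, the hypothetical `add_pauses` helper below takes paragraphs as lists of sentences. It inserts a short break between sentences and a longer one at each topic (paragraph) change, drawing every duration from a range so the timing never becomes metronomic. The millisecond ranges are illustrative starting points, not vendor recommendations.

```python
import random
from xml.sax.saxutils import escape


def add_pauses(paragraphs, sentence_ms=(250, 450), topic_ms=(700, 1000), seed=None):
    """Join paragraphs (lists of sentences) into SSML with varied <break> tags.

    Short breaks separate sentences; longer breaks mark a topic change
    between paragraphs. Durations are drawn from the given (min, max)
    millisecond ranges so no two pauses need be identical.
    """
    rng = random.Random(seed)
    chunks = []
    for p_index, sentences in enumerate(paragraphs):
        if p_index > 0:
            # Longer pause to signal a shift in topic.
            chunks.append(f'<break time="{rng.randint(*topic_ms)}ms"/>')
        for s_index, sentence in enumerate(sentences):
            if s_index > 0:
                # Shorter pause between sentences within the same topic.
                chunks.append(f'<break time="{rng.randint(*sentence_ms)}ms"/>')
            chunks.append(escape(sentence))
    return "<speak>" + " ".join(chunks) + "</speak>"


if __name__ == "__main__":
    script = [
        ["Your account is active.", "You can log in now."],
        ["Next, let's set up billing."],
    ]
    print(add_pauses(script, seed=3))
```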

Tip 3: Enhancing Emotion and Tone

Recent tools such as Minimax let you adjust vocal tone and emotion to give AI voices more relatable qualities. Matching the emotional tone to the content helps it resonate: somber topics call for sincerity rather than peppiness. Greater variation in tone, pitch and volume makes speech lively and engaging, so it sounds human rather than uniformly repeated.

Voice profile options that target qualities such as warmth and enthusiasm make a voice more relatable and engaging. Voices shouldn’t default to flat neutrality; even basic emotional variation goes a long way toward helping users connect with and enjoy the interaction.
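Standard SSML has no element for emotion, so dedicated emotion controls like Minimax's are vendor-specific. One portable approximation, sketched below under that assumption, maps a tone to `<prosody>` pitch, rate and volume settings. The `TONE_PRESETS` values and the `with_tone` helper are hypothetical and should be tuned by ear for your engine and voice.

```python
from xml.sax.saxutils import escape, quoteattr

# Hypothetical presets: prosody only approximates emotion, so treat these
# values as starting points rather than calibrated settings.
TONE_PRESETS = {
    "somber": {"pitch": "-10%", "rate": "90%", "volume": "soft"},
    "neutral": {"pitch": "medium", "rate": "100%", "volume": "medium"},
    "warm": {"pitch": "+5%", "rate": "97%", "volume": "medium"},
    "enthusiastic": {"pitch": "+12%", "rate": "108%", "volume": "loud"},
}


def with_tone(text, tone="neutral"):
    """Wrap text in an SSML <prosody> tag configured for the requested tone."""
    settings = TONE_PRESETS[tone]
    # quoteattr adds the surrounding quotes and escapes special characters.
    attrs = " ".join(f"{name}={quoteattr(value)}" for name, value in settings.items())
    return f"<speak><prosody {attrs}>{escape(text)}</prosody></speak>"


if __name__ == "__main__":
    print(with_tone("We're sorry to hear about the delay.", "somber"))
    print(with_tone("Your new plan is ready to go!", "enthusiastic"))
```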

Tip 4: Utilising Pronunciation and Intonation Features

Correcting speech errors has a big impact on perceived quality. Fixing mispronounced names shows extra care and supports branding efforts, so record or enter custom pronunciations for them. Refining the pronunciation of problematic words resolves the comprehension issues caused when uncommon terms are spoken incorrectly. Emphasis, delivered through a noticeable shift in inflection on chosen words or phrases, highlights important text and steers listeners' attention.
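SSML covers both parts of this tip: `<phoneme alphabet="ipa" ph="...">` overrides how a word is pronounced, and `<emphasis level="strong">` marks words for stress. The sketch below assumes an SSML-capable engine that accepts IPA transcriptions; the `LEXICON` entries and the `apply_pronunciations` helper are hypothetical examples of a custom pronunciation list for names and uncommon terms.

```python
import re
from xml.sax.saxutils import escape, quoteattr

# Hypothetical custom lexicon (word -> IPA). Add the names and uncommon
# terms your engine mispronounces.
LEXICON = {
    "Siobhan": "ʃɪˈvɔːn",
    "quinoa": "ˈkiːnwɑː",
}


def apply_pronunciations(text, lexicon=LEXICON, emphasize=()):
    """Return SSML with <phoneme> tags for lexicon words and <emphasis> for key words."""
    out = []
    # Split into word and non-word runs so spacing and punctuation survive.
    for token in re.findall(r"\w+|\W+", text):
        if token in lexicon:
            ph = quoteattr(lexicon[token])
            out.append(f'<phoneme alphabet="ipa" ph={ph}>{escape(token)}</phoneme>')
        elif token in emphasize:
            out.append(f'<emphasis level="strong">{escape(token)}</emphasis>')
        else:
            out.append(escape(token))
    return "<speak>" + "".join(out) + "</speak>"


if __name__ == "__main__":
    print(apply_pronunciations("Siobhan recommends the quinoa today.", emphasize={"today"}))
```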

Tip 5: Experimenting with Different Voice Options

Exploring alternative synthetic voice models can unlock greater realism for a particular use case. Trying multiple language options lets cultural specificity come through instead of defaulting to English; representation matters. Voice profiles of different ages and characters widen the range of tones and personalities available. Whatever you choose, the voice should suit the use case.

Specialty voices add distinctive character to applications that benefit from a non-generic vocal signature with built-in warmth. Avoiding over-reliance on a single default voice with limited emotional range gives you variety from the start and lets you pick the best-fitting voice profile for each project.
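A practical way to experiment is to render the same script with several candidate voices and compare them by ear. The sketch below builds one SSML document per voice using the standard `<voice name="...">` element; the helper name and the voice names are placeholders, since real voice names differ from engine to engine.

```python
from xml.sax.saxutils import escape, quoteattr


def audition_scripts(text, voice_names):
    """Return {voice name: SSML document} so every candidate reads the same script."""
    body = escape(text)
    return {
        name: f"<speak><voice name={quoteattr(name)}>{body}</voice></speak>"
        for name in voice_names
    }


if __name__ == "__main__":
    # Placeholder voice names; substitute the ones your engine actually offers.
    scripts = audition_scripts("Welcome back to the show.", ["voice-a", "voice-b"])
    for name, ssml in scripts.items():
        print(name, ssml)
```

Keeping the script fixed while only the voice changes makes the comparison fair: any difference you hear comes from the voice, not the text.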

Real-World Applications of Improved AI Voices

Refined synthetic voices open doors across industries. In customer service, warm, polished AI voices improve inquiries through clearer articulation and a sense of empathy. In audiobooks and tutorials, more relatable narration uses intonation to bring out moments of drama and humour and to connect with listeners. Accessibility applications use them to deliver news, entertainment and educational material to people with visual, reading and other impairments.

As adoption spreads, optimised AI voices can have positive ripple effects on communication, connection and understanding.

Conclusion

As shown above, small changes in settings go a long way toward eliminating the “robotic voice” sound that often undermines AI voice systems. We encourage creators to apply these basic techniques (pacing, pauses, emotion, pronunciation and vocal range) to make their audio more engaging for listeners.

With persistent testing and attention to vocal subtlety, future AI voices are poised to reshape expectations of what machines can do as “uncanny valley” effects steadily diminish.