“‘Who controls the past,’ ran the Party slogan, ‘controls the future: who controls the present controls the past.’”

— 1984, George Orwell

In February, I wrote an essay here called Elon Musk’s terrifying vision for AI, warning that “the richest man in the world [was trying to build] a Large Language Model that spouts propaganda in his image”.

But he couldn’t get the vision to work. He had hoped Grok would say stuff like this, echoing his own beliefs.

But the Grok output in the tweet here appears in hindsight to have been Elon’s fantasy, rather than Grok’s reality. Nobody could actually replicate it. Here’s what I got from Grok 3 this morning:

This output comes much closer to the consensus view, rather than Elon’s view.

It turns out that, thus far, the major LLMs haven’t been that different from one another, as multiple studies have shown. Almost every LLM, even his own, could be argued, for example, to have a slight liberal bias. Probably none of them would (without special prompting) rant on The Information the way that Elon did in February.

No LLM is fully coherent in its “beliefs”, because by nature they parrot a variety of conflicting things from their training, which is itself inconsistent, rather than reasoning their way to a coherent view the way a rational person might. But other things being equal their “opinions” tend to be bland and middle of the road.

Why do so many models tend to cluster not far from the middle? Necessity has (heretofore) meant that pretty much every recent LLM has been trained on more or less the same data. (Why? LLMs are desperately greedy for data, so pretty much everyone has used every bit of data they can scrape from the internet, and there is only one internet.)

Short of using some fine-tuning tricks that likely reduce accuracy, it’s actually pretty hard to make an LLM that stands out from the pack – if you use the same data as everyone else. Grok is maybe a little closer to the center, but hardly as far right as Mr. Musk himself is.

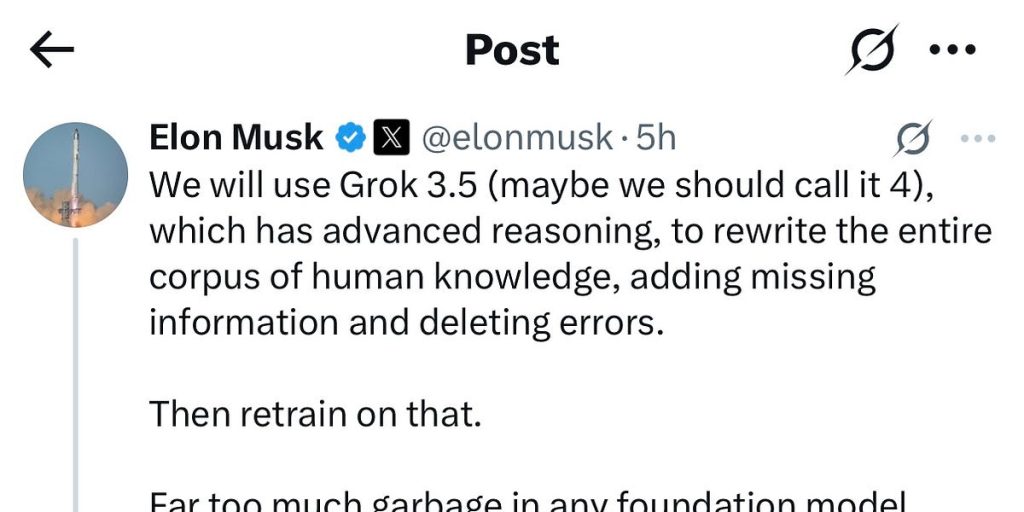

Unfortunately, Musk seems finally to have figured this out. A bit closer to the center isn’t going to satisfy him, so he hit on a different technique to achieve the effect that he wants. He is going to rewrite the data:

Which is to say he is going to rewrite history. Straight out of 1984.

He couldn’t get Grok to align with his own personal beliefs so he is going to rewrite history to make it conform to his views.

§

Elon Musk couldn’t control Washington, so now he is going to try to control your mind instead.

If you don’t think Altman and Zuckerberg are going to try to do the same, you aren’t paying attention. Together with Jony Ive, Altman is apparently aiming to build a smart necklace or similar, presumably with 24/7 access to everything you say, and to make his LLMs (which he can shape as he pleases) your constant companion. Surveillance tools Orwell himself barely dreamed of.

Zuckerberg, meanwhile, is spending his billions trying to make AI to his own liking (and trying his best to hire Altman’s best staff away, apparently offering $100 million annual salaries to some). The Chinese government has already made conformity to their Party line a legal requirement; Putin is probably on it, and it’s not inconceivable that the present US government might pressure companies to do the same.

LLMs may not be AGI, but they could easily become the most potent form of mind control ever invented.

Gary Marcus is terrified of what is to come, and doesn’t know whether democracy as we knew it will survive.