#inftyformer #infinityformer #transformer

Vanilla Transformers are excellent sequence models, but they suffer from very harsh constraints on the length of the sequences they can process. Several attempts have been made to extend the Transformer’s sequence length, but few have gone beyond a constant-factor improvement. This paper presents a method, based on continuous attention mechanisms, that attends to an unbounded past by representing it as a continuous signal rather than a discrete sequence. This enables the Infty-Former to enrich the current context with global information, which improves performance on long-range dependencies in sequence tasks. The paper also introduces sticky memories, which highlight past events of particular importance and elevate their representation in the long-term memory.
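To make the "past as a continuous signal" idea more concrete, here is a minimal NumPy sketch, not the authors' code. It assumes Gaussian radial basis functions on [0, 1] fitted by ridge regression, and a concatenate-then-contract update in which the old signal is resampled uniformly and squeezed into the first `tau` fraction of the interval. The function names, the basis width, and the defaults for `ridge`, `num_samples`, and `tau` are all illustrative assumptions.

```python
import numpy as np


def rbf_basis(positions, num_basis):
    """Evaluate num_basis Gaussian bumps at the given positions in [0, 1].

    Returns an array of shape (len(positions), num_basis).
    """
    centers = np.linspace(0.0, 1.0, num_basis)
    width = 1.0 / num_basis  # illustrative choice, not taken from the paper
    t = np.asarray(positions, dtype=float)[:, None]
    return np.exp(-0.5 * ((t - centers[None, :]) / width) ** 2)


def fit_signal(vectors, positions, num_basis, ridge=1e-3):
    """Ridge-regress vectors (length, dim) onto the basis.

    Returns coefficients of shape (num_basis, dim); they are the whole memory.
    """
    f = rbf_basis(positions, num_basis)
    gram = f.T @ f + ridge * np.eye(num_basis)
    return np.linalg.solve(gram, f.T @ vectors)


def evaluate_signal(coeffs, positions):
    """Read the continuous signal back at arbitrary positions in [0, 1]."""
    return rbf_basis(positions, coeffs.shape[0]) @ coeffs


def extend_memory(coeffs, new_vectors, num_samples=64, tau=0.5):
    """Fold a new chunk into the memory without growing it."""
    grid = np.linspace(0.0, 1.0, num_samples)
    old_vectors = evaluate_signal(coeffs, grid)  # uniform resampling of the past
    n_new = len(new_vectors)
    # The old signal is contracted into [0, tau]; the new chunk fills (tau, 1].
    new_positions = tau + (1.0 - tau) * np.arange(1, n_new + 1) / n_new
    all_vectors = np.concatenate([old_vectors, new_vectors], axis=0)
    all_positions = np.concatenate([tau * grid, new_positions])
    return fit_signal(all_vectors, all_positions, coeffs.shape[0])
```

However many chunks pass through `extend_memory`, the coefficient matrix keeps its `(num_basis, dim)` shape, which is why the memory is unbounded in time but fixed in size, at the price of ever coarser resolution for older content. Sticky memories would replace the uniform `grid` with positions sampled according to how much attention each region received in the past.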

OUTLINE:

0:00 – Intro & Overview

1:10 – Sponsor Spot: Weights & Biases

3:35 – Problem Statement

8:00 – Continuous Attention Mechanism

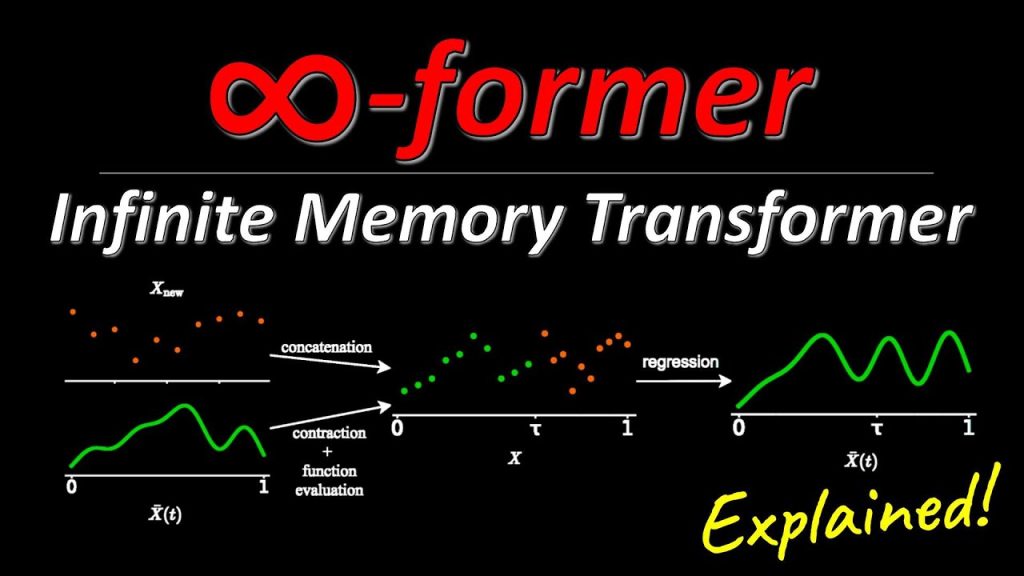

16:25 – Unbounded Memory via concatenation & contraction

18:05 – Does this make sense?

20:25 – How the Long-Term Memory is used in an attention layer

27:40 – Entire Architecture Recap

29:30 – Sticky Memories by Importance Sampling

31:25 – Commentary: Pros and cons of using heuristics

32:30 – Experiments & Results

Paper:

Sponsor: Weights & Biases

Abstract:

Transformers struggle when attending to long contexts, since the amount of computation grows with the context length, and therefore they cannot model long-term memories effectively. Several variations have been proposed to alleviate this problem, but they all have a finite memory capacity, being forced to drop old information. In this paper, we propose the ∞-former, which extends the vanilla transformer with an unbounded long-term memory. By making use of a continuous-space attention mechanism to attend over the long-term memory, the ∞-former’s attention complexity becomes independent of the context length. Thus, it is able to model arbitrarily long contexts and maintain “sticky memories” while keeping a fixed computation budget. Experiments on a synthetic sorting task demonstrate the ability of the ∞-former to retain information from long sequences. We also perform experiments on language modeling, by training a model from scratch and by fine-tuning a pre-trained language model, which show benefits of unbounded long-term memories.
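The claim that attention cost becomes independent of context length can be illustrated with a small sketch. It assumes the memory is stored as coefficients over Gaussian basis functions and that the attention density is a Gaussian with mean `mu` and standard deviation `sigma`; in that case the expected value of each basis function has a closed form. Here `mu` and `sigma` are plain inputs rather than being predicted from a query, and the density is integrated over the whole real line rather than truncated to [0, 1]; both are simplifications made for this example, and all names are hypothetical.

```python
import numpy as np


def expected_basis(mu, sigma, centers, width):
    """Closed form of E[exp(-(t - c)^2 / (2 * width^2))] for t ~ N(mu, sigma^2).

    Returns one value per basis center c.
    """
    var = sigma ** 2 + width ** 2
    return width / np.sqrt(var) * np.exp(-0.5 * (centers - mu) ** 2 / var)


def continuous_attention(coeffs, mu, sigma, centers, width):
    """Context vector: expectation of the memory signal under the density.

    coeffs has shape (num_basis, dim). The cost is O(num_basis * dim), no
    matter how many tokens were compressed into coeffs.
    """
    return expected_basis(mu, sigma, centers, width) @ coeffs


# Usage: attend around position 0.3 of a memory with 32 basis functions.
centers = np.linspace(0.0, 1.0, 32)
coeffs = np.random.default_rng(0).normal(size=(32, 8))
context = continuous_attention(coeffs, mu=0.3, sigma=0.05, centers=centers, width=1.0 / 32)
print(context.shape)  # (8,)
```

A narrow `sigma` reads out a sharp slice of the past, while a wide one averages over a long stretch; either way the work done per query depends only on the number of basis functions.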

Authors: Pedro Henrique Martins, Zita Marinho, André F. T. Martins


